Deploying VMware NSX with Cisco ACI as the Physical Switch Fabric Design Guide (Version: 2024)

Introduction

This VMware NSX® design guide offers an enhanced solution for deploying NSX networking and security virtualization with Cisco ACI as the IP switch fabric. The intended audience of this paper is network and virtualization architects interested in deploying NSX network virtualization solutions. NSX is the leading SDN solution for the data center, providing multiple-site cloud networking and security. NSX is a game-changing solution designed to solve the complex challenges of multi-cloud networking and security—all while being easy to operationalize via a single pane of glass. Networking and security are critical to achieving scale with the cloud operating model.

This guide provides guidance and best practices for leveraging NSX with Cisco ACI. NSX runs over any physical switch fabric. This document provides a prescriptive physical switch fabric setup for data centers running Cisco ACI switching, with the goal of maximizing interoperability with VMware Cloud Foundation and vSphere infrastructures running NSX.

Applications Drive Infrastructure Requirements

The digital business era has increased the need for speed and efficiency in application development and customization. IT must provide expedient delivery, service, and security to keep pace, as customized application delivery directly impacts an organization's success.

- Inherent infrastructure firewall model – Security policy for application flows, whether east-to-west or north-to-south, should be ingrained in the infrastructure. Applying appropriate per-flow policy is operationalized by writing rules applicable to the context of each workload. Each endpoint in a workflow attains policy in its inherent and natural connectivity to another endpoint. Service graphs and policy-based redirects are not inherent architectural methods for policy enforcement. These latter methods are tedious, often do not scale operationally, are difficult to troubleshoot, and often lengthen mean time to repair.

- Infrastructure independent – Deploying applications with diverse needs is a chore unto itself. Legacy deployment schemes have bonded the viability of an application to specific physical components of the switched fabric. This creates inherent dependencies for servicing and securing applications. Legacy hardware application deployment models added operational variances based on the physical devices of a site. Untying the application from the infrastructure allows a cohesive operational model to be used across all sites regardless of the infrastructure. Cloud-based IT operational models are moving toward an approach that is agnostic to the hardware infrastructure.

- Security everywhere – Perimeter security models have proven insufficient, as evidenced by the numerous reported exploits occurring deep within the data center and the cloud. The security policy must address attacks from the public against any site and location, and it must protect all dependent application flows and the ever-increasing east-to-west communication paths. Security must also position every workload as its own demilitarized zone (DMZ). Security must wrap around all application frameworks and their respective tiers, whether they use traditional virtual machine deployments, bare metal, containers, or the microservices composing the newer cloud-native applications.

- Diverse application frameworks, sites, and clouds – As previously mentioned, application development is accelerating. Adding new frameworks, including PaaS, modern application frameworks, and a hybrid set of sites inclusive of clouds, requires an innovative approach to networking and security. A software-only network virtualization solution aids the agility that IT operations require for various tasks. Moving or failing over workloads between diverse locations, managing an ever-changing set of application frameworks, and extending the platform to introduce new services and features are only a fraction of these tasks.

- New: NSX Multi-Tenancy Framework – NSX introduced a multi-tenancy framework in NSX 4.1 and 4.1.1. The native multi-tenancy in NSX 4.1.0 allows multiple users to consume the platform in parallel without the risk of overlap or disruption. This makes it possible to give users direct access to NSX while segmenting each within an isolated environment where they can configure their own NSX objects and apply rules only to their own workloads.

NSX Network Virtualization Considerations

Easing the burden of data center architecture solutions is at a turning point. Solutions before this modern era introduced modest, gradual, and somewhat evolutionary changes. A new mindset began whereby a software-defined network managing a virtualized network became the desired norm. VMware NSX introduced the first fully software-only, hardware-independent approach to solving this need. With the mainstreaming of VMware NSX network virtualization and an adoption rate second to none, NSX exemplifies the approach to solving network and security problems within the data center. Further, this desire rapidly evolved beyond data center operations for application deployment, security, and availability to a holistic view of operationalizing the primary data center operations, multiple sites, the network edge, and across the cloud.

By leveraging a software-defined, programmable network via NSX, the modern data center network combines networking virtualization plus storage and compute virtualization to realize the full potential of the software-defined data center (SDDC). Figure 1 displays the integration of network virtualization and security with other core platform components of VMware Cloud Foundation.

Figure 1: NSX as the core networking and security component of VMware Cloud Foundation

NSX is the de facto standard for defining a network virtualization platform in the software-defined data center (SDDC). It delivers a more agile operational model for vSphere-hosted virtual workloads and transforms the economics of network and security operations. NSX lets you treat the physical network as a pool of transport capacity that needs no specialized features, removing the need to attach network and security services to various physical and virtualized appliances. NSX contains the VMware Firewall and the VMware Firewall with Advanced Threat Prevention, which deliver policy via standardized networking with no logical or physical modifications required for policy enforcement.

NSX virtual networking runs atop a physical IP switch fabric supported by any networking vendor. IT teams look to automate the network required for workload connectivity as data center networking evolves. But too often, the ideals of software-defined networking are entangled with a complex, hardware-dependent switching model to deliver a software-defined experience. Cloud-like networking solutions do not have a dependency linked to the physical network. Research analysts, legacy-minded network professionals, and others who define it in such ways disregard the market or customers they purport to serve. Customers choosing a hardware-dependent solution have suffered inevitable delays in fabric deployment and application delivery, a wider scope for troubleshooting, and longer mean time to repair (MTTR), all of which drive IT teams toward alternative cloud solutions. For instance, customer X's guest network team chooses a hardware SDN switch model yet fails to deliver applications at the speed the business requires due to one or more of the following issues:

- Overwhelming solution complexity, delaying deployment

- Unforeseen operational overhead of required services promised by the hardware-bound physical network to enforce security policy

A fully software-defined model decouples the physical infrastructure from the application's dependency requirements to simplify the physical network's operations. Reducing the application's dependencies on hardware-defined assets provides flexible service deployment for a more agile workload consumption approach. Reducing hardware dependency is the heart of the cloud service consumption value in an application-centric deployment and service model.

This document guides networking and virtualization architects interested in establishing a programmable network with the full functionality of NSX networking and security while using Cisco ACI for the physical network. While neither solution is dependent on the other, some dependencies ensure interoperability. This paper discusses the fundamental building blocks of NSX with VMware vSphere and ESXi™ and provides a prescriptive configuration for the Cisco ACI switch fabric.

Further, this document continually provides necessary updates, extensive additions to our reference design for NSX networking on Cisco ACI, and helpful best practices. Pictures detailing configuration and a more detailed discussion of the required configuration elements are included.

Important: The reference guidance provided in this document facilitates a stable, resilient physical switch fabric. Any deviation from the recommendations may cause challenges in the deployment and operations of both NSX-T and the VMware Cloud Foundation deployed on a Cisco ACI fabric because the Cisco ACI fabric is not a typical networking deployment. Following the deployment guidance offered in this document normalizes the Cisco ACI fabric, reduces deployment timeframes, alleviates complexity, and avoids introducing inappropriate hardware entanglements into the management of the SDDC stack of the vSphere environment.

NSX is agnostic to any switch fabric, including any Cisco Data Center Nexus switching. We recommend deploying NSX on standardized switch fabrics like Nexus switches running NX-OS. Cisco NX-OS allows flexibility of topology and features supported by various 9000 series Nexus switches, including the legacy Nexus switching (Nexus 56xx, 6xxx, 7xxx, 3xxx, and older 9xxx series). Fabric management with Cisco Nexus Dashboard can provide much the same in fabric automation.

- Design Guide for NSX with Cisco NX-OS and UCS (coming)

These guides provide overall design guidance for NSX deployments across one or more sites:

This document assumes that the customer understands Cisco ACI and NSX well. Table 1 provides a complementary view of the capabilities provided by NSX and Cisco ACI when used in conjunction with one another. Customers have requested such interoperability as it gives them the full benefit of a software-defined programmable network overlay with NSX Data Center, easing the automation of networking and services. At the same time, it provides an inherently embedded adaptive micro-segmentation feature set of virtual and physical workloads. Cisco ACI enables customers to build and control the physical underlay fabric.

| Full NSX Features | Cisco ACI Underlay Features |
| --- | --- |

Table 1: VMware value proposition with NSX and Cisco ACI contributing features

IMPORTANT:

Cisco ACI has multiple modes of operation. Cisco ACI's "network-centric" operational mode provides the least complex interoperable solutions. Cisco ACI in network-centric mode provides the greatest compatibility and interoperable servicing for VMware Cloud Foundation and vSphere with NSX. VMware delivers a supported model, which is outlined in this document. More specifically, the following methods or service features are not supported:

- There was never support for Cisco Application Virtual Switch running on VMware vSphere® 5.5, vSphere 6.0, or vSphere 6.5 in any vSphere deployment.

- Cisco Nexus 1000v cannot be used with NSX and is deprecated as of vSphere 6.5.1. Installation will not be possible for future vSphere releases.

- VMware vSphere Distributed Switch™ cannot be controlled by the Cisco ACI plug-in nor Cisco ACI Virtual Networking (otherwise known as Virtual Machine Management, VMM). ACI VMM was developed independently and is outside the partner support model VMware by Broadcom has in place. All support and subsequent risks for using ACI VMM will be handled solely by Cisco. See the Cisco documentation about out-of-synchronization issues that can arise from using VMM. VMware supports using vSphere APIs for VDS management and operation, but not the unintended extensions imposed by split-brain operations from third-party integrations such as Cisco ACI VMM.

- Cisco Nexus 1000VE is not supported.

NOTE: For supported interoperability, objects of the listed VMware technologies, such as VMware vSphere and the vSphere Distributed Switch, VMware Kubernetes for vSphere, Tanzu Kubernetes, Tanzu Kubernetes Grid (TKG), Tanzu Kubernetes Grid Integrated (TKGI), NSX, and vSphere Distributed Port Groups managed via NSX Manager (whether VLAN-backed or overlay-backed), must never be provisioned through an API integration or an unsupported third-party vCenter plug-in tied to the management of the physical switching. VMware by Broadcom supports automation of the vSphere Distributed Switch and its objects when there is a clear line of demarcation from the switch fabric and the switch is managed independently of the physical switching fabric. Cisco ACI utilizes ACI VMM for its Cisco Virtual Networking, which may involve virtual machines, containers, and service virtual machines such as the Cisco ACI Virtual Edge (Cisco AVE). Cisco AVE requires Cisco ACI VMM, as VMM is a prerequisite for setting up Cisco AVE.

VMware by Broadcom advocates an on-premises private cloud environment free from the entanglements of the physical infrastructure.

A more in-depth discussion of the management of the vSphere Distributed Switch is found in section 1.4 Native Distributed Switch Management.

NSX and Private Cloud Networking

Traditional enterprise networks were built by connecting physical devices (computers, servers, routers, and switches) to each other. After setting up a network, security solutions were bolted on at the end. Manual changes to the network were necessary to institute security policy, leaving a wide margin for error and increasing the potential for costly outages.

The emergence of software-driven networks has challenged these existing norms. Modern technologies allow software abstraction of a network's hardware-based feature set. Automation and programmability minimize a company's operational complexity by replacing complicated manual tasks, enabling better scale and reliability. Today's advancement of software-defined networking provides businesses a means to modernize their network with software and open a new set of possibilities driving business innovation.

Most of today's enterprises use or plan to use multiple clouds to run their business. Everything of value connects to the network and delivers new customer experiences through the cloud. Users connect not only through their desktop and laptop computers but also via mobile devices. Applications run everywhere across a company's infrastructure. Yet, many companies don't clearly understand the security and connectivity of their applications and devices across the data center, branch, cloud, and edge.

These emerging technology trends increase enterprise security complexity and challenge the limitations of hardware-driven networks. Software-based networking creates a business fabric that securely and consistently connects a company's data centers, carrier networks, branches, endpoints, and clouds. And it's all done independently of the underlying network hardware.

Virtual networks run more efficiently and lower operational costs by automating network infrastructure functions such as maintenance, patching, and updating. They also increase uptime, improve service, and reduce costs—enabling organizations to maximize the value of their existing network hardware while creating innovation and business opportunities.

In addition to automating data centers, companies are turning to virtualized networks to improve security. With software, security policies can be set once and deployed across the network, following applications, services, and devices wherever they go. Technologies such as micro-segmentation, which enables security around individual workloads, provide additional protection.

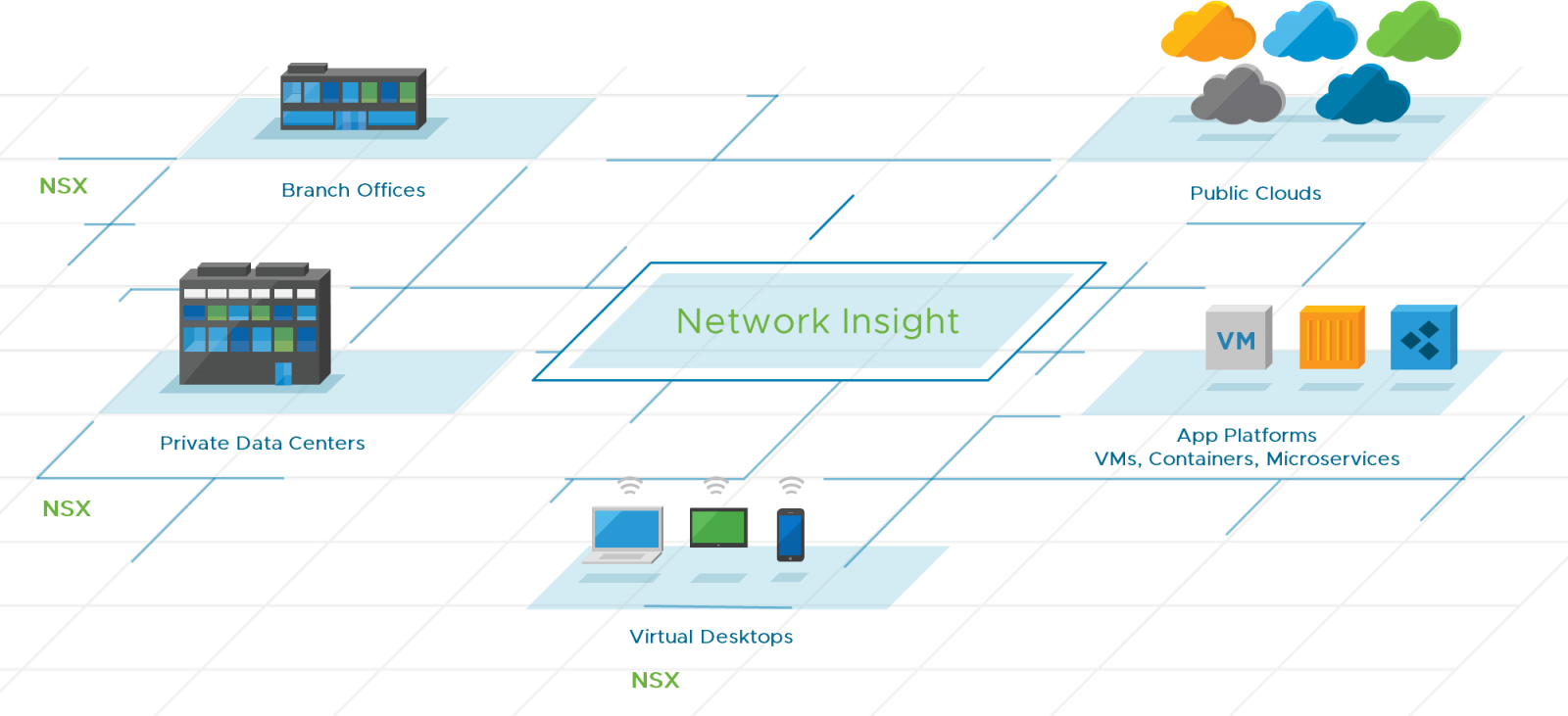

Figure 2: VCF Networking by NSX is the cloud network operating model with network visibility to your on-premises data center

Positioning NSX as the virtualized network solves these challenges because NSX brings networking and security closest to the workload and carries the policies along with the workload. This unique ability enables customers to solve bona fide business problems. When customers deploy NSX with Cisco ACI as the underlay, they gain all these incremental benefits, which are not attainable with an automated hardware underlay-based solution.

Let us now look at some of the critical technical benefits, requirements, and operational aspects of NSX.

1 NSX Architecture and Operations

NSX enables private cloud teams to build logical services for networking and security on any infrastructure or site without making configuration changes to the physical infrastructure to deploy new workloads. For example, once the Cisco ACI fabric is configured to provide IP connectivity and the routing configuration is provisioned as described in this design, secure application workload deployments proceed with NSX. For the most part, no operational modification of the Cisco ACI physical switch fabric is required. By contrast, a side effect inherited by any hardware-defined architecture is the operational differentiation required to transition a private cloud model across sites with heterogeneous hardware, sites with managed hardware services, or managed providers with disparate switch hardware.

NSX is architected for any site, device, and application framework. As NSX matures, updates and new features enhance these highly important aspects. NSX achieves operational symmetry by abstracting the endpoints and providing a common framework for policy operations, and software is driving the model. NSX has already achieved a framework for policy management across on-premises private clouds to resources extending into multiple hyperscaler partners.

As the first SDN software-only network platform, NSX implements three separate but integrated planes: management, control, and data. The three planes are implemented as sets of processes, modules, and agents residing on two types of nodes: manager appliances and transport hosts.

Figure 3: NSX SDN Architecture for Private Cloud, VMs, Containers

The following section provides an overview of the NSX functional features and primary use case examples showing how applications can be deployed with NSX as the network virtualization platform of today’s SDDC.

1.1 Manager Operations

The management plane for NSX-T is a cluster of three NSX-T Managers provided in a virtual machine form factor. The NSX-T Manager provides the following functions:

- The manager provides an aggregated system view and is the centralized network management component for the NSX ecosystem.

- The management plane provides a single API entry point to the system, reachable through the RESTful API or the user interface.

- The management plane persists user configuration, handles user queries and performs operational tasks on all management, control, and data plane nodes in the system.

- The management plane’s virtual appliance is responsible for storing the desired configuration in its database.

For a more in-depth understanding of the management, control, and data plane components of the respective NSX platforms, please review:

1.2 Control Plane Operations

NSX provides multicast-free overlay connectivity for logical switching and routing, with control plane functionality provided by a highly available controller cluster. Removing the necessity of layer 3 multicast from the underlay network greatly simplifies physical network configuration. Additionally, the controller cluster provides distributed discovery and efficient management of control plane functions for the NSX overlay. The services provided by the highly available clustered controllers are MAC, VTEP/TEP, and ARP table management, plus distribution of the dynamic routes collected from the distributed router control virtual machine.
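The table management described above can be pictured with a small, purely illustrative model. The sketch below is not NSX code: the class name `OverlayControlTable`, its methods, the segment ID, and all MAC and IP values are hypothetical, chosen only to show how a centrally maintained MAC-to-TEP mapping removes the need for multicast-based flooding and learning in the underlay.

```python
from dataclasses import dataclass, field


@dataclass
class OverlayControlTable:
    """Toy stand-in for the MAC-to-TEP table a controller cluster distributes."""

    # (segment_id, vm_mac) -> IP address of the TEP on the host running that VM
    entries: dict = field(default_factory=dict)

    def learn(self, segment_id: int, vm_mac: str, tep_ip: str) -> None:
        """Record or update where a workload lives, for example after a migration."""
        self.entries[(segment_id, vm_mac.lower())] = tep_ip

    def lookup(self, segment_id: int, vm_mac: str):
        """Return the destination TEP IP, or None if the MAC is unknown."""
        return self.entries.get((segment_id, vm_mac.lower()))


# Hypothetical workloads on one logical segment, hosted behind two different TEPs.
table = OverlayControlTable()
table.learn(5001, "00:50:56:AA:BB:01", "172.16.10.11")
table.learn(5001, "00:50:56:AA:BB:02", "172.16.20.12")
```

A sending host only needs the answer to `table.lookup(...)` to address the outer IP header, so the physical fabric sees ordinary unicast IP traffic between tunnel endpoints.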

Terminology

- NSX Data Center for vSphere uses a VTEP (virtual tunnel endpoint) for its VXLAN overlay.

- NSX-T Data Center employs a TEP (tunnel endpoint) for its Geneve overlay.

Either overlay, VXLAN or Geneve, used in all versions of NSX is agnostic to the underlay. The underlay fabric is only required to provide IP connectivity for the tunneling endpoints used by the respective overlays of NSX. Our NSX designs require only a highly interoperable switch fabric with an appropriately set MTU, which any switch fabric vendor can provide.
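The MTU requirement follows from simple header arithmetic. The sketch below assumes an IPv4 underlay and no inner VLAN tag; the function name `required_underlay_mtu` is illustrative. Header sizes are the standard ones: 20 bytes for the outer IPv4 header, 8 for UDP, 8 for the VXLAN header or the fixed part of the Geneve header, and 14 for the inner Ethernet header carried inside the tunnel. Geneve options are variable in length, in multiples of 4 bytes up to 252.

```python
OUTER_IPV4 = 20      # outer IPv4 header without IP options
OUTER_UDP = 8        # both VXLAN and Geneve are carried over UDP
ENCAP_BASE = 8       # VXLAN header, or the fixed part of the Geneve header
INNER_ETHERNET = 14  # the inner frame's Ethernet header travels inside the tunnel


def required_underlay_mtu(inner_mtu: int = 1500, geneve_options: int = 0) -> int:
    """IP MTU the fabric must carry so an inner packet of inner_mtu needs no fragmentation."""
    if geneve_options % 4 or not 0 <= geneve_options <= 252:
        raise ValueError("Geneve options are a multiple of 4 bytes, at most 252")
    return (inner_mtu + INNER_ETHERNET + ENCAP_BASE + geneve_options
            + OUTER_UDP + OUTER_IPV4)


vxlan_mtu = required_underlay_mtu(1500)        # VXLAN, or Geneve without options
geneve_mtu = required_underlay_mtu(1500, 64)   # Geneve carrying 64 bytes of options
```

With a standard 1500-byte workload MTU the result is 1550 bytes before any Geneve options, which is why overlay designs typically call for an underlay MTU of 1600 bytes or more.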

1.3 Logical Layer Connectivity

NSX logical layer connectivity offers drastic improvements over legacy physical deployment models. Leveraging an overlay allows logical layer 2 segments over physically routed environments. Layer 2 adjacency is no longer tied to the physical infrastructure, whether that infrastructure is switched or routed: an overlay network enables VMs to be in the same subnet and layer 2 adjacent, providing topology-independent connectivity. This enables mobility beyond the structured topology constraint imposed by physical networking. NSX logical layer 2 adjacency does not require complicated underlay management or control plane operations.

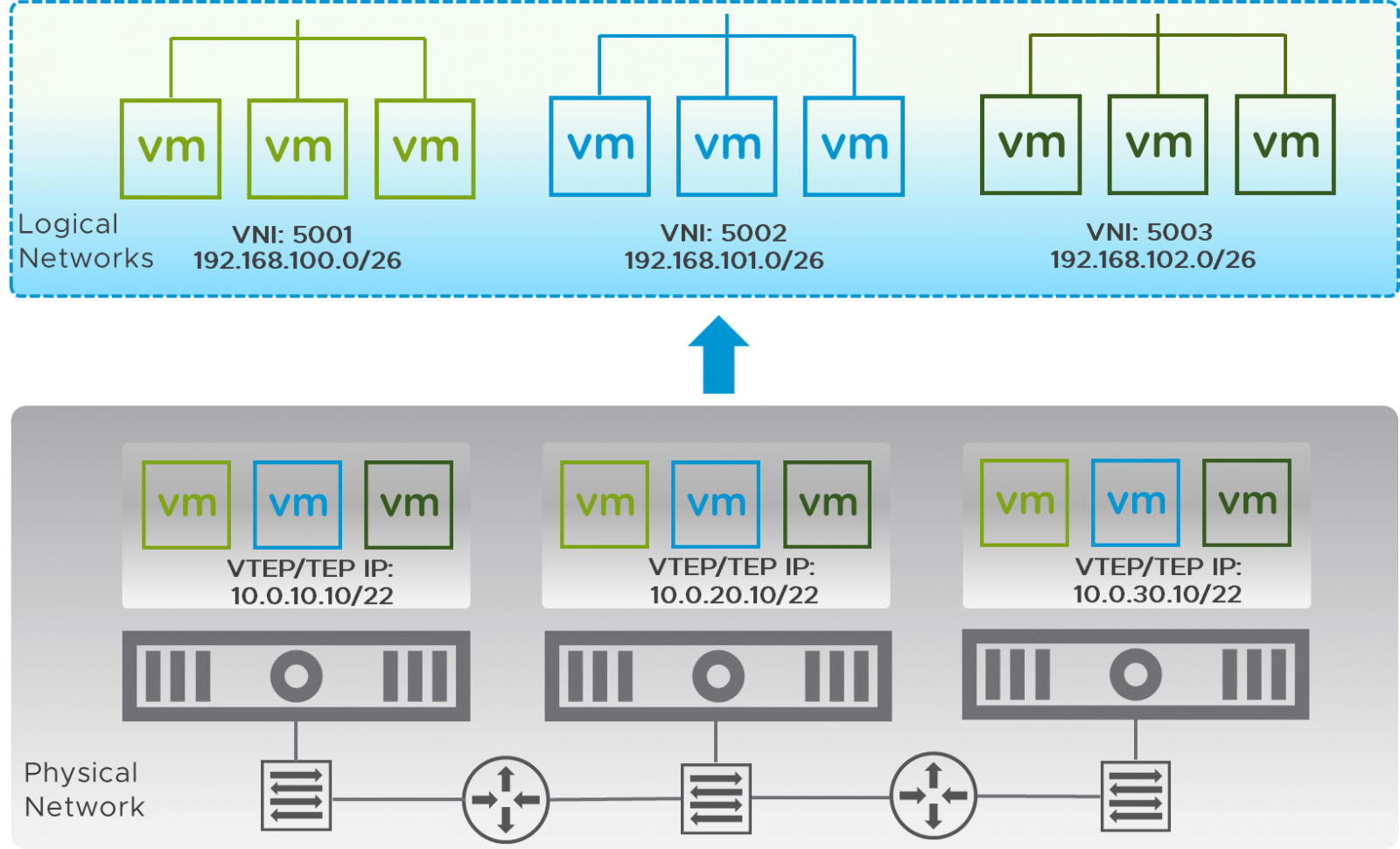

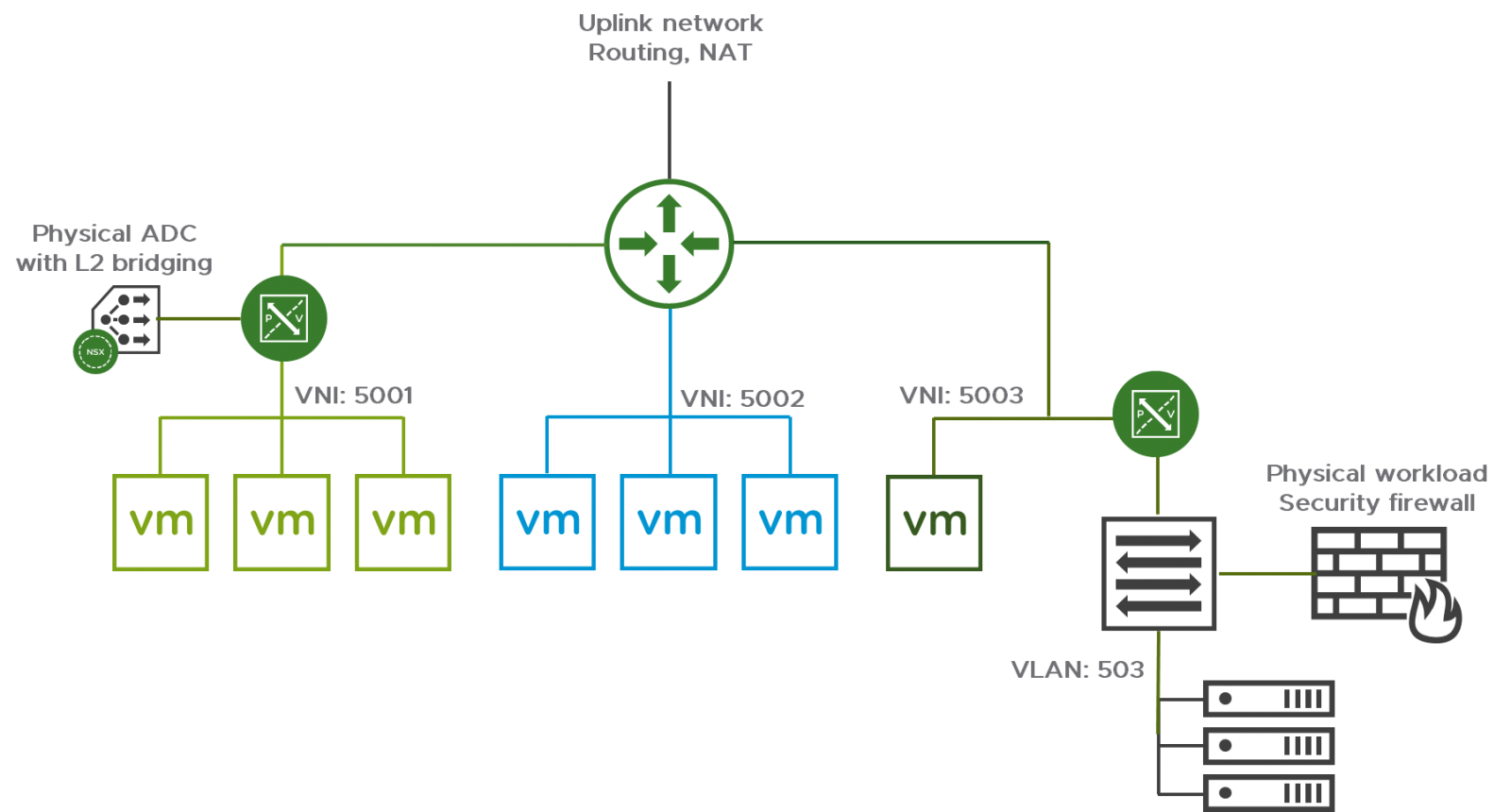

Figure 4 displays a series of logical layer 2 segments with layer 2 adjacent virtual workloads spread across multiple physical hosts situated on three separate routed subnets.

Figure 4: Logical Layer 2

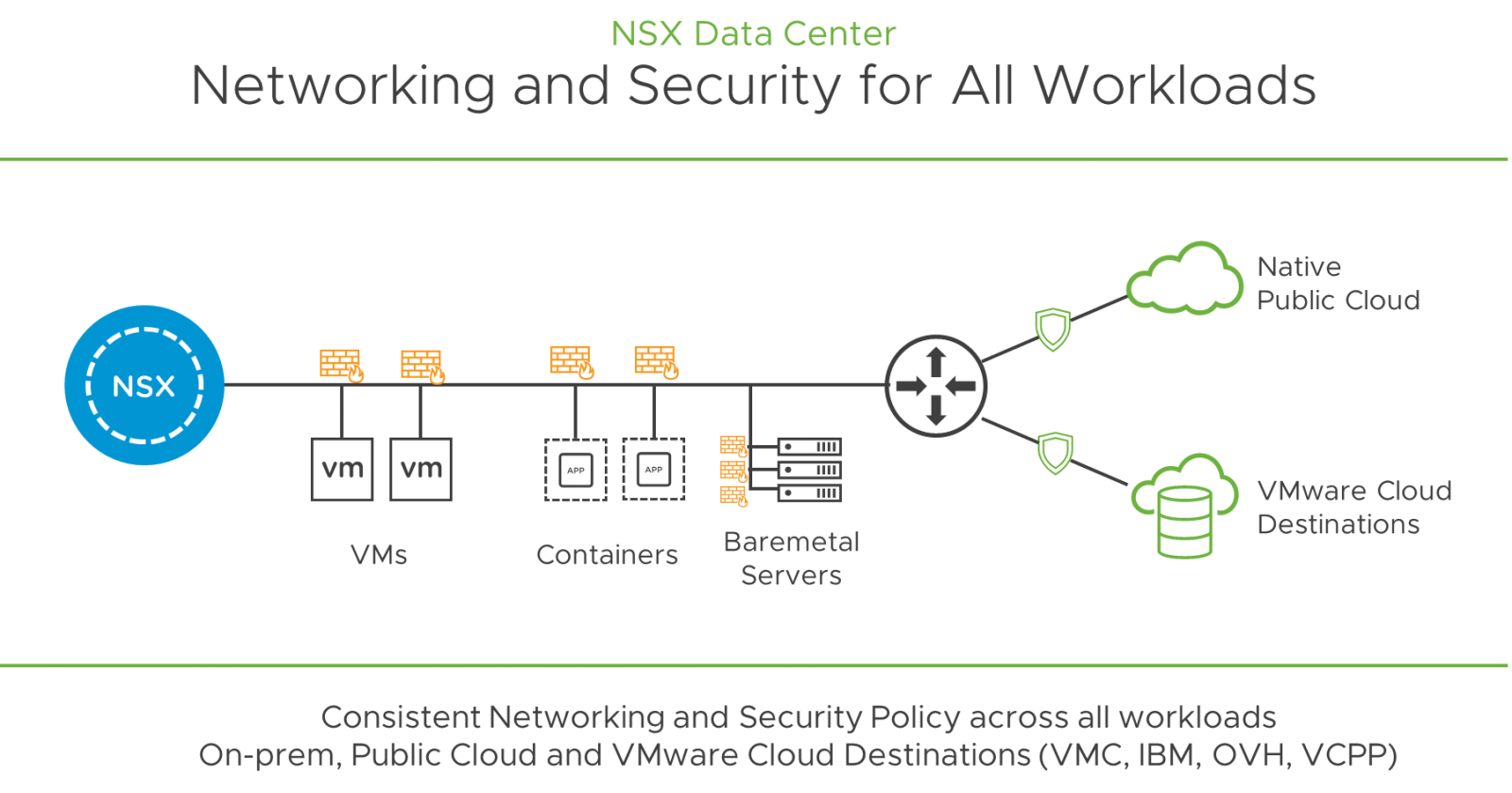

NSX Data Center builds multicast-free VXLAN-based (NSX Data Center for vSphere) and Geneve-based (NSX-T Data Center) overlay networks requiring no Spanning Tree Protocol (STP). This layer 2 adjacency between the VMs can be established independent not only of the physical network configuration, but also of the hypervisor (vSphere or KVM), the site, and the cloud(s), including VMware Cloud™ on AWS (VMC on AWS) and VMware NSX Cloud™. Extending this network across these deployment targets, as shown in Figure 5, demonstrates that NSX Data Center allows a diverse target environment to be managed under a single policy platform.

Figure 5: NSX Data Center, Securing Deployment Targets Across a Diverse Environment

NSX logical networks provide the basis for a programmable network. New logical networks are created on demand via NSX integration or use of NSX APIs with various cloud management platforms (CMP), open source platforms, and scripting tools. NSX logical networks can be brought up and torn down when necessary without underlay entanglements or operational overhead. By decoupling the logical networks required for workload communication from the physical network topology, the application becomes the center of attention for service management, shortening the path from business need to a functioning application.
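As a rough illustration of on-demand network creation through the API, the sketch below builds, but does not send, a declarative request for a new overlay segment. The URL path and the body fields (`display_name`, `subnets`, `gateway_address`, `transport_zone_path`) are written as we understand the NSX Policy API and should be treated as assumptions to verify against the API reference for the deployed NSX version. The manager hostname, segment name, subnet, and transport zone path are hypothetical, and authentication is omitted.

```python
import json
import urllib.request


def build_segment_request(manager: str, segment_id: str, gateway_cidr: str,
                          transport_zone_path: str) -> urllib.request.Request:
    """Build (but do not send) a request that declares an overlay segment."""
    body = {
        "display_name": segment_id,
        "subnets": [{"gateway_address": gateway_cidr}],
        "transport_zone_path": transport_zone_path,
    }
    return urllib.request.Request(
        # Assumed Policy API path; confirm against the API reference.
        url=f"https://{manager}/policy/api/v1/infra/segments/{segment_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


# Hypothetical values for illustration only.
req = build_segment_request(
    "nsx-mgr.example.com",
    "web-tier",
    "10.10.1.1/24",
    "/infra/sites/default/enforcement-points/default/transport-zones/overlay-tz",
)
```

Nothing in the request refers to a leaf switch, VLAN, or fabric policy, which is the practical meaning of creating logical networks without underlay entanglements.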

1.4 Native Distributed Switch Management

One of our key requirements for a successful and validated deployment of NSX is that the virtual switching should be managed by native services of the NSX Data Center deployment. Although the virtual distributed switch is only one component leveraged in network virtualization, it is essential in offering our customers operational simplicity and rapid network configuration for application deployment. Most importantly, NSX Data Center leverages the kernel services of the hypervisor to instantiate a high-performance distributed firewall capable of stateful and contextual application security. Any vendor would treat validating a service that inserts itself into the configuration of so important a component as a prime concern for its product.

Deployment of the distributed switch, whether the VDS used by NSX Data Center for vSphere or the N-VDS of NSX-T, can be operationalized with a variety of UI, scripting, and automation tools. These tools are available through VMware, partner-validated products and services, or open source. What no vendor should be expected to support are operational tools that configure an asset central to that vendor’s product through a service or product created outside its partner program. For NSX Data Center, instability and other disruptive events can be mitigated by avoiding tools that perform stateful reproduction of the VMware virtual distributed switch through unsupported event replication or synchronization processes.

Cisco ACI has a feature commonly referred to as a Virtual Machine Manager Domain or VMM. VMware strongly recommends against the use of this feature for creating and managing the vSphere Distributed Switch for NSX Data Center deployments. It is not tested for the designs in this document. VMM was created independently by Cisco outside the VMware partner support program, so any side effects, risk, and support would come solely from Cisco. This applies to all modes, all virtual distributed switch versions, and the use of Cisco ACI Virtual Edge (AVE). See the Cisco documentation about out-of-synchronization issues that can arise from the use of VMM domain management.

VMware strongly recommends against the use of VMM domain management when Cisco ACI underlay is the chosen fabric manager. Cisco ACI can be deployed with a more practical approach using a stable “network-centric” management interaction with compute deployment. Network-centric management treats all connectivity with a VLAN-backed connection to an endpoint group (EPG). This style of ACI deployment provides the ideal usage for automation in managing the underlay and normalization of its operations.
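A network-centric plan can be kept as plain data and checked before anything is configured on the fabric. The sketch below is an assumption-laden illustration: the network names, VLAN IDs, and EPG names are hypothetical, and the rule that each VLAN backs exactly one EPG is our reading of the network-centric style rather than a statement of Cisco ACI behavior.

```python
from collections import Counter

# Hypothetical plan: each infrastructure network is VLAN-backed and lands in one EPG.
PLAN = [
    {"network": "management", "vlan": 100, "epg": "epg-mgmt"},
    {"network": "vmotion", "vlan": 101, "epg": "epg-vmotion"},
    {"network": "storage", "vlan": 102, "epg": "epg-storage"},
    {"network": "overlay-tep", "vlan": 103, "epg": "epg-tep"},
]


def validate_plan(plan: list) -> list:
    """Return a list of problems; an empty list means the plan is one-to-one."""
    problems = []
    for key in ("vlan", "epg"):
        counts = Counter(row[key] for row in plan)
        problems += [f"duplicate {key}: {value}"
                     for value, n in counts.items() if n > 1]
    problems += [f"invalid vlan: {row['vlan']}"
                 for row in plan if not 1 <= row["vlan"] <= 4094]
    return problems
```

Because the mapping is static and small, it suits whatever underlay automation the fabric team already uses, and it leaves the vSphere Distributed Switch to be managed natively.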

VMware Cisco ACI VMM/AVE Mode Support Statement

VMware supports vSphere and all features available through the public API.

Any API-level integration implemented outside of a certified partner program is a customer’s responsibility and is not supported by VMware.

Cisco ACI VMM/AVE leverages the vSphere APIs but was developed outside of any formal partner program and therefore is not supported by VMware.

For Support Requests which directly relate to the ACI VMM/AVE component and how it interacts with vSphere, VMware will request that the Cisco VMM/AVE component be removed for troubleshooting purposes as per the VMware Third-Party Hardware and Software Support Policy.

- If the issue is reproducible without the Cisco VMM/AVE component, VMware will support and investigate as normal.

- If the issue is not reproducible when the ACI VMM/AVE component is removed, VMware will not investigate further.

Source: Cisco ACI AVE/VMM Mode Support in a VMware environment (57780).

Disaggregation of the virtual switching and the overlay from the physical fabric has been the hallmark of NSX Data Center designs. This disaggregation lends stability to the underlay fabric services while providing the preferable cloud-centric approach in abstracting the virtual network from the physical infrastructure.

1.5 Distributed Routing

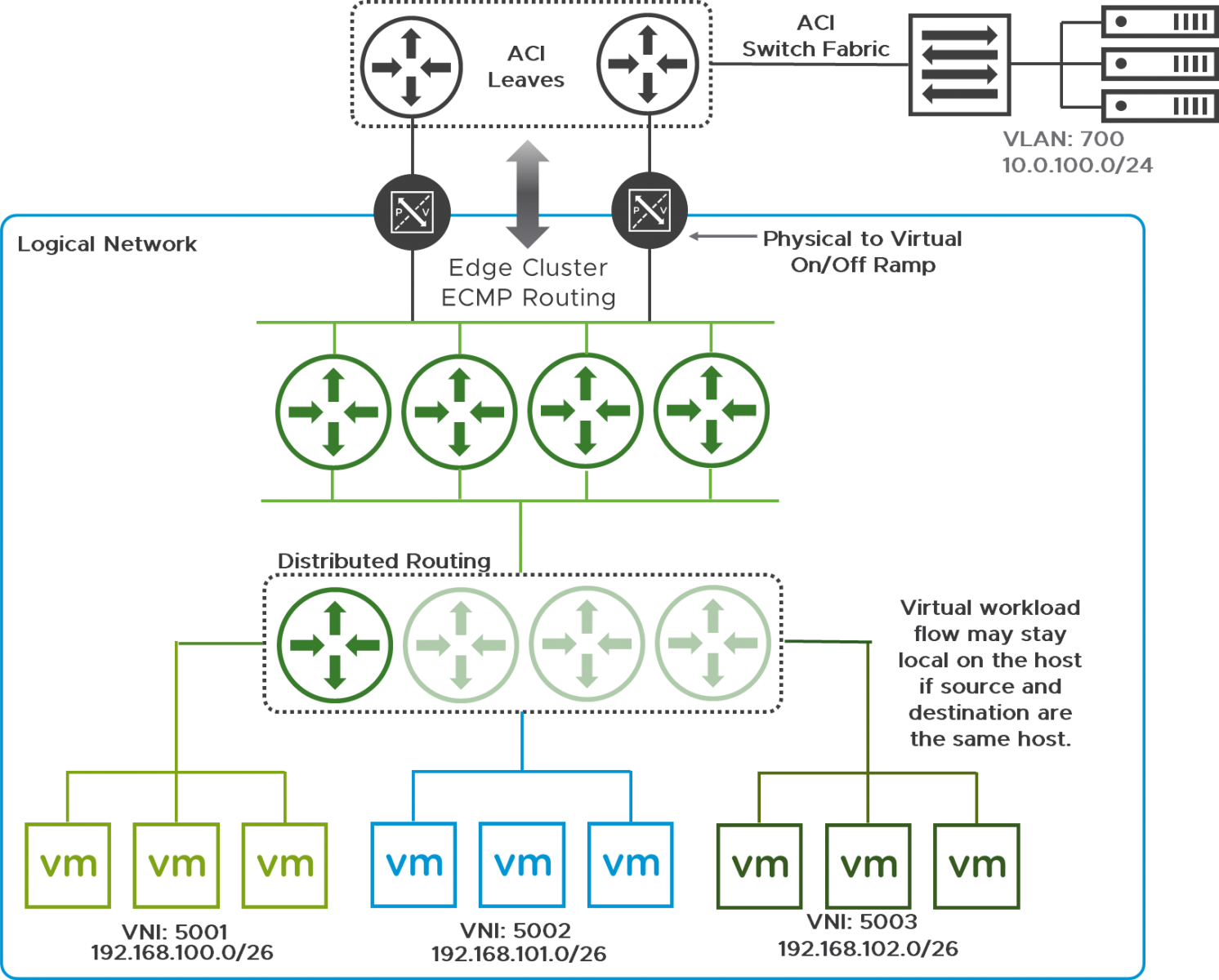

NSX Data Center employs a dual-tier routing made of centralized and distributed routing components. Centralized routing is used for on-ramp/off-ramp functionality between the logical network space and the external L3 physical infrastructure. Distributed routing provides high-performance routing for east-to-west traffic flows.

NSX enables distributed routing and forwarding between logical segments within the hypervisor kernel, as shown in Figure 6. In this topology, three different logical networks are isolated in three different subnets. Using the distributed routing functionality provided by NSX, these subnets are interconnected without the overhead of routing integration changes with the underlay.

Figure 6: Distributed Routing with NSX Data Center

The NSX-T high-performance two-tiered routing architecture is composed of T0 and T1 router instances, with T0 providing top-level connectivity and T1 used for tenant-level connectivity when necessary. Also, NSX-T T0 to T1 tiered routing instances are autoplumbed, including all logical networks connected to a downstream T1 router whenever a T1 router is employed. Because of this autoplumbing of routes, neither dynamic routing nor manually configured static routes are needed for distributed routing between the tiers in NSX-T.

NSX-T does not require the use of T1 routing. T0 routing can provide distributed routing and centralized routing for on-ramp/off-ramp functionality between the logical network space and the external L3 physical infrastructure. T0 routing is required for this purpose and discussed later in the NSX Edge Routing to Physical Infrastructure section. T1 routing provides an additional layer of tenant-level routing in addition to service-level placement. T1 routing is optional.
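The effect of autoplumbing between the tiers can be sketched with a toy model. This is not NSX code: the classes `Tier0` and `Tier1`, the router names, and the subnets are hypothetical, and the model only illustrates the outcome described above, namely that segments attached to a T1 become reachable from the T0 without anyone configuring routes between the tiers.

```python
from dataclasses import dataclass, field


@dataclass
class Tier1:
    name: str
    segments: list = field(default_factory=list)  # connected subnets in CIDR form


@dataclass
class Tier0:
    name: str
    segments: list = field(default_factory=list)  # segments attached directly to T0
    tier1s: list = field(default_factory=list)    # downstream tenant routers

    def routing_table(self) -> dict:
        """Map each reachable subnet to the hop that serves it."""
        routes = {subnet: "connected" for subnet in self.segments}
        for t1 in self.tier1s:
            for subnet in t1.segments:
                # Derived automatically from the topology, never typed in by hand.
                routes[subnet] = f"via {t1.name}"
        return routes


tenant_a = Tier1("t1-tenant-a", ["10.1.1.0/24", "10.1.2.0/24"])
tenant_b = Tier1("t1-tenant-b", ["10.2.1.0/24"])
t0 = Tier0("t0-provider", segments=["10.0.0.0/24"], tier1s=[tenant_a, tenant_b])

tenant_b.segments.append("10.2.2.0/24")  # a new segment appears with no route change
```

The same model also covers the T0-only case: with an empty `tier1s` list, the T0 simply routes between its directly attached segments.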

NSX Data Center for vSphere also employs a dual-tier routing structure of centralized and distributed routing. Distributed routing is fulfilled by two components:

- Control plane virtual appliance for dynamic route control plane servicing and distribution of the learned dynamic routes to the NSX Controllers

- Data plane element embedded as a high-performance distributed kernel component within all prepared hypervisor hosts

Centralized routing is discussed in the NSX Edge Routing to Physical Infrastructure section.

The key benefit of distributed routing is optimal scale-out routing for east-west traffic between VMs. Each hypervisor has a kernel module capable of performing a routing lookup and forwarding decision at near line rate. As shown in Figure 6, traffic within a single host can be routed optimally within the host itself when the VMs are located on separate logical switch segments. The localized forwarding reduces traffic to the ToR (top of rack) and can reduce latency because packets are switched in memory.

Traffic required to traverse the physical fabric will use the ACI ToR to make a forwarding decision based upon the destination VTEP or TEP IP where the remote virtual workload is hosted. But in a classic architecture, all routed traffic would be forwarded to the switch with the SVI configuration to make a forwarding decision for all virtual workloads. NSX distributed routing reduces this necessity, provides a simpler traffic view for the infrastructure to manage and operate, and thereby reduces I/O usage of the physical fabric.
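The two forwarding cases described above can be summarized in a few lines. The sketch below is a simplified illustration, not the hypervisor's forwarding logic: the function name `forwarding_path`, the VM and host names, and the TEP addresses are hypothetical. It shows only which traffic ever reaches the fabric and what the fabric sees when it does.

```python
def forwarding_path(src_vm: str, dst_vm: str, placement: dict, tep_of_host: dict) -> str:
    """Describe where routed east-west traffic between two VMs is forwarded."""
    src_host, dst_host = placement[src_vm], placement[dst_vm]
    if src_host == dst_host:
        # Routed between segments inside the host; the ToR never sees the packet.
        return "routed in kernel on " + src_host
    # Routed locally, then encapsulated; the fabric forwards on the outer TEP IPs only.
    return f"encapsulated {tep_of_host[src_host]} -> {tep_of_host[dst_host]}"


# Hypothetical placement of three workloads across two hosts.
placement = {"web-01": "esxi-a", "app-01": "esxi-a", "db-01": "esxi-b"}
teps = {"esxi-a": "172.16.10.11", "esxi-b": "172.16.20.12"}

same_host = forwarding_path("web-01", "app-01", placement, teps)
cross_host = forwarding_path("web-01", "db-01", placement, teps)
```

In the cross-host case the ACI leaf forwards a unicast IP packet between two TEP addresses and needs no knowledge of the workload subnets behind them.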

In addition, as previously noted, the distributed router scale-out capability supports multi-tenancy in which multiple distributed logical router instances can be invoked to provide routing-control plane separation within the shared overlay infrastructure. Figure 7 shows a common topology use case where tenant-level routing is separated using NSX Data Center two-tiered routing capabilities.

Figure 7: NSX Two-Tiered Routing Separating Route Control Plane of Individual Tenants

NSX not only abstracts away the issue of physical layer 2 adjacency with its overlay networking, but also abstracts layer 3 connectivity with high-performance distributed routing.

1.6 NSX Edge Routing to Physical Infrastructure

As we have seen, NSX distributed routing will provide routing between virtual workloads, and when necessary between workloads located within separate tenants. To route from the logical network to the physical network, NSX can learn and exchange routes with the physical infrastructure. Edge routing provides routing workflows from the overlay to physical resources such as a database server, other non-virtualized workloads, and access to networks beyond the data center.

NSX can provide scale-out routing leveraging Equal Cost Multi-Path (ECMP) between the NSX distributed router and the VMware NSX Edge™ cluster, in addition to ECMP with the edge cluster and the router instances of the physical infrastructure. The NSX edges peer with the physical routers using static and dynamic routing, providing either administratively managed connectivity with static routing or scaling north-to-south bandwidth with ECMP-based routing. Figure 8 displays routing from the NSX logical network to the physical network using an edge topology configured for ECMP.

Figure 8: Edge Cluster with ECMP Routing to the Physical Fabric
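To illustrate how ECMP spreads north-to-south flows across an edge cluster, the sketch below hashes a flow's 5-tuple and uses the result to select one of several equal-cost next hops. This is a generic illustration of flow-based hashing, not the hashing algorithm used by NSX or by ACI, which is implementation specific. The edge names and addresses are hypothetical.

```python
import hashlib


def pick_next_hop(flow, next_hops):
    """Select a next hop for a flow by hashing its 5-tuple (illustrative only)."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical edge nodes acting as equal-cost next hops.
edges = ["edge-1", "edge-2", "edge-3", "edge-4"]

# (source IP, destination IP, protocol, source port, destination port)
flow = ("10.1.1.10", "192.0.2.20", 6, 49152, 443)

# Every packet of the same flow maps to the same edge, preserving packet order.
print(pick_next_hop(flow, edges))
```

Because the selection depends only on the flow fields, adding edge nodes to the list increases the number of paths available to new flows without any per-flow state.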

The ROI attained by the use of NSX Edge routing is accomplished through multiple operational aspects. NSX Edge routing adds stability to the physical network infrastructure through

- Adding and scaling out workloads will require no modification to the physical infrastructure.

- Deployment of services such as NAT, load balancing, DNS, DHCP, VPN, and firewalling also requires no modification to the routing or switching functionality of the physical infrastructure.

- Service deployment is completely disaggregated from the physical infrastructure, providing a true private cloud service when deploying workloads.

- Operational servicing of the physical infrastructure is substantially reduced, allowing time expenditure of IT teams to be focused on servicing the application.

- Workload independence from a physical location is fully realized with the edge cluster service, thereby attaining true application agility.

NSX Edge routing adds centralized servicing of north-to-south traffic flows, service deployment for services performing a centralized function, and a disaggregation of the private cloud deployment of the virtualized network.

1.7 Security with NSX Distributed Firewall

Instantiating a distributed firewall service upon the VMware distributed switching virtual switch is more than just labeling a service or product as a distributed firewall. NSX institutes a stateful firewall offering contextual feature additions at near line-rate performance. Operational simplicity is assured through a modest set of requirements for a successful deployment of NSX for adaptive micro-segmentation. This is one of the main reasons NSX is used to solve a variety of security use cases. Further, NSX can provide a useful service to aid in the migration of the customer’s data center to its new fabric underlay. Running an NSX platform establishes a software-defined data center and requires only solid IP connectivity from the fabric underlay.

NSX, by default, enables the distributed firewall in the kernel, which is realized at the vNIC of each VM. Ingress or egress traffic from the VM will always traverse the distributed firewall. Key benefits include a stateful layer 2 through 4 firewall, and the reduction of security exposure at the root of east-to-west traffic that is isolated from the virtual workload, but with excellent context regarding the workload’s use. Context-aware firewall services through App ID ensure that the layer 7 application carried by a flow matches the service identified at layer 4. The NSX distributed firewall’s inherent software services layer offers an adaptive micro-segmentation capability.

The distributed firewall can supplement a centralized firewall at the periphery of the data center, and remove physical (or concrete) devices used as an east-to-west firewall that require complex service graphs for operational use. Use of these periphery firewalls polarizes traffic to specific regions of the switch fabric in order to stitch IP traffic through them.

The NSX distributed firewall adds the following benefits:

- Reduces the number of hops and the bandwidth consumed, either to and from the ToR or when pushing IP traffic to designated remote locations of the switch fabric, that would otherwise result from forcing application flows to traverse a centralized firewall

- Flexible rule set applied dynamically, leveraging multiple object types available to NSX security management, such as a logical switch, a cluster, dvPortgroups, security tags, virtual machine attributes, and more

- Allows the security policy and connection states to move during automated or manual moves with VMware vSphere vMotion®

- A spoofguard policy adjacent to the virtual workload not possible with a physical firewall

- Stateful filtering of ingress and egress traffic at line rate

- Fully API capable for developing an automated workflow leveraging programmatic security policy enforcement at the time of deployment of the VM through a variety of cloud management platforms, based on exposure criteria such as tiers of security levels per client or application zone
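The idea of a rule set that is applied dynamically through objects such as security tags can be sketched in a few lines. The following is a simplified model under stated assumptions, not the NSX API: the `Rule` class, the tag names, and the VM names are hypothetical, matching is first-match with a default drop, and connection state tracking is omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    src_tag: str
    dst_tag: str
    port: int
    action: str


def evaluate(rules, src_tags, dst_tags, port, default="DROP"):
    """Return the action of the first rule whose tags and port match."""
    for rule in rules:
        if rule.src_tag in src_tags and rule.dst_tag in dst_tags and rule.port == port:
            return rule.action
    return default


# Hypothetical policy written against tags, not IP addresses or switch ports.
rules = [
    Rule("tier:web", "tier:app", 8443, "ALLOW"),
    Rule("tier:app", "tier:db", 3306, "ALLOW"),
]

# Hypothetical inventory: a newly deployed VM starts with no tags.
inventory = {
    "web-01": {"tier:web"},
    "app-01": {"tier:app"},
    "app-02": set(),
}

before = evaluate(rules, inventory["web-01"], inventory["app-02"], 8443)

# Tagging the new VM places it under the existing policy; no rule is edited.
inventory["app-02"].add("tier:app")
after = evaluate(rules, inventory["web-01"], inventory["app-02"], 8443)

print(before, after)
```

The point of the sketch is that policy follows the workload's attributes: membership changes when the tag changes, while the rule set itself stays untouched.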

As shown in Figure 9, the architect now has flexibility in building a sophisticated security policy because policy is not tied to physical topology. The security policy can be customized for inter- and intra-layer 2 segment(s), complete or partial access, as well as to manage N-S rule sets that are employed directly at the virtual-workload level. The edge firewall can be used as an interdomain security boundary.

Figure 9: Micro-Segmentation and Protection of Traffic

The NSX micro-segmentation distributed firewall policy capability as shown in Figure 9 has many advantages, such as

- Creating PCI zones within shared segments

- Contextual security policies such as Identity Firewall for desktops in a VDI environment

- Reducing operational overhead required for stitching traffic through centralized firewalls with legacy policy-based routing rules

- Virtual demilitarized zone-based firewalls for every workload

- Security policy centrally managed for multiple sites

- The same security policy used across private cloud, hybrid cloud, and public cloud workloads

NSX adaptive micro-segmentation eliminates or reduces

- Scaling limitations imposed by switch TCAMs for storage of access-control list entries

- Use of legacy-style PVLANs to provide intra-tier segmentation compounding the complexity with proxy-arp added contracts due to sub-groupings

- Use of an array of complex security construct combinations of security groups, micro-segmentation groupings, PVLANs, and proxy-arp all to achieve stateless, reflexive access-control filtering

- Combining use of strongly not recommended virtual distributed switch management services with user space L4 filtering appliance services and PVLANs, that are still limited to the capacity of legacy TCAM space consumption

There are many workarounds combined with line cards and switches with larger and larger TCAM entries that have attempted to scale TCAM. These include dynamic provisioning, use or non-use of a reflexive setting, and combining security rules to apply to a larger endpoint grouping. The designs in this document propose a simpler and more inherent approach with the use of software first for the larger set of dynamic virtualization workloads, virtual-to-physical and physical-to-virtual micro-segmentation. Physical-to-physical segmentation can be covered by traditional firewalling where deep inspection is needed, and ACI segmentation where stateless inspection is acceptable. VMware NSX is adding adaptive capabilities to begin securing physical workloads.

Note: For additional information, see VMware Network Virtualization Blog: Extending the Power of NSX to Bare-Metal Workloads.

Figure 10: NSX Firewalling Model

The NSX adaptive micro-segmentation security model provides its services in a software-driven, API-capable, scalable model. NSX micro-segmentation uses stateful segmentation of each individual workload, regardless of the number of application tiers, flat or routed topology, or dependent services. Micro-segmentation involves the ability to inherently position firewall services beyond layer 4 filtering. The NSX distributed firewall and adaptive micro-segmentation provide contextual awareness to security admins, offering even deeper granularity of security policies. This context-awareness includes

- Native layer 7 firewalling services for east-to-west communications

- User-identity-based security for virtual desktop environments

- Per-user/session-based security for Remote Desktop Session hosts

NSX adaptive micro-segmentation has raised the bar for east-to-west workload security above all peers, which mainly provide stateless, reflexive access. Figure 11 displays the adaptive micro-segmentation model of integrating all services: overlay network isolation, distributed firewall segmentation, and the use of service insertion.

Figure 11: NSX Micro-Segmentation Platform

A substantial additional value-add is the relative ease in integrating additional L7 deep-packet network inspection and guest introspection. NSX uses a simple model with a transparent path for the extended security inspection service, which is rendered local to the source hypervisor of the targeted virtual workload. NSX redirection to L7 guest and network introspection services completes the needs of micro-segmentation without stitching IP pathways or complex network service graphs.

1.8 Flexible Application Scaling with Virtualized Load Balancer

Elastic application workload scaling is one of the critical requirements in today’s data center. Application scaling using a traditional physical load balancer often lacks sufficient agility for modern application needs, given the dynamic nature of self-service IT and DevOps-style workloads. The load-balancing functionality natively supported in the NSX Edge appliance covers many of the practical requirements for providing application availability and performance enhancements. NSX load balancing can be deployed programmatically based on application requirements with appropriate scaling and features. The scale and application support level determine whether the load balancer is to be configured with layer 4 or layer 7 services, using application rules and a wide spectrum of additional servicing.

Each instance of the load balancer is an edge appliance that is deployed either using the NSX Manager UI, or dynamically defined via an API as needed and deployed in high-availability mode. Topology-wise, the load balancer is deployed either in-line or in single-arm mode. The mode is selected based upon specific application requirements.

The single-arm design offers extensive flexibility as it can be deployed near the application segment and automated with the application deployment. Single-arm load balancing does not require a modification of the IP addressing used for the application communication.

Figure 12 shows the power of a software-based load-balancer using single-arm mode. Multiple single-arm mode instances of the load balancer serve multiple applications and a single instance can serve multiple segments.

Figure 12: Logical Load Balancing per Application

Alternatively, the load balancer can be deployed using in-line mode, which is able to serve the entire logical domain. Scaling the in-line load balancer is done by enabling a tiered edge instance per application. Each application can be a dedicated domain for which the tiered edge instance acts as a gateway, a load balancer, and if desired, a stateful firewall for the application. A provider set of edges is configured as an ECMP gateway for scalable north-south bandwidth.

As one can observe from Figure 13, the first application block on the left is allowing a single-arm load balancer with distributed logical routing. The center and the right blocks of the application allow an in-line load balancer that is either routed or routed with NAT capability respectively. The top-tier edge cluster is enabled with ECMP mode to allow north-to-south flow capacity to scale on demand from 10 Gbps to 80 Gbps and much higher through hardware offloads such as DPDK.

Figure 13: Scaling Application and Services with NSX

The provider edges offer highly interoperable infrastructure connectivity while abstracting application deployment in the overlay from the physical infrastructure. This agility is an essential element of an SDN. The NSX edges and edge nodes provide routing, firewalling, and application services; as well as load balancer, NAT, DHCP, IP pools, and DNS proxy.

A large value-add for the NSX Edge cluster design models is the ability to deploy application-dependent services disaggregated from the physical infrastructure. A logical tenant model is attained with NSX edges that provide services near the applications, avoiding complex service graphing or network traffic stitching.

This type of agility cannot be provided through a hardware-defined switched underlay without considerably more operational management and complexity. This cannot be overstated, as the cloud services model has shown it to be true. Security and service offerings provided by the NSX platform are easily scaled in and out as well as scaled vertically or horizontally, depending on the application needs. An NSX software-defined network virtualization platform affords a cloud service experience within the enterprise data center.

1.9 NSX Layer 2 Bridging from Virtual to Physical Infrastructure

Some application and service integration may require connecting VMs to physical devices on the same subnet (layer 2-centric workload connectivity). Examples include migrations to virtual workloads, migrating app-tiers on the same L2 network using hard-coded IP addresses, and some virtual workloads with L2 adjacency to application-integrated ADC appliances. Bridging the overlay to a VLAN can be accomplished by leveraging the native bridging functionality in NSX shown in Figure 14.

Figure 14: Layer 2 Bridging from Virtual to Physical

NSX layer 2 bridging design considerations are covered in the NSX design guide. Additionally, depending upon the use case, NSX supports either integrated hardware VTEPs or the use of multicast-based hardware VTEP integration. Either will require additional design considerations.

Note: Nexus 9000 switches running ACI do not support use as integrated hardware VTEPs.

1.10 Operations

Building upon the application topology discussion of the prior section, changes to the underlay are minimized. Further, changes that do happen during application deployment are scoped to the needs of the NSX overlay and rarely require modifications to the hardware switched underlay. The switch fabric is more stable, with little to no need for hardware modifications or updates to enhance physically dependent service functions when fixed feature sets or physical maximums would otherwise be exceeded.

Our goal is to reduce the amount of operational management of the physical fabric and focus the cloud team’s attention on application deployment and service management. The following lists the one-time operations for installation and deployment of the switch fabric. The NSX deployment would follow a high-level series of steps, performed by the Cisco ACI admin:

- Initial configuration for ACI fabric bring up

- Configuration of ACI fabric connectivity for the NSX data center operational clusters

- Management, Compute, and Edge

- For details of the setup of this specific set of system objects, see Cluster Connectivity for the NSX Infrastructure Clusters.

- Configuration of a single ACI tenant requiring the following

- A single Application (Network) Profile containing the necessary EPGs

- External domain routing for the NSX network virtualized overlay

- For details on this specific set of tenant-related objects, see Demystifying the Overlay Transport and IP Connectivity.

Configuring these few items within the ACI fabric normalizes its services with a network-centric deployment architecture. This simplifies the switch fabric management while leveraging the underlay automation. At this point, the cloud admin will perform the deployment and initial configuration for the NSX functional clusters and edge cluster routing with the ACI border leaves.

A few key items to note with this initial configuration:

- Virtual services can be set up without any change to the underlying physical infrastructure.

- The infrastructure environment requires little operational change save for scaling out additional compute and switch fabric assets as workload needs grow.

- This adds stability to the IP infrastructure for application deployment operations upon the NSX platform that follow.

The next course of action is operationalizing NSX and application deployment. This involves a variety of aspects such as optimizing the organizational structure and the corresponding IT organization’s operational processes to fully prepare the teams for NSX usage. Further consideration is needed for the users and applications brought onboard, the developer consumption models, and the tools that will be used by the users and developers.

We will concern ourselves with bringing the applications onboard and the use of several key tools in this section.

A discussion of the people, the processes, and tooling when operationalizing NSX can be found in the VMware blog, The Key to Operationalizing NSX.

For anyone attempting a task, whether it is upgrading a portion of your home or fixing a broken appliance, the tools to put into your toolbelt should be appropriate to the task at hand. For network operations and monitoring, traditional tools that provided flow analysis, packet capture, and network management were not created to operate in the modern virtual networks, much less multi-cloud environments. Due to the nature of network virtualization, end-to-end troubleshooting, visibility, scalability, and a security perspective should be built into the operational tool from its conception, not strapped or glued onto a traditional tool.

Running an NSX overlay over a Cisco ACI fabric presents nothing new. Cloud-based environments are built upon underlays that use their own encapsulation functionality, whether they are VLAN- or VXLAN-based. The underlay’s encapsulation service running VXLAN is often termed an “overlay,” but it is providing a service for endpoint communication from the physical system viewpoint. NSX overlays provide an application platform to service endpoint communication within the virtualized network.

With NSX, several tools are inherent in your toolbelt from the outset. NSX provides a handsome set of tools for packet trace, some flow monitoring, and a helpful set of tools specifically for deploying and troubleshooting security for the communication pathways.

NSX contains both a packet-trace tool, Traceflow, and a packet-capture tool, named Packet Capture. Traceflow provides a hop-by-hop account of the virtual network path, inclusive of the NSX distributed firewalls and the distributed and tiered edge routing hops encountered from the source virtual workload to its virtual workload destination.

Figure 15: NSX-T for Data Center – Traceflow

NSX Packet Capture enables the traditional concept of packet capture with the benefit of network virtualization enhancements, such as capturing a packet incoming or outgoing from a virtual adapter, before or after a specified filter is applied on the virtual adapter, or specifying a VLAN or overlay network as part of the source’s defined path. This has been available since the earliest versions of NSX.

The NSX Flow Monitoring tool provides a means to grab live flows of virtual workloads, also with a variety of options such as filtering for live flows that are allowed or denied, or viewing the flows based upon their service details. The flow monitoring tool has been greatly enhanced.

Two tools stand out when specifically setting up security policy:

- Application Rule Manager, which is a built-in enhancement to the Flow Monitoring capabilities of NSX Manager in NSX for vSphere for Data Center

- VMware vRealize® Network Insight™, which is a separate product offering and part of the VMware vRealize Cloud Management Platform™ Suite

NSX Application Rule Manager introduces a rapid micro-segmentation capability of any multi-tier application through a simple three-step process:

- Load a set of VMs that you want to micro-segment

- Profile by capturing the application flow

- Analyze the captured flow to auto-generate firewall rules and security groups
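The three steps above can be pictured with a short sketch. This is an illustrative approximation of the idea of deriving rules from observed flows, not the algorithm used by Application Rule Manager. The VM names, group names, and flow records are hypothetical, and the grouping is supplied by hand where the real tool would suggest it.

```python
def recommend_rules(flows, vm_to_group):
    """Collapse captured VM-to-VM flows into unique group-to-group allow rules."""
    rules = set()
    for src_vm, dst_vm, protocol, port in flows:
        rules.add((vm_to_group[src_vm], vm_to_group[dst_vm], protocol, port))
    return sorted(rules)


# Step 1: load the VMs to micro-segment (hypothetical names and groupings).
vm_to_group = {
    "web-01": "SG-Web",
    "web-02": "SG-Web",
    "app-01": "SG-App",
    "db-01": "SG-DB",
}

# Step 2: profile the application by capturing its flows.
captured_flows = [
    ("web-01", "app-01", "TCP", 8443),
    ("web-02", "app-01", "TCP", 8443),
    ("app-01", "db-01", "TCP", 3306),
    ("web-01", "app-01", "TCP", 8443),  # repeated flow, collapsed below
]

# Step 3: analyze the capture to produce candidate rules for review.
for rule in recommend_rules(captured_flows, vm_to_group):
    print(rule)
```

Four captured flows collapse into two candidate rules, which an administrator would still review and edit before publishing.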

NSX Application Rule Manager provides an auto-recommended DFW rule set with automatic intelligent object group suggestions for micro-segmenting applications in a discrete amount of time. Application Rule Manager also provides additional context from the flow data that is gathered and aids further customization of the rule sets crafted to secure the communication flow of the analyzed workloads. Figure 16 displays the published firewall rule list after flow capture and flow analysis, followed by ARM recommendations and a final manual edit of the list prior to publishing, if necessary.

Figure 16: Published Recommended Firewall Rule List After Flow Analysis

Application Rule Manager has been further enhanced to analyze multiple workflows at the same time. vRealize Network Insight extends beyond flow analysis and deeper into Day-2 operations.

vRealize Network Insight adds vision into all facets of your physical and virtual network fabric. vRealize Network Insight value begins at gathering a basic idea of flow patterns in your private data center, such as east-to-west and north-to-south traffic flow ratios, and extends to more complex flow analytics of any workload deployed across a variety of corporate network assets. vRealize Network Insight can provide capacity planning for the virtualized fabric along with awareness of security settings within the switch, router, and physical firewall systems of the underlay. Figure 17 displays the centralized role that vRealize Network Insight can play when it comes to understanding communication flow in the business fabric.

Figure 17: vRealize Network Insight Provides Vision Across the Business Fabric

vRealize Network Insight provides a complementary set of features for operationalizing adaptive micro-segmentation when combined with the Application Rule Manager tool of NSX Data Center. Figure 18 displays the five-step process to micro-segmentation:

Figure 18: The Five-Step Process to Micro-Segmentation

In addition to application and security planning, vRealize Network Insight provides an optimization and troubleshooting capability that uses multiple informational and graphical output styles of your virtual and physical networks. A third major use case of this tool is aiding in management of misconfiguration errors, NSX compliance, and scaling the NSX platform.

Supplying your toolbelt with the right set of tools, in addition to the proper processes and people, is the key to a successful SDDC deployment and its operations.

2 Overview of NSX Design Using Cisco ACI as the physical fabric

This document assumes readers have a functional knowledge of NSX and Cisco ACI. Readers are strongly advised to read the following design guides for additional context; they provide a detailed characterization of NSX operations, components, design, and best practices for deploying NSX.

VMware NSX for vSphere Network Virtualization Design Guide

NSX-T Reference Design Guide

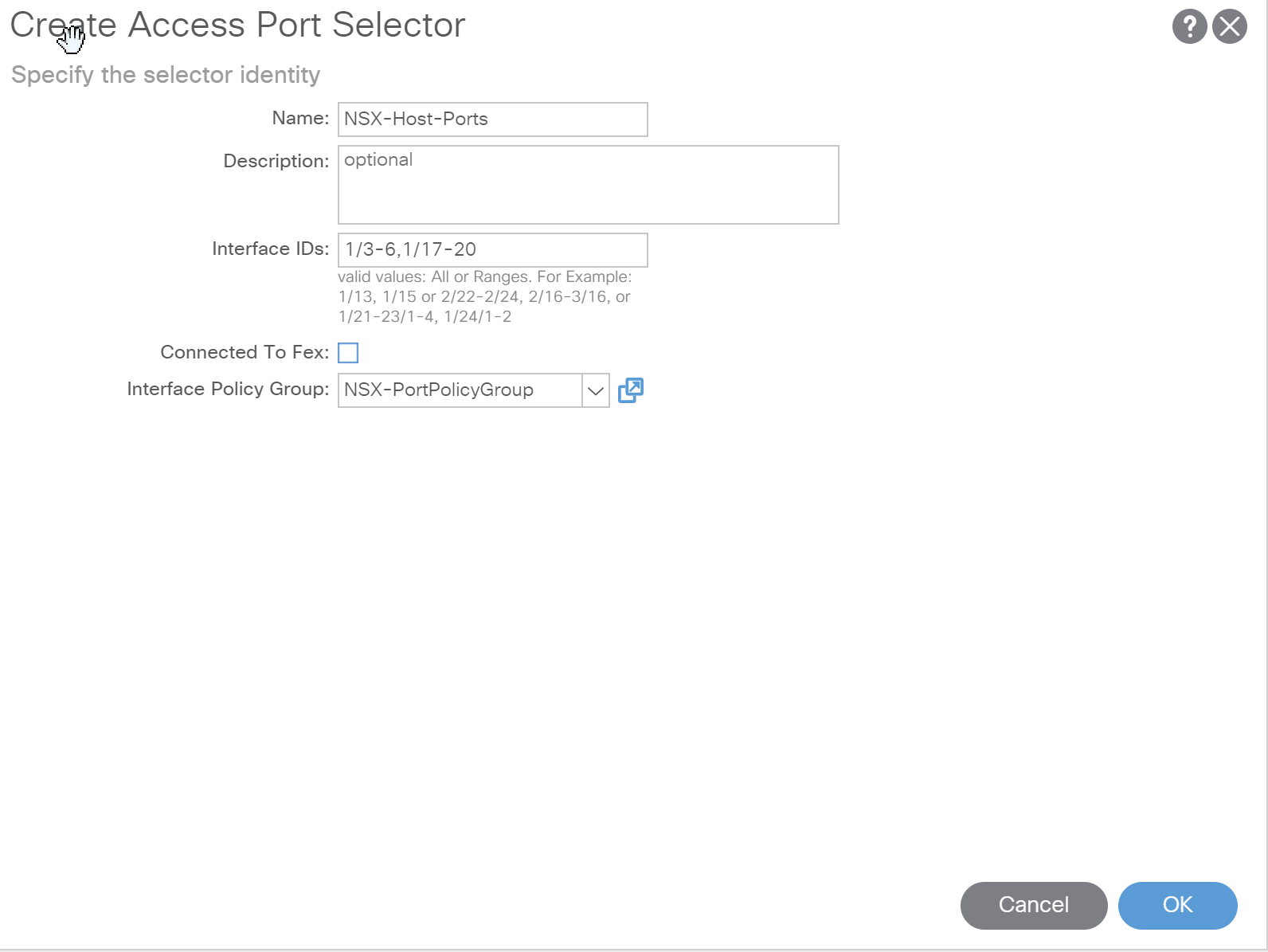

Specifically, the goal of this document is to provide guidance for running NSX over Cisco ACI as the underlay fabric. This document covers setup of the ACI fabric to meet the connectivity requirements for NSX including

- ESXi host to ACI fabric connectivity

- Standard VMkernel networking setup for an NSX design

- VLAN allocation and EPG configuration

- Overlay tunnel endpoint (TEP) configuration

- Layer 3 peering and routing configurations for north-south traffic

This design guide improves upon the earlier documentation by including explicit configuration examples of the ACI fabric. The goal in this regard is to satisfy all the NSX Data Center requirements using a network-centric setup of ACI.

2.1 Establishing NSX Data Center Connectivity Requirements

Prior to configuring the necessary objects within the ACI underlay to provide for vSphere (or the KVM transport nodes of NSX-T) and NSX communication, we must establish the connectivity requirements of an NSX Data Center deployment with ACI. That being stated, the two major requirements of NSX Data Center are essentially the same as any other underlay network:

- IP network – The NSX platform operates on any IP switch fabric.

- MTU size for Transport or Overlay – NSX requires a minimum MTU size of 1600 bytes. Application performance is optimized in an environment with a jumbo frame size setting of 9000 (a vSphere VDS maximum) across the entire fabric for operational ease.

Note: The virtualized workloads will require an MTU setting no larger than 8900 to facilitate the tunnel overlay’s additional headers.
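The MTU guidance above can be checked with simple arithmetic. The sketch below assumes an IPv4 outer header (20 bytes), a UDP header (8 bytes), an 8-byte encapsulation header (the size of the VXLAN header and of the Geneve base header without options), and the encapsulated inner Ethernet header (14 bytes). Geneve options add a variable number of bytes, modeled here with the hypothetical `option_bytes` parameter. The function names are illustrative only.

```python
OUTER_IPV4 = 20      # outer IPv4 header without options
OUTER_UDP = 8        # outer UDP header
ENCAP_HEADER = 8     # VXLAN header, or Geneve base header without options
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel


def required_underlay_mtu(workload_mtu, option_bytes=0):
    """Smallest underlay IP MTU that carries a workload packet without fragmentation."""
    return (workload_mtu + INNER_ETHERNET + ENCAP_HEADER
            + option_bytes + OUTER_UDP + OUTER_IPV4)


def fits(workload_mtu, underlay_mtu, option_bytes=0):
    return required_underlay_mtu(workload_mtu, option_bytes) <= underlay_mtu


print(required_underlay_mtu(1500))  # 1550, inside the 1600-byte minimum
print(fits(8900, 9000))             # True: 8950 bytes needed on a 9000-byte fabric
print(fits(9000, 9000))             # False: the workload MTU must stay below the fabric MTU
```

Under these assumptions, the 1600-byte minimum leaves headroom beyond the 50 bytes of fixed overhead, and a workload MTU of 8900 leaves the same kind of headroom on a 9000-byte fabric.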

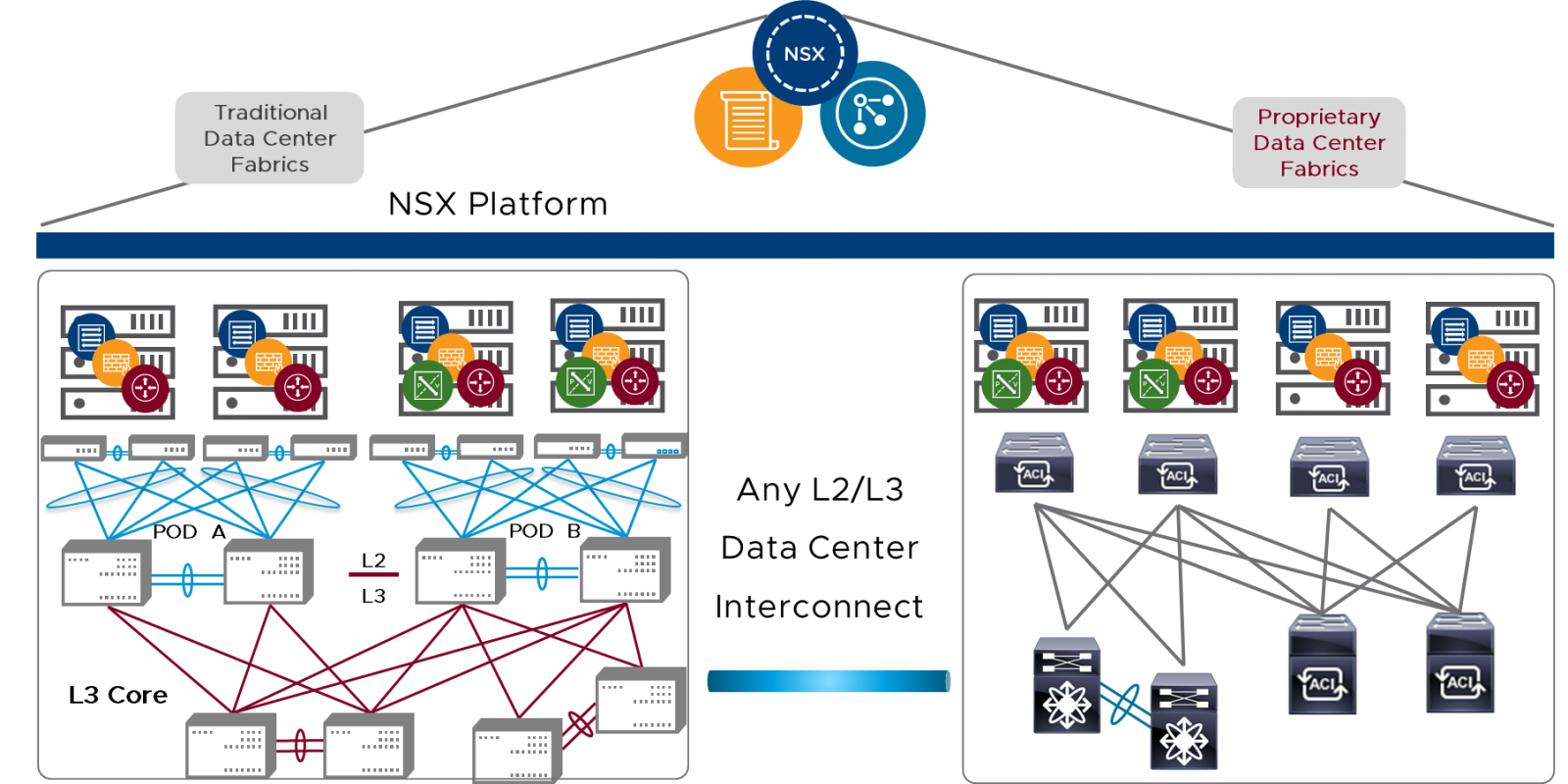

Many environments likely use a traditional-style VLAN-backed multi-tier pod-based architecture. Whether this architecture is retained or a migration to a newer fabric such as the leaf-spine model is under way, the NSX Data Center platform operates essentially the same: an agnostic but highly interoperable approach to all switch fabrics. Figure 19 highlights NSX interoperability across all switch fabrics, inclusive of one or more public clouds.

Figure 19: NSX Platform Is Interoperable upon Any Switch Fabric

The difference and the purpose for this portion of our discussion is to establish how connectivity will be provided within the ACI underlay. This begins with mapping out the logical connectivity and the vSphere pNICs that will carry this traffic.

As in our general validated design for NSX, there are four infrastructure traffic types, with one traffic type, vMotion, specifically used for vSphere ESXi clusters. Each traffic type is recommended to be run within its own VLAN. Consolidation of these traffic types into fewer VLANs is possible, but not recommended for this paper. Table 2 summarizes these four traffic types.

| Infrastructure Traffic Types | Functions | VLAN ID |
| --- | --- | --- |
| Management | ESXi and NSX management plane | 100 |
| vMotion (vSphere ESXi only) | VM mobility | 101 |
| IP Storage VLAN | Applications and infrastructure data store connectivity | 102 |
| Transport Zone (Overlay Network) | Overlay VTEP (NSX for vSphere) | 103 |
| Edge Transit VLANs | Edge Cluster Connectivity to physical underlay | 201, 202 |
| Layer 2 Bridging | 1-to-1 mapping of VLANs bridged from overlay | - |

Table 2: Infrastructure VMkernel Traffic Types and VLAN

Terminology: The terms Transport and Overlay are interchangeable unless otherwise noted.

These four VLANs are defined for segregating the infrastructure traffic types, or the like number of VMkernel interfaces in the case of ESXi hosts. Any logical numbering for VLANs and subnets used in the design guide is merely a suggestion.

Overall, each hypervisor host will be prepared with these infrastructure networks and presented to Cisco ACI through multiple physical uplinks. In standard rack server compute format, there are usually two physical NICs. In the case of blade compute format, Cisco UCS for example, at least two logical NICs are configured to allow access to dual fabrics for redundancy and performance.

Note: For more in-depth information regarding designing NSX Data Center with Cisco UCS, see the VMworld 2017 session, Deploying NSX on a Cisco Infrastructure (NET1350BUR-r1).

During the preparation of the hosts, a special infrastructure interface for the encapsulation traffic is formulated for the express purpose of servicing the overlay traffic for the transport zone. The overlay transport zone is where the tunnel endpoints are defined that provide network connectivity within the transport zones.

NSX Data Center hosts and VM nodes (NSX-T) prepped as transport nodes create a separate kernel service to carry overlay communication. In the case of NSX for vSphere, a separate IP stack is also installed for the VTEPs. In NSX for vSphere, this additional IP stack provides a separation for the VTEPs’ routing table and allows use of a separate default gateway. But our design mitigates the need for routing between endpoints of the respective infrastructure traffic types, by viewing the entire Cisco ACI fabric as a layer 2 domain. The Cisco ACI guides themselves state the ACI fabric can be viewed as a single layer 3 hop fabric. Therefore, there is pervasive layer 2 connectivity throughout the fabric, which allows for the four VLANs allotted for the infrastructure traffic, including the VTEPs and their transport zone, to be deployed as four flat logically separate networks. We require only a single set of these VLANs for a single NSX Data Center deployment to segregate the infrastructure traffic.

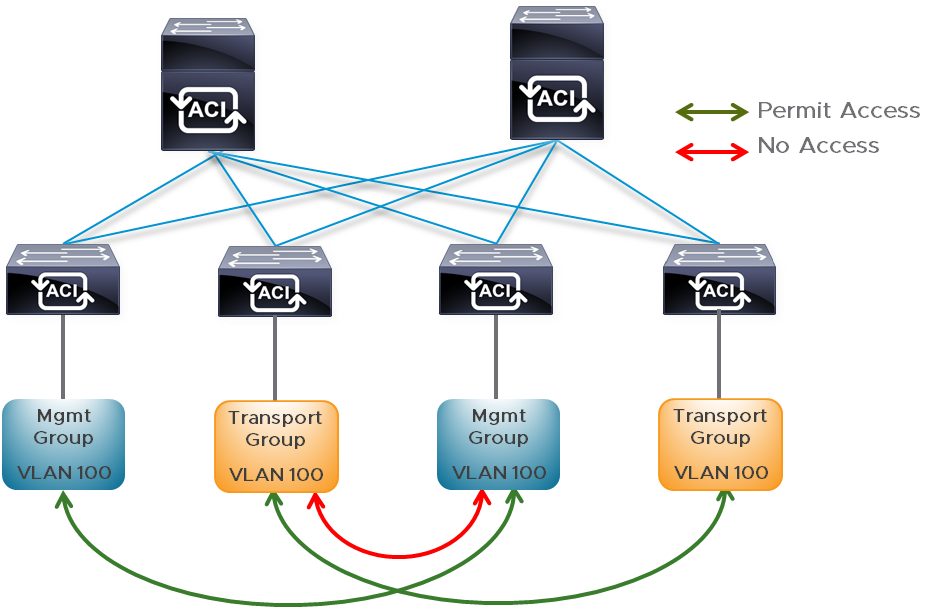

To provide allowed connectivity between endpoints of each individual traffic type, we will use an ACI construct called an endpoint group (EPG). In ACI, all endpoints within an EPG are permitted to communicate without additional allowances, filters, or contracts. Each of the infrastructure VLANs will be mapped to an individual EPG. In this use of VLANs in ACI, the corresponding configuration step involved in their use when mapping endpoints to EPGs is one of several fundamental management concepts for managing connectivity within the ACI fabric. The optimal design option for EPG use in our design is “EPG as a VLAN.” We will show how to configure the use of EPGs and all other necessary Cisco ACI constructs required for our NSX Data Center deployment on a Cisco ACI underlay.

Our design will make use of a network-centric deployment of ACI to remove much of the complexity involved in its setup and day-2 operations. This essentially means all connectivity to the fabric is configured in separately assigned VLANs through each EPG. For the rest of the design, the VLANs required for NSX are equivalent to an EPG required to be configured at an ACI leaf through a combination of fabric policies and tenant-based Application Profile EPGs. Figure 20 displays the allowance of intra-EPG communication, with no communication permitted inter-EPG without an ACI contract.

Figure 20: ACI Intra-EPG and Inter-EPG Communication Example
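The behavior shown in Figure 20 reduces to a simple decision: endpoints in the same EPG may communicate, while endpoints in different EPGs need a contract. The following is a minimal conceptual model of that rule, not ACI code. The EPG names and the single contract are hypothetical, and real contracts also carry filters and direction, which are omitted here.

```python
def communication_permitted(src_epg, dst_epg, contracts):
    """Model of EPG policy: intra-EPG is allowed, inter-EPG requires a contract."""
    if src_epg == dst_epg:
        return True
    return (src_epg, dst_epg) in contracts or (dst_epg, src_epg) in contracts


# Hypothetical contract joining two EPGs; the infrastructure EPGs have none.
contracts = {("EPG-A", "EPG-B")}

print(communication_permitted("EPG-Transport", "EPG-Transport", contracts))  # True
print(communication_permitted("EPG-Transport", "EPG-vMotion", contracts))    # False
print(communication_permitted("EPG-A", "EPG-B", contracts))                  # True
```

This is why mapping each infrastructure VLAN to its own EPG keeps the traffic types isolated from one another by default while leaving hosts within a traffic type free to communicate.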

Refer to the following for more information on using EPG as VLAN:

- EPG as a VLAN section in Cisco Application Centric Infrastructure (ACI) – Endpoint Groups (EPG) Usage and Design

- Per Port VLAN section of Cisco Application Centric Infrastructure Fundamentals

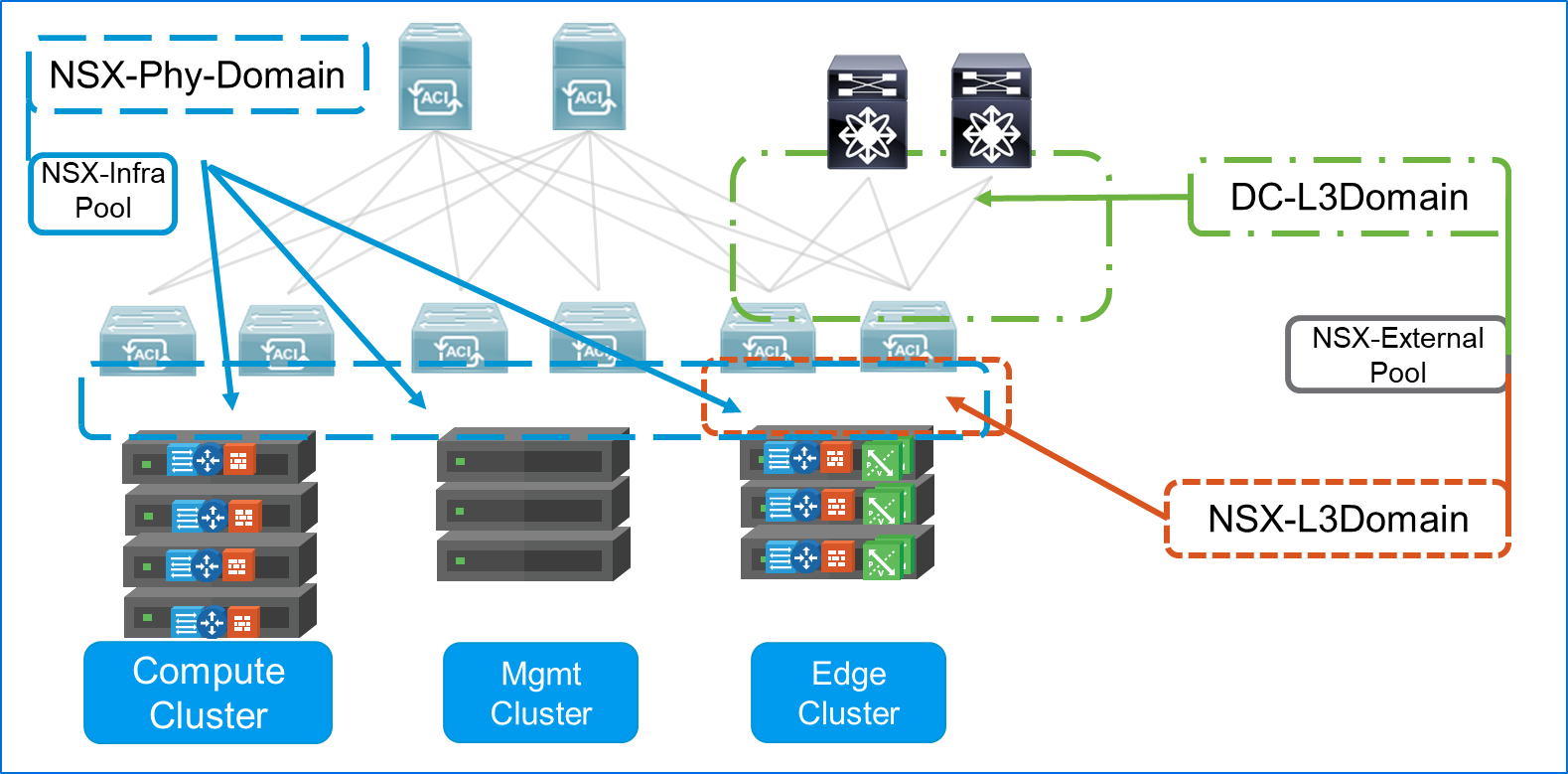

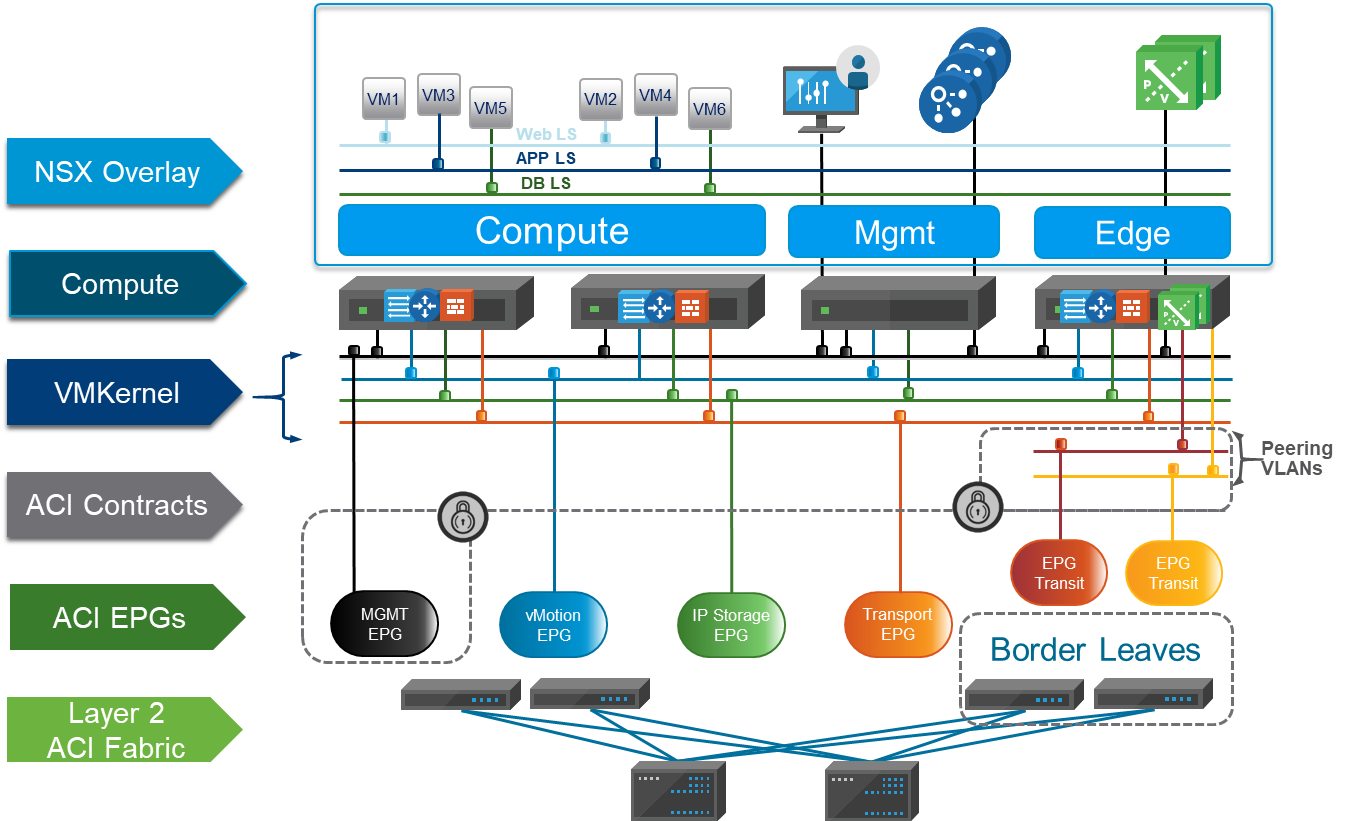

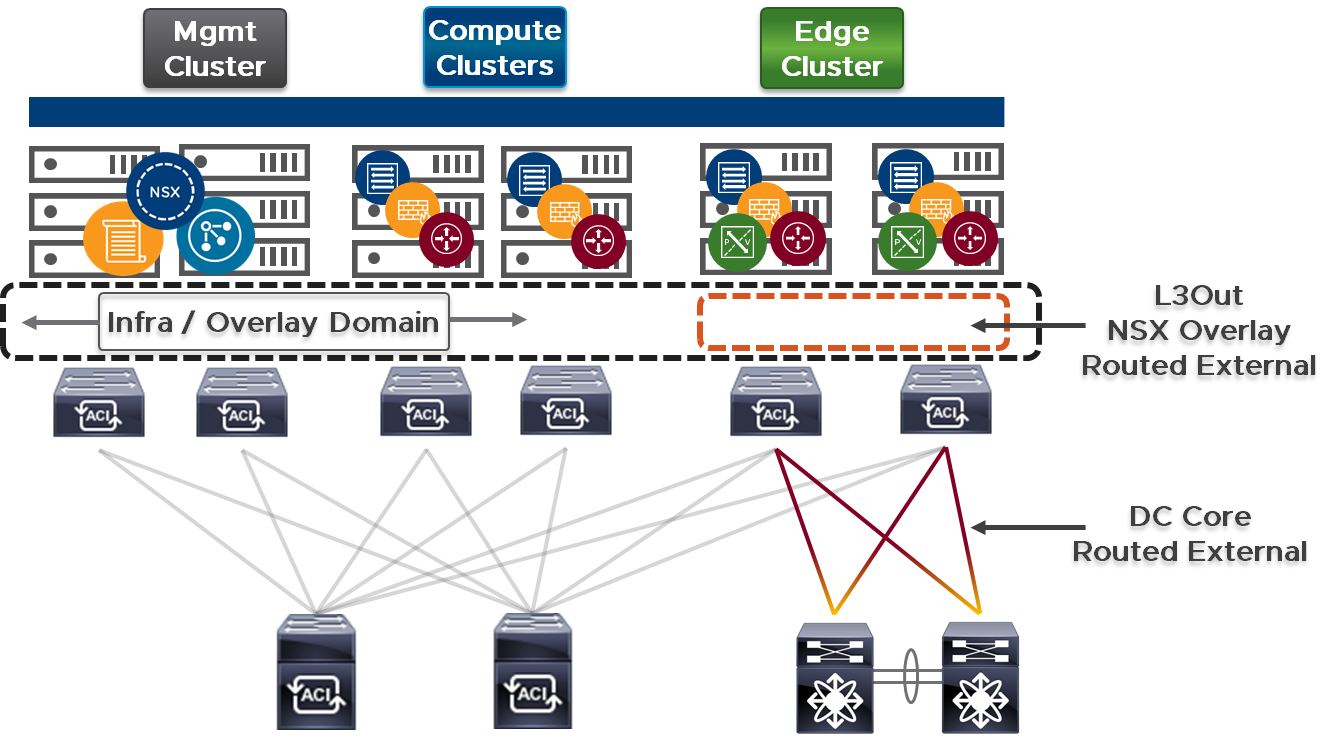

In using an EPG as a VLAN, a network-centric operational construction of the ACI fabric helps establish a good portion of the required communication for our NSX on ACI Underlay design. Figure 21 displays a high-level view of NSX Data Center running on an ACI infrastructure. Pictured are the three standard NSX operational clusters: Management, Compute, and Edge. In addition, the associated infrastructure connectivity for the NSX operational clusters is managed through Cisco ACI EPGs. Figure 21 also includes the required EPGs for the transit communication use by the NSX edges to route the NSX overlay network.

Figure 21: NSX Data Center Deployed on an ACI Underlay – High-Level EPG View

Configuring the infrastructure EPGs (VLANs) is a one-time task in the ACI APIC and provides the basis for deployment of NSX and the NSX platform operation. With ACI operating as a layer 2 fabric for the bulk of the infrastructure communication, the infrastructure EPGs can be scaled by adding physical ports along with any newly introduced switches when additional compute growth is required. This design ideal was created with the explicit purpose of aiding the maintenance of a stable switch fabric operating state, especially Cisco ACI. A minimal amount of modification to the fabric’s basic transport configuration is required when future scale-out is required. Our design facilitates the creation of NSX logical networks independent of the physical network and therefore eliminates defining new EPGs (VLANs) for new logical segments, additional workloads, or any logical application-scaling requirement needed for workloads deployed within the NSX overlays.
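As a rough illustration of the one-time EPG setup, the sketch below builds a JSON document that maps each infrastructure VLAN from Table 2 to its own EPG with a static path binding. It only constructs and prints the structure; nothing is sent to an APIC. The class names (`fvTenant`, `fvAp`, `fvAEPg`, `fvRsBd`, `fvRsPathAtt`) and the `vlan-<id>` encapsulation notation reflect the APIC object model as commonly documented and should be verified against the APIC REST API reference for the release in use. The tenant, application profile, EPG, and bridge domain names and the leaf port path are hypothetical, and the bridge domains themselves would need to be defined separately.

```python
import json

# Infrastructure VLANs from Table 2 (the numbering is only a suggestion).
INFRA_VLANS = {"Management": 100, "vMotion": 101, "IP-Storage": 102, "Transport": 103}


def build_epg(name, vlan_id, bridge_domain, static_paths):
    """One EPG bound to a bridge domain, with the VLAN as encapsulation on each path."""
    children = [{"fvRsBd": {"attributes": {"tnFvBDName": bridge_domain}}}]
    for path in static_paths:
        children.append(
            {"fvRsPathAtt": {"attributes": {"tDn": path, "encap": f"vlan-{vlan_id}"}}}
        )
    return {"fvAEPg": {"attributes": {"name": name}, "children": children}}


def build_tenant(tenant, app_profile, static_paths):
    """A single tenant with a single application profile holding all infrastructure EPGs."""
    epgs = [
        build_epg(f"EPG-{name}", vlan, f"BD-{name}", static_paths)
        for name, vlan in INFRA_VLANS.items()
    ]
    profile = {"fvAp": {"attributes": {"name": app_profile}, "children": epgs}}
    return {"fvTenant": {"attributes": {"name": tenant}, "children": [profile]}}


# Hypothetical names and leaf port; adjust to the actual fabric.
payload = build_tenant(
    "NSX-Tenant", "NSX-Infra-AP", ["topology/pod-1/paths-101/pathep-[eth1/1]"]
)
print(json.dumps(payload, indent=2))
```

Scaling out compute in this model means appending entries to the list of static paths; the set of EPGs and VLANs does not change.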

For all virtual workload traffic, the NSX Transport VLAN is used. For example, all VMs deployed upon the logical overlay use a default gateway represented as a logical interface (LIF) of a distributed logical router (DLR) shown previously in the section on Distributed Routing. This logical switched and routed fabric operates independent of the switch fabric for the most part.

Likewise, the infrastructure VLANs for any necessary routed physical fabric connectivity may use the distributed gateway feature of ACI. This ACI feature provides a consistent routed interface for the entire infrastructure network on every ACI leaf if needed. The key point is that the default gateway of the VM is provided by NSX and is different from the gateway for the VMkernel interfaces.

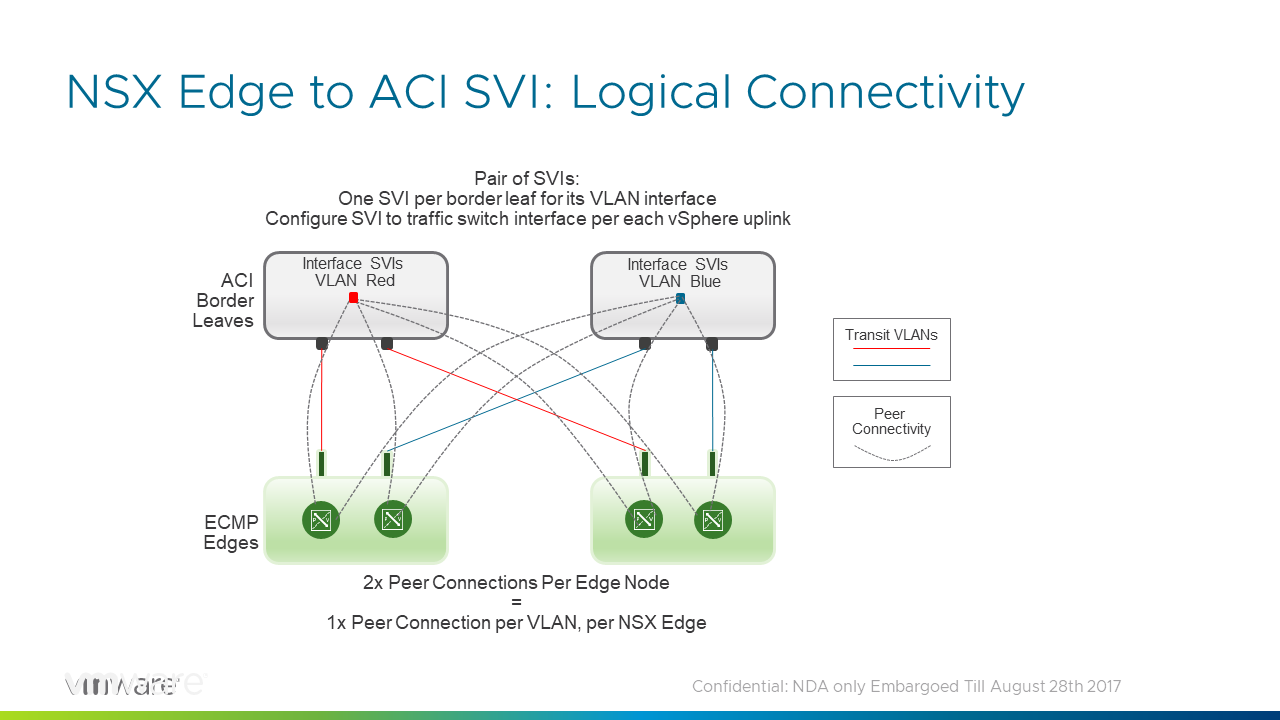

As previously noted in Table 2, there are additional VLANs required to establish the transit communication paths used for the edge cluster’s connectivity to the physical fabric. Cisco ACI has a specially designed EPG called an L3Out to establish routed connectivity external to the fabric. An ACI external routing domain is a specific portion of the ACI tenant connectivity for routed connectivity exiting the ACI fabric. Our design makes use of this ACI abstraction along with a minimum of two transit VLANs configured for the L3Out EPG.

An optional set of VLANs may also be required if leveraging NSX Data Center for L2 bridging. Table 2 references that the VLANs required for this use will be equivalent to the number of overlay networks (for example, VXLAN IDs to VLAN IDs) bridged to VLANs in the physical switched network.
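To illustrate how small the fabric-facing VLAN footprint stays, the following minimal sketch tallies the VLAN-backed EPGs described in this section. The function name and the assumed set of four infrastructure VLANs (management, vMotion, IP storage, and NSX transport) are illustrative assumptions; Table 2 remains the authoritative list.

```python
# Illustrative tally only; names and the infrastructure VLAN set are assumptions.
INFRA_VLANS = ("management", "vmotion", "ip_storage", "nsx_transport")


def required_vlans(bridged_overlay_networks: int = 0, transit_vlans: int = 2) -> int:
    """Return the number of VLANs (EPGs) the fabric must carry for NSX."""
    if transit_vlans < 2:
        raise ValueError("the design calls for a minimum of two transit VLANs")
    if bridged_overlay_networks < 0:
        raise ValueError("bridged_overlay_networks cannot be negative")
    # One VLAN per bridged overlay network, only when L2 bridging is used.
    return len(INFRA_VLANS) + transit_vlans + bridged_overlay_networks


print(required_vlans())                            # no L2 bridging
print(required_vlans(bridged_overlay_networks=3))  # three bridged overlay networks
```

The total grows only with the number of bridged overlay networks, not with the number of logical segments created inside the overlay.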

2.2 Cluster Connectivity for the NSX Infrastructure Clusters

The general design criteria used for connecting ESXi hosts (KVM hosts for NSX-T) to the ToR switches for each type of rack takes into consideration

- The type of traffic carried – Overlay, vMotion, management, storage

- Type of isolation required based on traffic SLA – Dedicated uplinks (for example, for vMotion/Management) vs. shared uplinks

- Type of cluster – Compute workloads, edge, and management either with or without storage

- The amount of bandwidth required for overlay traffic (single vs. multiple VTEP/TEP)

- Simplicity of configuration – LACP vs. non-LACP

- Convergence and uplink utilization factors – Flow-based vs. MAC-based

A diverse setup of uplink connectivity options is provided by the respective NSX Data Center platforms (NSX for vSphere and NSX-T), and their respective hosts’ uplink and virtual switching service offerings. For connectivity discussions in this document, all hosts used will be assumed to be dual connected to the switch fabric. Further connectivity discussion and guidance can be found within their respective NSX Data Center design guides.

2.2.1 Management Cluster Connectivity

The management cluster consists of hosts supporting multiple critical virtual machines and virtual appliances. The VMs for the NSX manager and controllers are typically deployed in the management cluster, which requires high availability (for surviving the failure of the host or ToR/uplink). Further, the management cluster is not required to be prepared for use with an overlay, as management connectivity is performed via the fabric underlay. Thus, connectivity for the vSphere hosts forming the management cluster is a VLAN-based port-group on a separate VDS from the other functional clusters, Compute and Edge.

This document calls out the use of SRC_ID for all teaming of the uplinks from the virtual switch perspective. LACP teaming mode can be used and would require LACP on the Cisco ACI ports connecting to the management hosts. For Cisco ACI switches, this is achieved by enabling traditional layer 2 VLAN-based vPC (Virtual Port Channel). To avoid the need for additional pNICs and uplink connectivity, all the traffic types including management, vMotion, and IP storage can be configured to use the same LAG. This would require a substantial change in the initial configuration of the fabric access policies to assemble the necessary ACI objects to support vPC connectivity. Consult Cisco ACI documentation for use and configuration of vPC.

2.2.2 Compute Cluster Connectivity

NSX offers a clear departure from the traditional methods for designing the infrastructure connectivity. The necessary VLANs are required to be defined only once for infrastructure traffic (overlay, vMotion, storage, management). Connectivity for the logical switching of the overlay for the virtual workloads, VMs, and containers is defined programmatically without relying on the physical network. This decoupling enables a repeatable rack design where physical planning (power, space, cooling, and cookie-cutter switch configuration) is streamlined. The physical network only requires robust forwarding and adequate bandwidth planning.

The compute cluster requires the most flexibility as it carries multiple types of traffic. Each type of traffic can have its own service level. For example, the storage traffic requires the lowest latency, as opposed to vMotion, which may require higher bandwidth.

Some workloads may have many sources and destinations and require load sharing by using multiple tunnel endpoints (VTEPs or TEPs). The flexibility of selecting teaming mode per infrastructure traffic type, and allowing variability in choosing the teaming mode for the overlay (as described in the VDS uplink configuration section), are primary reasons for not recommending LACP for the compute cluster hosts’ connectivity to Cisco ACI. A corollary reason is a day-2 operational concern: non-LACP connectivity simplifies troubleshooting.

2.2.3 Edge Cluster Connectivity

NSX Data Center edges provide multi-function services such as north-south routing, firewall, NAT, load balancing, and various VPN capabilities. The capabilities and features are beyond the scope of this paper. Please refer to the individual design guides for the respective NSX Data Center platform:

- VMware NSX for vSphere Network Virtualization Design Guide

- VMware NSX-T for Data Center Reference Design Guide

This document covers the necessary technical details pertinent to physical and logical connectivity required for connecting an NSX Data Center edge cluster to the switch fabric. The critical functions of the edge cluster that provide connectivity to the NSX Data Center overlay are

- On-ramp and off-ramp connectivity to physical networks (north-south L3 routing delivered by NSX Edge virtual appliances, or NSX bare metal edge of NSX-T for Data Center)

- Allows communication with physical devices connected to VLANs in the physical networks

- Supports centralized logical or physical services (firewall, load balancers, and logical router control VM)

NSX edges perform a similar function as border leaves, providing on-ramp and off-ramp gateways just like ACI connected resources do, to access northbound networks and users. It is highly recommended that you align the NSX Edge cluster connectivity to border leaves in the ACI fabric.

The benefits of confining edge clusters to the pair of border leaves within ACI are

- Consistent single-hop connectivity for the traffic flow from NSX to ACI connected devices as well as north-bound network access

- Localizes the routing configuration for north-south traffic, reducing the need to apply any additional configuration knobs for north-south routing on the compute leaves

- Allows network admins to manage the cluster workload that is network-centric (operational management, BW monitoring, and enabling network-centric features such as NetFlow and security)

Using border leaves in this way is typical of any spine/leaf design.

2.2.4 Demystifying the Overlay Transport and IP Connectivity

This section demystifies the use of an overlay, its capabilities, and the difference in the way ACI implements the physical underlay functionality. It also explains the benefits of using an overlay encapsulation that originates from a hypervisor, such as an ESXi host, to support logical networks and layer 3 logical routing that are agnostic to the physical network underlay. Also note that either encapsulation used by NSX, VXLAN for NSX for vSphere or Geneve for NSX-T, provides the exact same overlay benefits proposed in this discussion.

NSX for vSphere for Data Center enables standard VXLAN encapsulation, as does the proposed Geneve in the case of NSX-T for Data Center, at the hypervisor level and thus treats the ACI iVXLAN fabric like any other IP transport. The advantage of overlay encapsulation in the hypervisor follows.

An overlay encapsulation sourced in the hypervisor decouples the connectivity for the logical space from the physical network infrastructure. Devices connected to the logical networks can leverage the complete set of network services (load balancer, firewall, NAT) independent of the underlying physical infrastructure. This addresses many of the challenges within traditional data center deployments: it diminishes the risk of L2 loops, enables agile and programmatic application deployment, allows vMotion across layer 3 boundaries, and supports multi-tenancy. It also overcomes the VLAN limitation of 4,094 logical segments.
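The segment limit follows directly from the width of the identifier field. The short calculation below is a sketch of that arithmetic, using the 12-bit 802.1Q VLAN ID and the 24-bit network identifier carried by both VXLAN and Geneve. The constant names are illustrative, and the number of segments a given NSX release actually supports is set by that product's configuration maximums, not by the raw field size.

```python
# Identifier widths defined by the respective encapsulation standards.
VLAN_ID_BITS = 12   # 802.1Q VLAN ID field
VNI_BITS = 24       # VXLAN / Geneve network identifier field

# VLAN IDs 0 and 4095 are reserved, leaving 4,094 usable segments.
usable_vlans = 2**VLAN_ID_BITS - 2
vni_space = 2**VNI_BITS

print(f"Usable VLAN IDs:        {usable_vlans:,}")
print(f"Overlay identifier space: {vni_space:,}")
```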

Hypervisor-based encapsulation allows NSX to not only operate on a proprietary fabric like ACI, but also allows the freedom to change or enhance the encapsulation since it is done in software. Decoupling the underlay from the overlay further enhances network operations and allows faster adaptation of technology.

Traffic flow for an NSX overlay on a Cisco ACI fabric is treated similarly to any other switch fabric from an application workload viewpoint. The Cisco ACI fabric is not aware of the NSX overlay encapsulated traffic and treats the traffic received from the tunnel endpoints as normal IP traffic. The ACI fabric forwards all communication within the switch fabric using its own non-standard proprietary VXLAN header for inter-rack traffic, with the ACI span of control terminating at the top of rack as a VLAN.

The following steps are the high-level overview of a packet processed for end-to-end communication of a VM-to-VM workload using an NSX for vSphere deployment with an ACI fabric underlay. Figure 22 provides a graphical view of the same set of steps that are discussed.

Figure 22: NSX VM-to-VM Packet Flow over an ACI Underlay

- Source VM originates an L2 Frame encapsulating an IP packet with source and destination IP address for the application workload.

- Source hypervisor performs lookup for a VTEP where the destination VM is hosted. The VM’s L2 frame is encapsulated with the corresponding VXLAN and UDP header. Further, the outer frame constructed will contain source and destination IP addresses of the NSX VTEPs and an 802.1Q VLAN value for the NSX for vSphere transport zone.

(Up to this point, these steps follow a standardized method used for NSX overlay packet processing and forwarding.)

- When this standard VXLAN frame from the hypervisor reaches the ACI leaf, the ACI leaf will remove the outer VLAN encapsulating the NSX VXLAN packet and add its own non-standard, proprietary VXLAN header used for ACI tunnel endpoint communication within the ACI switch fabric.

(This will look like a “double encapsulated” packet if sniffed inside the fabric; more on this shortly.)

- As the packet egresses the ACI fabric, the ACI leaf will strip the ACI fabric VXLAN encapsulation and replace it with an appropriate destination VLAN header, leaving the original NSX VXLAN packet intact.

- The destination hypervisor will strip off the VLAN header after transferring it to the appropriate VTEP dvPortgroup. The VTEP will receive this packet, strip off the NSX VXLAN header, and transmit the packet onto the NSX logical switch where the destination VM resides.

- The destination VM receives the intended L2 frame.

This encapsulation process resembles the packet flow of any standard VLAN-backed switch fabric and differs only modestly in the ACI fabric’s use of VXLAN. An NSX-T overlay would employ the same general set of steps. Regardless of whether the fabric uses VLAN or VXLAN encapsulation, packet forwarding on an NSX platform is performed at line rate.
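The packet-flow steps can be sketched as a sequence of header pushes and pops. The model below is a simplified illustration, not a packet implementation: each frame is a list of header labels (outermost first), the function names are hypothetical, the transport VLAN ID of 100 is an assumed value, and the ACI fabric header is treated as an opaque label because it is proprietary.

```python
# Each frame is modeled as a list of header labels, outermost first.
VM_FRAME = ["inner Ethernet", "inner IP", "application payload"]


def nsx_encap(frame, transport_vlan):
    """Source hypervisor: wrap the VM frame for the NSX transport VLAN."""
    outer = [f"802.1Q VLAN {transport_vlan}", "outer IP (NSX VTEPs)", "UDP", "NSX VXLAN"]
    return outer + frame


def aci_ingress(frame):
    """Ingress ACI leaf: remove the VLAN tag, add the fabric header."""
    if not frame[0].startswith("802.1Q"):
        raise ValueError("expected a VLAN-tagged frame from the host")
    return ["ACI fabric VXLAN (opaque)"] + frame[1:]


def aci_egress(frame, destination_vlan):
    """Egress ACI leaf: strip the fabric header, restore a VLAN tag."""
    if not frame[0].startswith("ACI fabric VXLAN"):
        raise ValueError("expected a fabric-encapsulated frame")
    return [f"802.1Q VLAN {destination_vlan}"] + frame[1:]


def nsx_decap(frame):
    """Destination hypervisor: strip VLAN tag, outer IP/UDP, and NSX VXLAN."""
    if not frame[0].startswith("802.1Q") or frame[3] != "NSX VXLAN":
        raise ValueError("expected a VLAN-tagged NSX VXLAN frame")
    return frame[4:]


TRANSPORT_VLAN = 100  # hypothetical VLAN ID for the NSX transport EPG

on_wire = nsx_encap(VM_FRAME, TRANSPORT_VLAN)
in_fabric = aci_ingress(on_wire)
at_destination = aci_egress(in_fabric, TRANSPORT_VLAN)
delivered = nsx_decap(at_destination)

for stage, frame in [
    ("host to leaf", on_wire),
    ("inside fabric", in_fabric),
    ("leaf to host", at_destination),
    ("delivered to VM", delivered),
]:
    print(f"{stage:16}: {' | '.join(frame)}")
```

In this model the NSX VXLAN header passes through the fabric untouched, and the frame delivered to the destination VM is identical to the one the source VM sent.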

To complete this discussion, the previously mentioned “double encapsulation” has zero effect on the ability to provide proper flow monitoring or packet analysis. Any modern packet analysis tool can decode the headers of packets captured within a VXLAN underlay with NSX VXLAN running on top. Figure 23 displays an example of a captured packet within the ACI fabric using ACI ERSPAN to forward packets to an analysis system. A VM running a recent version of Wireshark displays a decoded NSX VXLAN packet.

Figure 23: Wireshark Decoding NSX VXLAN Packet Captured on an ACI Underlay
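To give a sense of what decoding the NSX layer involves, the following minimal sketch extracts the network identifier from a standard 8-byte VXLAN header as laid out in RFC 7348. It is an illustration only and is not how Wireshark is implemented. The function name and the sample segment ID of 5001 are assumptions, and the sketch applies to the standard NSX VXLAN header, not to the proprietary ACI fabric header.

```python
import struct

VXLAN_HEADER_LEN = 8
VNI_VALID_FLAG = 0x08  # the "I" flag in the first byte of the header


def parse_vxlan_vni(data: bytes) -> int:
    """Return the 24-bit VNI from a standard VXLAN header."""
    if len(data) < VXLAN_HEADER_LEN:
        raise ValueError("a VXLAN header is 8 bytes long")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    first_word, second_word = struct.unpack("!II", data[:VXLAN_HEADER_LEN])
    flags = first_word >> 24
    if not flags & VNI_VALID_FLAG:
        raise ValueError("I flag not set; the VNI field is not valid")
    return second_word >> 8


# Hypothetical header carrying segment ID 5001.
sample_header = bytes([VNI_VALID_FLAG, 0, 0, 0]) + (5001).to_bytes(3, "big") + b"\x00"
print(parse_vxlan_vni(sample_header))
```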

The NSX overlay is a powerful construct. It provides the foundation for a truly software-programmable network that leaves the hardware infrastructure untouched, simplifying its operation and maintenance while requiring only a small and stable set of VLANs for the infrastructure traffic.

2.3 Cisco ACI Connectivity Options with the NSX Infrastructure

Our standard NSX design calls out three options for deployment of the compute services:

- Three separate functional compute clusters consisting of Management, Compute, and the Edge cluster

- Collapsed Management and Edge cluster and a separate Compute cluster

- Collapsed Management, Compute, and Edge deployment

The last two options may be employed for smaller deployments, operational necessity, or a desire for simplicity. The first option, separating all three, tends to be chosen for mid-size to larger deployments. This guide concentrates much of its discussion on the first two options. Nevertheless, most design variations in this section, which covers interfacing the compute services with the ACI infrastructure, concern the number of pNICs used for a rack server infrastructure platform and which type of teaming should be used for load distribution and availability. The blade server form factor, such as Cisco UCS, would have a different set of connectivity options to use.

Note: For a discussion on connectivity with NSX and using the Cisco UCS blade form factor, review the VMworld presentation from 2017, Deploying NSX on a Cisco Infrastructure (NET1350BUR-r1). Also, the previously mentioned design guides for NSX Data Center for vSphere and for NSX-T should be referenced for a discussion on load sharing.