NSX Easy Adoption Design Guide

1 Introduction

1.1 Scope of the document

VMware NSX is a full-stack Software-Defined Networking and Security offering from VMware. It contains L2 through L7 network and security services designed to meet the needs of deployments ranging from small two-node development or proof-of-concept environments to highly regulated global enterprises, Service Providers, and mega cloud providers. This solutions guide is not intended for massive service providers and global enterprises. It is actionable for small to medium-sized deployments that fall into two specific use cases:

- A simplified security solution designed for existing workloads where the physical network retains many networking functionalities.

- A full-stack design that primarily targets new deployments minimizing interaction with the external network while providing extensive flexibility and Network and Security services inside the solution.

The solutions presented focus on the following goals and parameters:

- Physical network-friendly configuration, with minimal changes to the physical network

- Leverage the existing knowledge of vSphere and security administrators

- Exploit the features and capabilities from NSX to build a flexible yet consolidated solution for a variety of application needs, services (NAT, VPN, FW, LB) and security

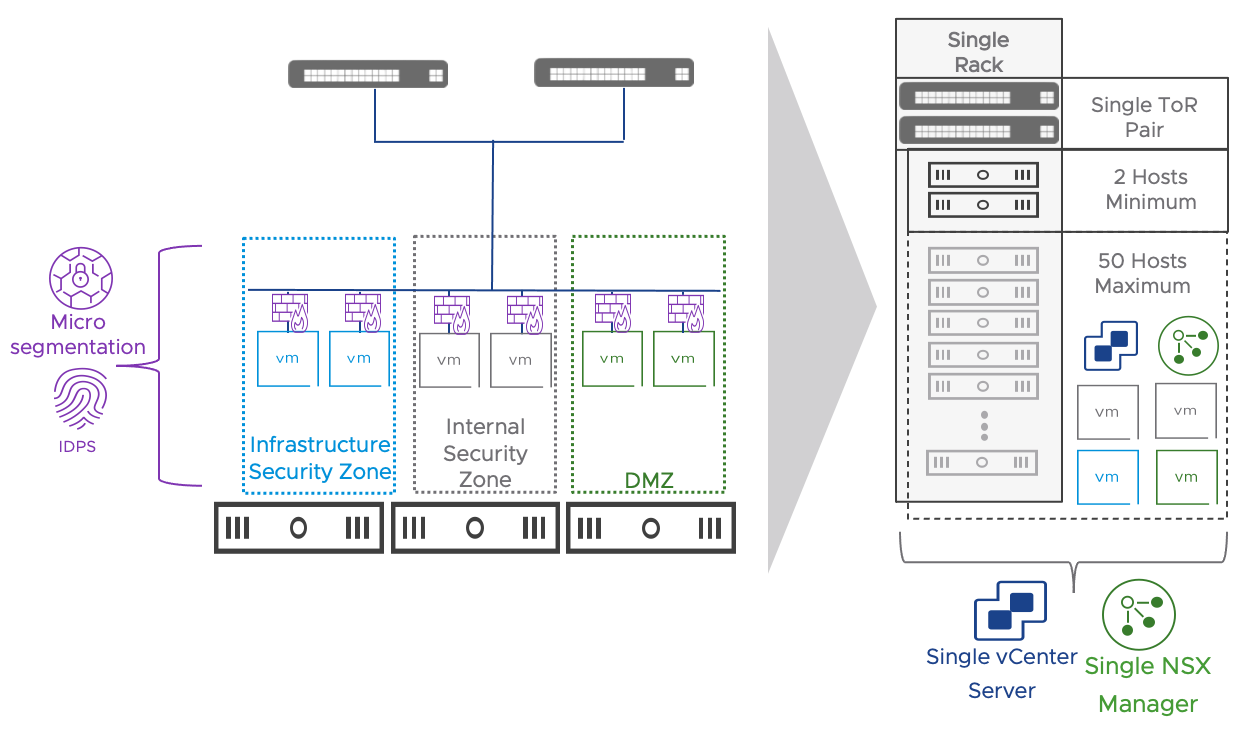

- Scope of deployment matching the most common footprint for small workloads, satellite DCs, and hosted solutions:

- The number of hosts ranges from two to 50

- Single vSphere Cluster deployment model

- Total number of virtual machines is less than 1000

- All hosts connect to a single pair of switches (usually in a single rack)

- Infrastructure-level services (e.g., NTP, DNS, LDAP/AD) already exist and are reachable
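The scope criteria above can be captured as a quick pre-deployment checklist. The following minimal Python sketch is illustrative only: the `Environment` fields and the `scope_violations` helper are hypothetical names, not part of any NSX tool. The thresholds come from the list above; reachability of NTP, DNS, and LDAP/AD is not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Environment:
    """Hypothetical description of a target environment."""
    hosts: int
    vms: int
    vsphere_clusters: int
    switch_pairs: int


def scope_violations(env: Environment) -> list[str]:
    """Return the reasons an environment falls outside this guide's scope."""
    problems = []
    if not 2 <= env.hosts <= 50:
        problems.append("host count must be between 2 and 50")
    if env.vms >= 1000:
        problems.append("total VM count must be below 1000")
    if env.vsphere_clusters != 1:
        problems.append("a single vSphere cluster is expected")
    if env.switch_pairs != 1:
        problems.append("all hosts should connect to a single pair of switches")
    return problems


# Example: an 8-host satellite DC with 300 VMs fits the scope (empty list).
print(scope_violations(Environment(hosts=8, vms=300, vsphere_clusters=1, switch_pairs=1)))
```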

The minimum NSX software version to implement the solutions described in this document is NSX 3.1.3. Later versions have not been validated but should be compatible with the two use cases in scope.

Given their specific audience, the solutions presented in this document make some compromises in favor of simplicity of implementation and operations, at the expense of optimized performance and the handling of compound failure scenarios. If these trade-offs are not acceptable, we recommend using this guide as a starting point for a customized solution or adopting VMware Cloud Foundation, which includes NSX as a core component.

1.2 How to use this document

This document has two main sections, each addressing both use cases at a different level of detail.

Section 2 covers a high-level overview of the two solutions, together with their value proposition in the context of well-defined requirements and constraints. We also include a brief overview of the relevant NSX components.

Section 3 provides a detailed design and engineering specification for both use cases. It includes a comprehensive list of assumptions about the supporting infrastructure. Each design decision is accompanied by its justification and implications, making the designs actionable and the rationale behind each choice transparent.

Readers are encouraged to send feedback to NSXDesignFeedback_AT_groups_vmware_com (convert to email format).

2 Solutions Overview

2.1 The Simple Security and DC in a Box solutions

This solutions reference guide provides guidelines to streamline the adoption of VMware NSX in small environments. The prescriptive approaches presented here minimize the time required to plan and design the implementation of software-defined security, with or without network virtualization, on a single vCenter, single vSphere cluster infrastructure. While this topology is common in small and medium businesses, enterprises may also leverage it, especially if they embrace the infrastructure consumption model common in public cloud environments, which is based on smaller independent units of computing resources.

This solutions reference guide presents two different approaches to adopting VMware NSX, enabling simplified but high-value consumption of Software-Defined Networking and Security for VMware vSphere-based environments. The approaches differ based on the state of the vSphere environment in which the NSX admin implements the solution.

The first use case (labeled Simple Security for Applications) is about rapidly gaining some of the benefits of VMware NSX in an existing VMware vSphere-based environment, commonly referred to as a brownfield implementation. In this scenario, the VMware vSphere environment is already in place and applications have already been deployed. The objective is to elevate the functional level of the environment with robust but non-invasive software-defined security services, without major application, vSphere, or physical network modifications or redesigns. Without introducing any physical or virtual security appliance in the data path, VMware NSX provides L2-L7 security services to all the workloads in the virtual environment. For each workload, an independent instance of the NSX Distributed Firewall runs in the ESXi hypervisor, providing the ability to inspect all network traffic. Virtual machines on the same network can be separated into different security zones, with only the desired traffic allowed.

The second use case (labeled Data Center in a Box) focuses on deploying a full-stack solution as a net-new environment, commonly called greenfield. In this use case, networking, security, and other essential services such as load balancing, NAT, and VPN are offered as a single software-defined solution that interacts only minimally with the physical network. This minimal interaction is essential to accelerate the deployment and consumption of the new environment. The term Data Center in a Box refers to the entire software stack capabilities of NSX and is a powerful driver for appliance consolidation and improved consumption models.

NSX supports integrating environments managed by multiple vCenter Servers within a single SDN deployment, and the solutions presented in this document could be extended to a multi-vCenter deployment. Integrating multiple vCenter Server environments with a single NSX deployment provides a unified networking and security model across those environments, which simplifies management by a single entity. This use case and design model are out of scope for this document, which focuses on the smaller, independent compute units common in satellite DCs and hosted solutions.

2.2 Overview of the Relevant NSX Components

NSX is a distributed system capable of providing the full stack of networking services (switching, routing, security, QoS) in software. This section covers the NSX components and functionalities relevant to the two solutions in scope for this document. For a more in-depth explanation of these concepts, refer to the Architecture section of the NSX Reference Design Guide.

2.2.1 NSX Manager

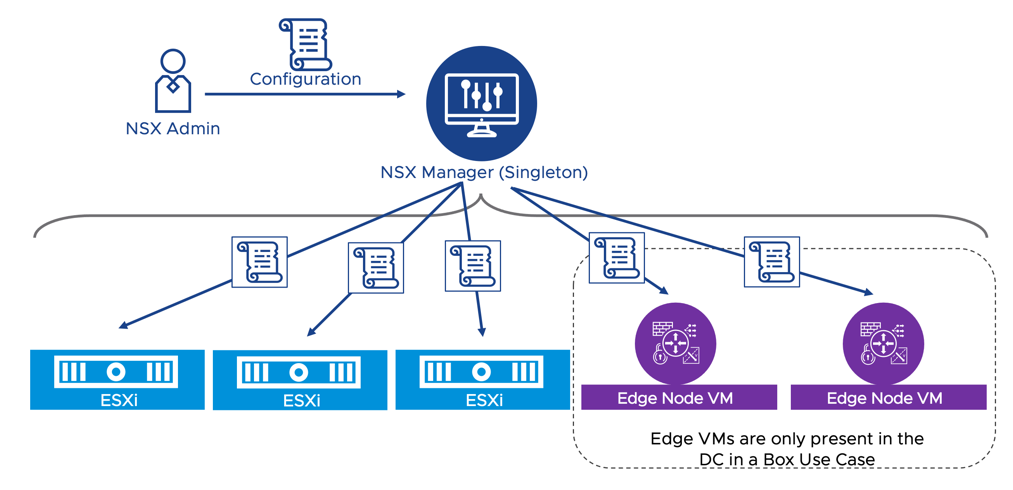

NSX Manager is a virtual appliance that provides management and control plane services to the NSX solution. It is the single point of management and monitoring for the entire system. The NSX admin can push configurations via the GUI or the API. Those configurations are then distributed to the data plane components of the platform.

The general recommendation is to deploy a cluster of three NSX Manager appliances for high availability. However, to minimize resource consumption in a small deployment, the two solutions described in this document adopt a singleton deployment model instead. vSphere HA and backup/restore procedures are used to recover the NSX Manager in case of failure. The singleton deployment can be scaled out to a cluster of three NSX Managers if higher availability is deemed necessary.

In the simple security for applications use case, NSX Manager validates the user-defined security policies and stores them in its internal database. The NSX admin can define security policies based on objects, tags, IP addresses, AD groups, etc. NSX Manager expands those definitions, converts them into IP-based rules, and pushes them to the hypervisors for enforcement. To do so, NSX Manager must map every object referenced in the configured security policy to its VM IP addresses. It relies on the ESXi hypervisors to collect the VM inventory, discover the VM IPs via ARP snooping, DHCP snooping, or VMware Tools, and report this information to NSX Manager. The process is completely independent of the vCenter Server managing the hosts; the hosts could potentially even be standalone.
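The expansion step can be illustrated with a small model. This Python sketch assumes a hypothetical in-memory inventory (VM name mapped to tags and discovered IPs) and a hypothetical rule format. It only shows the idea of resolving tag-based groups into the IP-based rules pushed to the hypervisors, not how NSX Manager actually implements it.

```python
from __future__ import annotations

# Hypothetical inventory as reported by the ESXi hosts:
# VM name -> (tags, IPs discovered via ARP/DHCP snooping or VMware Tools).
INVENTORY = {
    "web-01": ({"web"}, ["10.1.1.11"]),
    "web-02": ({"web"}, ["10.1.1.12"]),
    "app-01": ({"app"}, ["10.1.2.21"]),
}


def expand_group(tag: str, inventory: dict) -> list[str]:
    """Resolve a tag-based group into the IPs of its current members."""
    ips = []
    for tags, vm_ips in inventory.values():
        if tag in tags:
            ips.extend(vm_ips)
    return sorted(ips)


def expand_rule(rule: dict, inventory: dict) -> dict:
    """Translate an object-based rule into an IP-based rule."""
    return {
        "src": expand_group(rule["src_tag"], inventory),
        "dst": expand_group(rule["dst_tag"], inventory),
        "port": rule["port"],
        "action": rule["action"],
    }


rule = {"src_tag": "web", "dst_tag": "app", "port": 8443, "action": "ALLOW"}
print(expand_rule(rule, INVENTORY))
# {'src': ['10.1.1.11', '10.1.1.12'], 'dst': ['10.1.2.21'], 'port': 8443, 'action': 'ALLOW'}
```

When a new VM tagged `app` is discovered, only the inventory changes; re-running the expansion updates the IP-based rule without anyone editing the policy itself.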

In the Data Center in a Box Solution, NSX Manager also provides centralized control plane services for the overlay network. It maintains the global MAC address table and the global ARP table for the system. For an in-depth explanation of these functionalities, please review the logical switching section of the NSX Reference Design Guide.

Figure 1: NSX Manager - Single point of management for network and security

2.2.2 ESXi Host

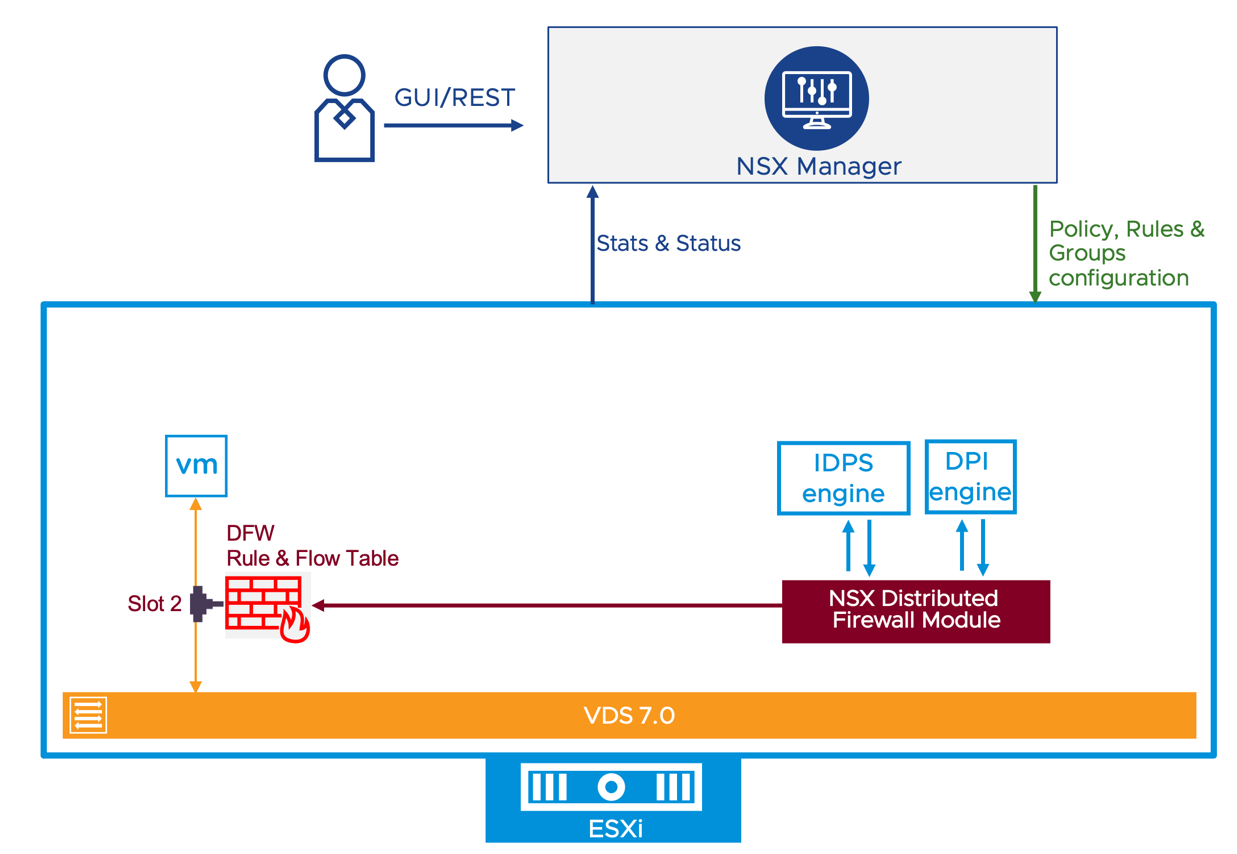

The ESXi hosts provide virtual machine connectivity via the VDS and constitute the enforcement point for security policies. Each ESXi host connects to the NSX Manager appliance and receives the firewall configuration from it. The host pushes the firewall rules to the data plane filters, instantiated in the kernel, for each VM's virtual NIC (vNIC).

The NSX admin configures the scope of enforcement in the “Apply-To” field of a policy or rule. The host uses that information to ensure that only the relevant DFW rules are programmed on each virtual NIC. The hosts report the firewall policy realization status and statistics back to NSX Manager, where they are centrally available to the NSX admin via the UI or the API.
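The effect of the Apply-To scope can be sketched as a filter over the rule table. In this Python sketch, the rule records, group names, and the `"ANY"` keyword are hypothetical stand-ins. It shows why a vNIC that belongs only to the web group receives the web rule and the default rule, but not the database rule.

```python
from __future__ import annotations

# Hypothetical rule table; "applied_to" lists the groups each rule is scoped to.
RULES = [
    {"id": 1001, "applied_to": ["web"], "desc": "allow HTTPS to web tier"},
    {"id": 1002, "applied_to": ["db"], "desc": "allow SQL from app tier"},
    {"id": 1003, "applied_to": ["ANY"], "desc": "default rule"},
]


def rules_for_vnic(vnic_groups: set[str], rules: list[dict]) -> list[int]:
    """IDs of the rules a host would program on a vNIC in the given groups."""
    return [
        r["id"]
        for r in rules
        if "ANY" in r["applied_to"] or vnic_groups & set(r["applied_to"])
    ]


print(rules_for_vnic({"web"}, RULES))  # [1001, 1003]
```

Scoping rules this way keeps the rule set held by each vNIC filter small, even when the global policy grows.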

An independent instance of the distributed firewall is created for each VM vNIC. The NSX admin can configure each instance with unique firewall rules appropriate for the protected workload, without the need for an agent, while NSX Manager provides the central management capabilities necessary in such a distributed security deployment.

Advanced security features such as Intrusion Prevention and Application Firewall are also available via the Intrusion Detection and Prevention System engine (IDPS) and the Deep Packet Inspection (DPI) engine respectively. Those components run in the ESXi host. The NSX Distributed Firewall module can pass traffic to the IDPS engine or the Deep Packet Inspection engine when the NSX admin specifies in the configuration that those services should be applied to a specific traffic flow.

Figure 2: ESXi Host Distributed Security Data Plane Implementation

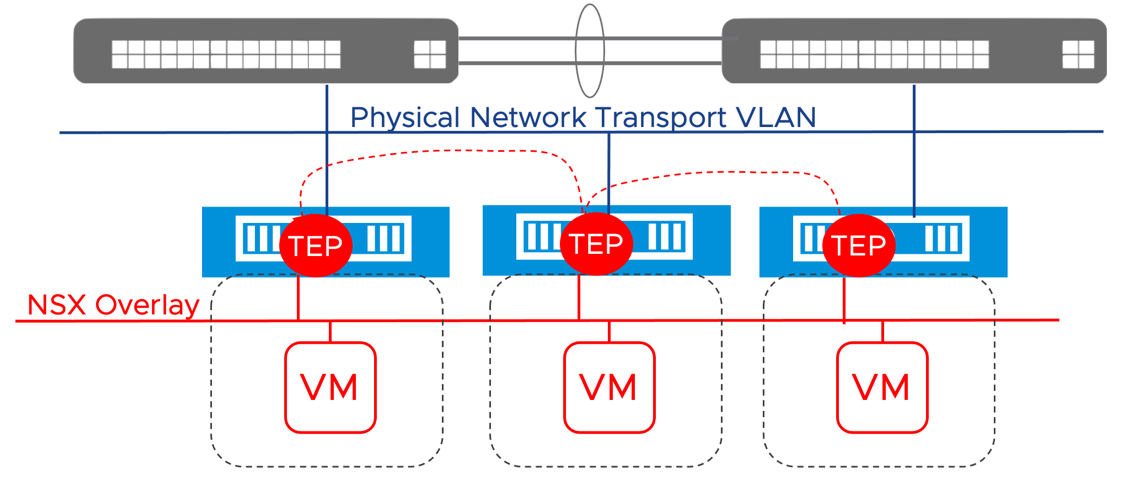

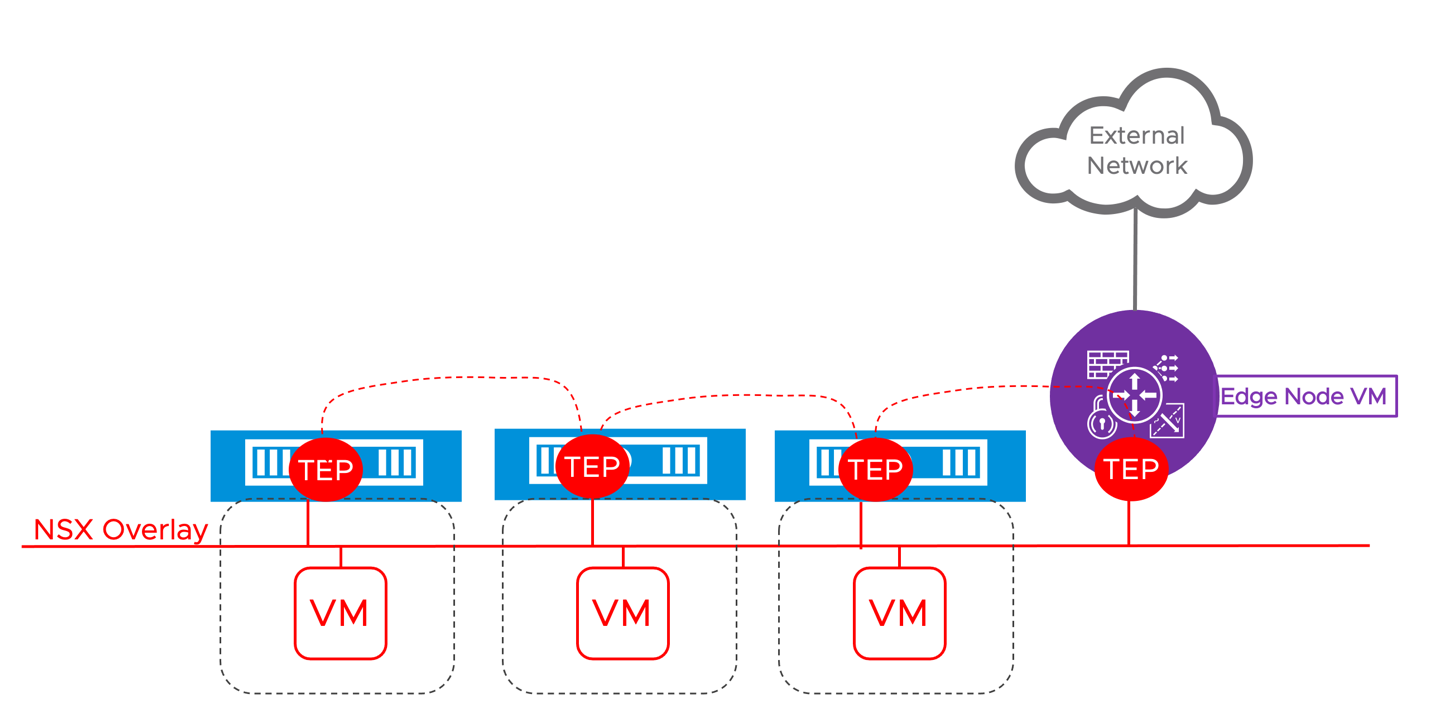

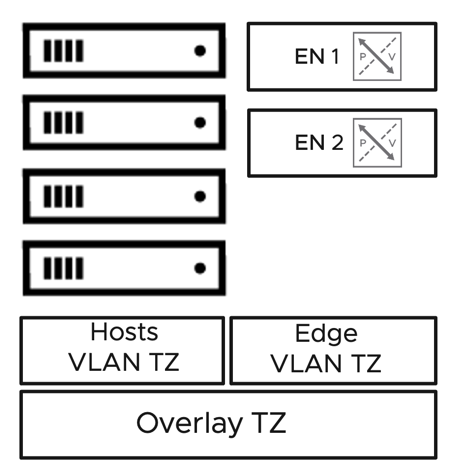

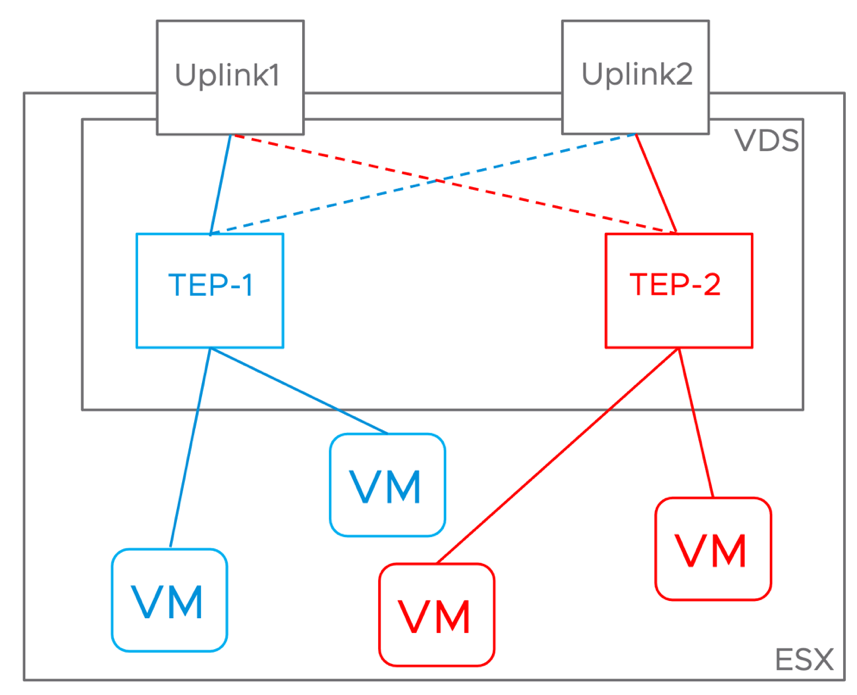

In the DC in a Box use case only, the VDS also provides advanced networking services. When the NSX admin creates a new overlay segment, the virtual switches on the different hosts create a virtual network on top of the basic connectivity provided by the physical network. Virtual machines on the same network but on different hosts can communicate because the hosts establish Layer 2 over Layer 3 tunnels via dedicated VMkernel interfaces named Tunnel EndPoints (TEPs). This mechanism allows the DC in a Box administrator to create and delete networks without coordinating with the physical network administrator.

Figure 3: NSX Overlays
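The forwarding decision a host makes for overlay traffic can be sketched as a table lookup. This Python sketch assumes a hypothetical MAC-to-TEP table derived from the global MAC address table that NSX Manager maintains, along with hypothetical segment identifiers and addresses. The only protocol constant it uses is the IANA-assigned Geneve UDP port, 6081; encapsulation itself is not implemented.

```python
from __future__ import annotations

from dataclasses import dataclass

GENEVE_UDP_PORT = 6081  # IANA-assigned destination port for Geneve (RFC 8926)


@dataclass(frozen=True)
class Frame:
    segment_vni: int  # hypothetical overlay segment identifier
    dst_mac: str


# Hypothetical (segment, MAC) -> TEP IP table derived from the global MAC table.
MAC_TO_TEP = {
    (71001, "00:50:56:aa:00:01"): "172.16.10.11",  # VM on host A
    (71001, "00:50:56:aa:00:02"): "172.16.10.12",  # VM on host B
}


def forward(frame: Frame, local_tep: str) -> str:
    """Decide how a host handles a frame sent by a local VM on an overlay segment."""
    tep = MAC_TO_TEP.get((frame.segment_vni, frame.dst_mac))
    if tep is None:
        return "replicate as BUM traffic"  # unknown unicast: replicate to the segment
    if tep == local_tep:
        return "deliver locally"  # destination VM runs on this host
    return f"encapsulate (VNI {frame.segment_vni}, UDP {GENEVE_UDP_PORT}) to TEP {tep}"
```

The physical network only sees UDP traffic between TEP addresses, which is why creating new segments requires no physical network changes.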

2.2.3 Edge Node VMs – Data Center in a Box Use Case Only

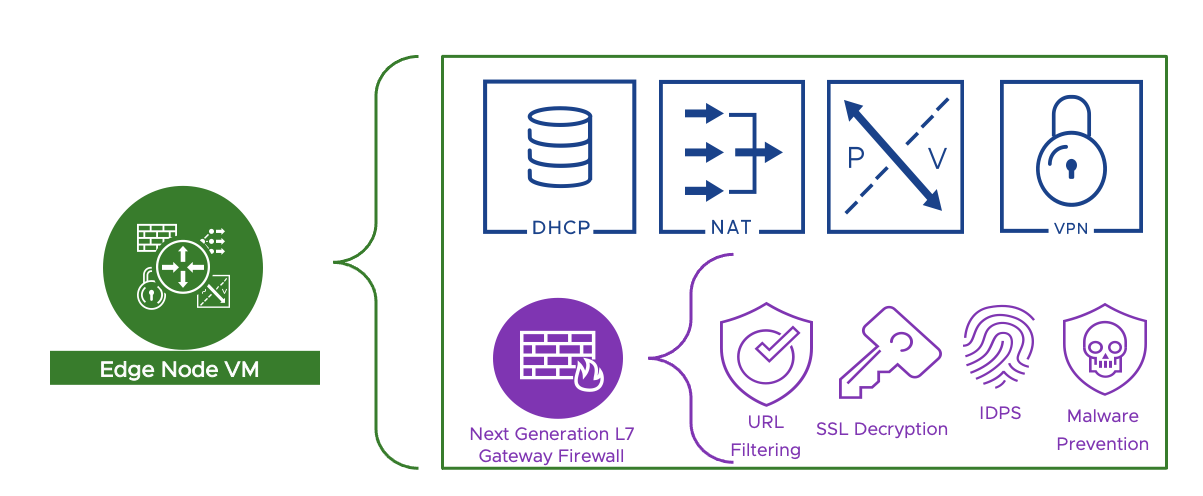

In the simple security for applications use case, the only additional virtual machine deployed on the collapsed vSphere cluster is the NSX Manager virtual appliance. The DC in a Box use case adds two more virtual appliances, the NSX Edge node VMs. These two VMs provide a pool of capacity for edge networking and security services such as routing with the physical network, Network Address Translation (NAT), Next Generation Firewall, DHCP server, DNS forwarder, VPN, and bridging. In the DC in a Box use case, all services are deployed in Active/Standby mode, so two Edge node VMs are required to provide high availability.

Figure 4: The Edge node VM provides edge networking and security services to the DC in a Box solution

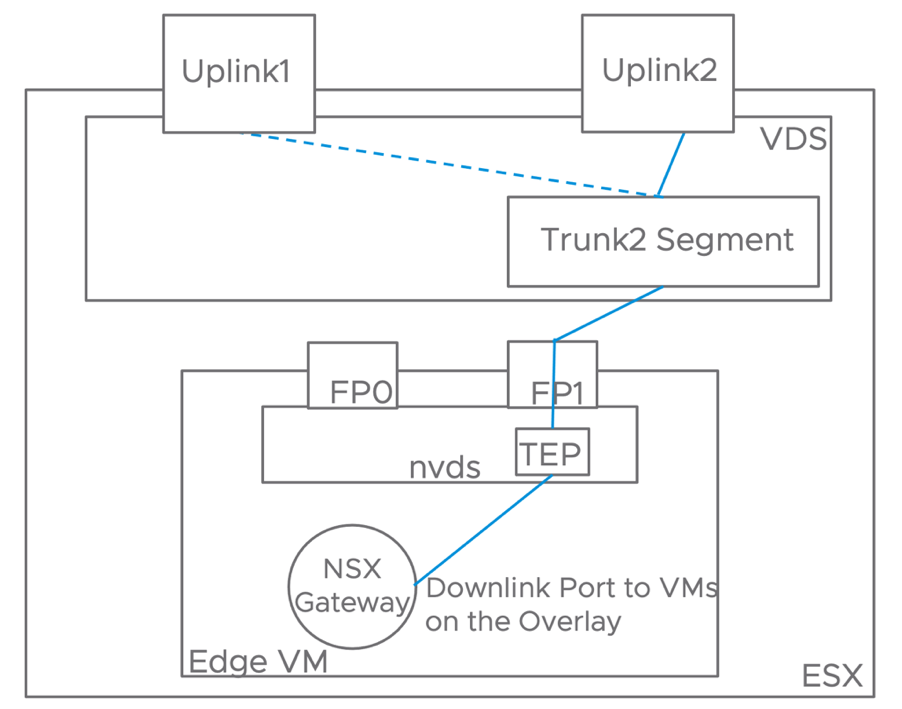

A gateway is an NSX configuration object that is deployed when the administrator wants NSX to provide routing services. When only East-West routing within the data center is required, NSX instantiates the gateway on the ESXi hosts only. In this case, routing capabilities are fully distributed, and traffic never traverses the edge node VMs. Each host runs a distributed router component (DR) capable of locally routing the traffic for all the connected overlay segments and acting as the default gateway for the VMs on the host.
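The local routing performed by the DR can be sketched as a longest-prefix-match lookup over the connected segments. In this Python sketch, the segment names and subnets are hypothetical. The fallback branch assumes the gateway has also been instantiated on the edge nodes for north-south traffic; it is an illustration of the concept, not the DR implementation.

```python
from __future__ import annotations

import ipaddress

# Hypothetical overlay segments attached to the gateway. Every host's DR
# knows all of them and acts as the default gateway on each one.
CONNECTED_SEGMENTS = {
    "web-segment": ipaddress.ip_network("10.1.1.0/24"),
    "app-segment": ipaddress.ip_network("10.1.2.0/24"),
}


def dr_lookup(dst_ip: str) -> str:
    """Route a packet on the local host's DR using longest-prefix match."""
    addr = ipaddress.ip_address(dst_ip)
    matches = [(net, name) for name, net in CONNECTED_SEGMENTS.items() if addr in net]
    if matches:
        _, name = max(matches, key=lambda m: m[0].prefixlen)
        return f"route locally onto {name}"
    # Not a connected segment: north-south traffic toward the edge nodes.
    return "send to edge nodes"
```

East-West traffic between the two segments is resolved entirely on the source host, which is why it never crosses the edge node VMs.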

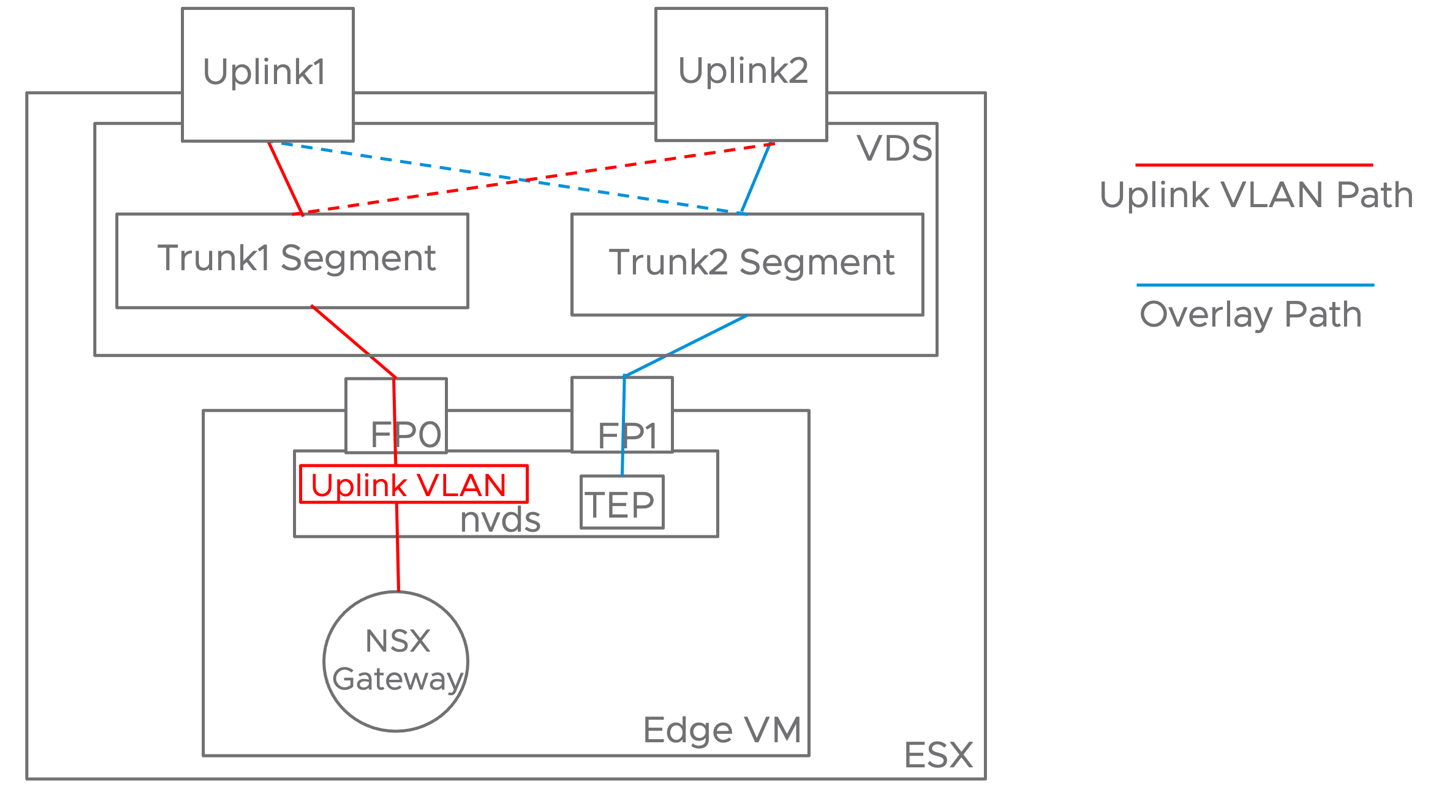

When peering with the physical network or network services are required, NSX Manager also instantiates the gateway on the edge nodes to apply more advanced functionalities to the traffic. Edge nodes participate in the NSX overlay network together with the ESXi hosts. They receive traffic over those tunnels, process it with L4-L7 capabilities, and forward it to the physical network.

Figure 5: The edge node VM participates in the overlay while providing physical to virtual connectivity and network services

The DC in a Box design includes a single gateway deployed in Active/Standby mode across the two edge nodes, with all the stateful edge networking services of the solution attached to it. The edge nodes themselves do not follow the Active/Standby model: edge nodes are always active, and it is the services running on top of them that are active or standby. Edge nodes can run a mix of active and standby services simultaneously. However, because of the single gateway design in the DC in a Box use case, one edge node runs all the active services while the other hosts all the standby services. This design resembles a physical network appliance model, where the traffic flow is predictable because one of the devices does not process traffic until a failure occurs.
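The single-gateway placement can be sketched as follows. This Python model is hypothetical: the edge names, the boolean health flags, and the preferred-node choice are assumptions, and it does not reflect how NSX actually detects failures. It only illustrates that, because every service follows the one gateway, one node holds all active services and the other holds all standby services.

```python
from __future__ import annotations

# Stateful services attached to the single gateway in this design.
GATEWAY_SERVICES = ["NAT", "gateway firewall", "DHCP server", "DNS forwarder", "VPN"]


def place_gateway(edges: dict[str, bool], preferred: str) -> dict[str, str | None]:
    """Pick the edge node hosting the active gateway and the one hosting standby.

    `edges` maps edge node name -> healthy flag (hypothetical health model).
    """
    healthy = [name for name, ok in edges.items() if ok]
    if not healthy:
        raise RuntimeError("no healthy edge node available")
    active = preferred if preferred in healthy else healthy[0]
    standby = next((name for name in healthy if name != active), None)
    return {"active": active, "standby": standby}


def services_on(node: str, placement: dict[str, str | None]) -> dict[str, str]:
    """Role of every gateway service on the given edge node."""
    if node == placement["active"]:
        role = "active"
    elif node == placement["standby"]:
        role = "standby"
    else:
        role = "none"
    return {svc: role for svc in GATEWAY_SERVICES}


normal = place_gateway({"edge-01": True, "edge-02": True}, preferred="edge-01")
failover = place_gateway({"edge-01": False, "edge-02": True}, preferred="edge-01")
print(normal, failover)
```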

2.2.4 NSX Application Platform (NAPP) – Optional for both use cases

With NSX Data Center 3.2, VMware introduced the NSX Application Platform (NAPP), a microservices-based solution that provides a highly available, resilient, scale-out architecture delivering a set of core platform services that run several new NSX features, such as:

- NSX Intelligence (Application topology discovery and visualization, security policy recommendation)

- NSX Malware Prevention

- Network Traffic Analysis

- NSX Network Detection and Response

Even the most basic deployment of the simple security for applications use case can benefit from the addition of NAPP, thanks to the application topology mapping and security policy recommendation capabilities provided by NSX Intelligence. NAPP is required to activate some of the advanced threat prevention capabilities, such as network traffic analysis (NTA), distributed malware prevention, and network detection and response (NDR). NAPP is not required for distributed intrusion detection and prevention (IDPS).

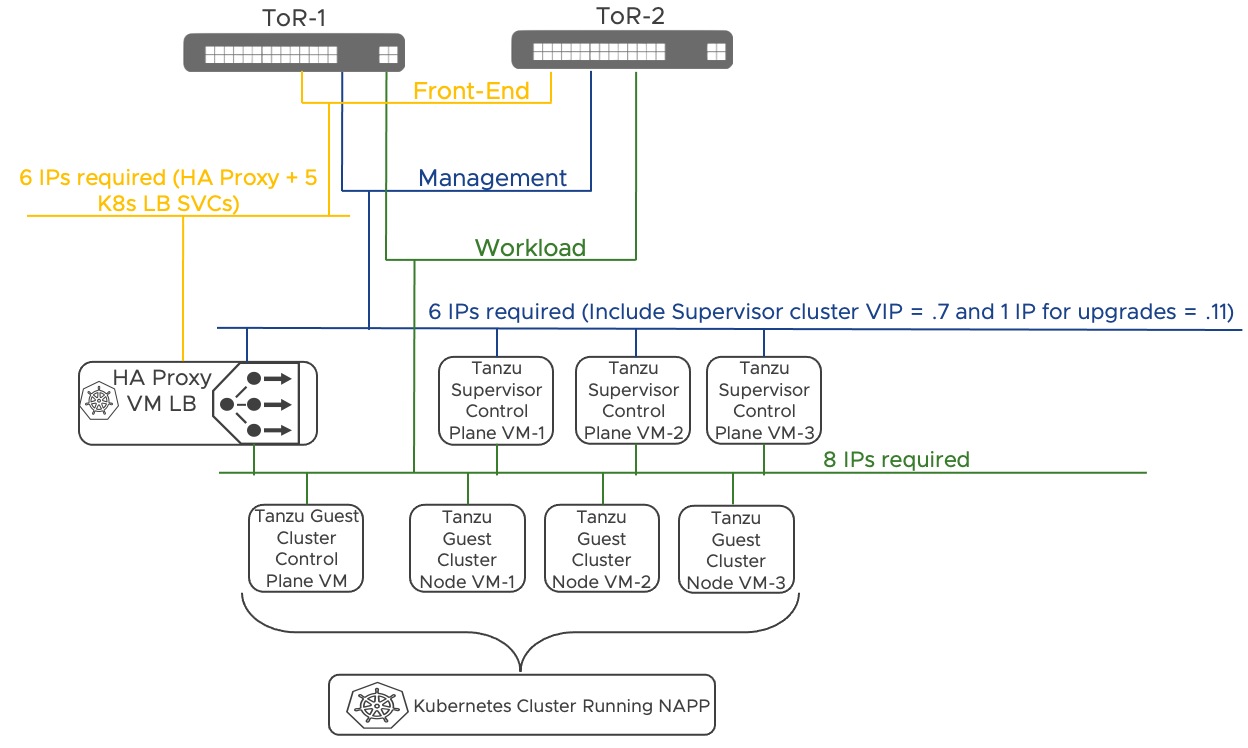

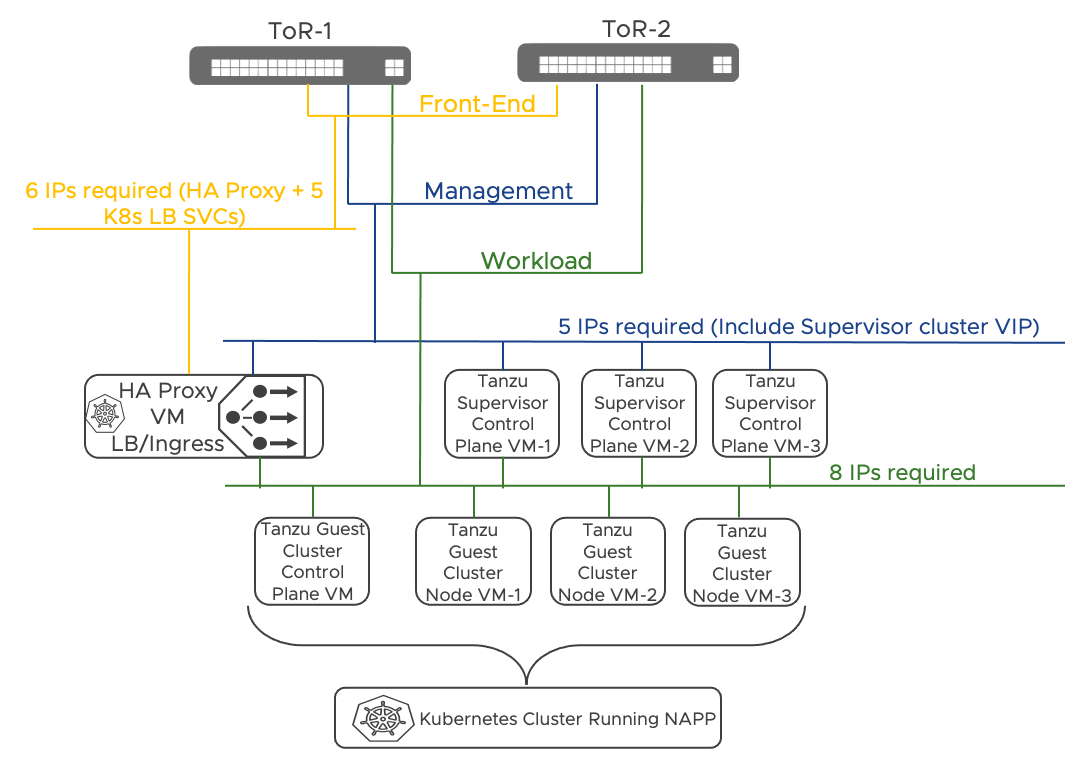

Deploying NAPP requires a Kubernetes cluster that meets the platform requirements. VMware Tanzu Kubernetes Clusters (TKC) and upstream Kubernetes clusters are the supported Kubernetes distributions. A production deployment requires, at a minimum, a cluster comprising a single control plane node and three worker nodes. System requirements for the different form factors are documented here.

In this guide, to facilitate the deployment of the NSX Application Platform and the supporting Kubernetes cluster, we recommend the use of the NAPP Automation Appliance. The NAPP Automation appliance automates the enablement of a vSphere with Tanzu workload domain on the target vSphere environment, the deployment of a TKC with appropriate specifications to support the NSX Application Platform, and the deployment of NAPP itself. The resulting architecture provides an end-to-end solution that is fully supported by VMware.

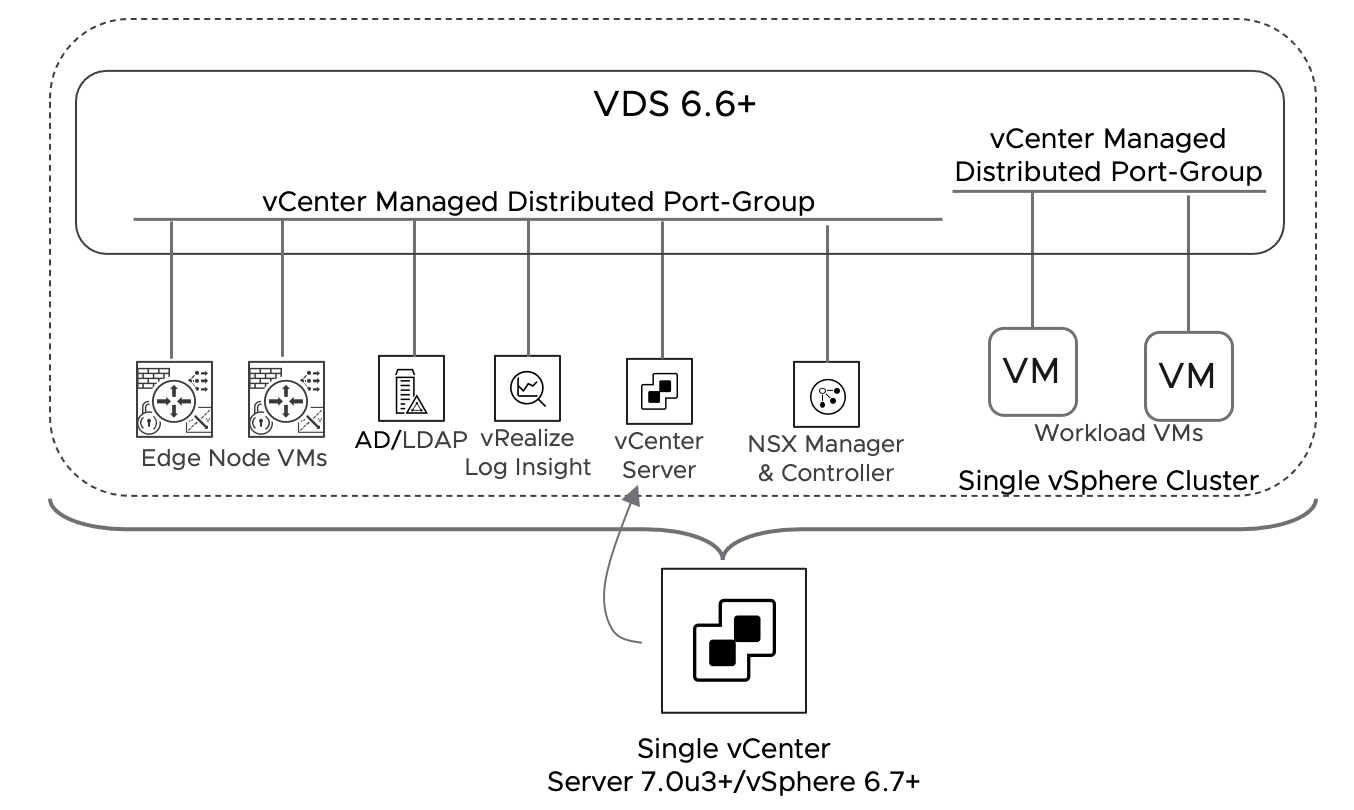

The vSphere with Tanzu deployment automated by the NAPP Automation Appliance leverages VDS networking and is the recommended approach for both the Simple Security for Applications and the Data Center in a Box use cases. A total of eight additional virtual machines are deployed on the target environment: the Tanzu supervisor cluster (3 VMs), an HA-Proxy load balancer VM, and the guest cluster hosting the NSX Application Platform (4 VMs).

Figure 6: NAPP deployment leveraging Tanzu on vSphere with VDS Networking – Automated via the NAPP Automation Appliance

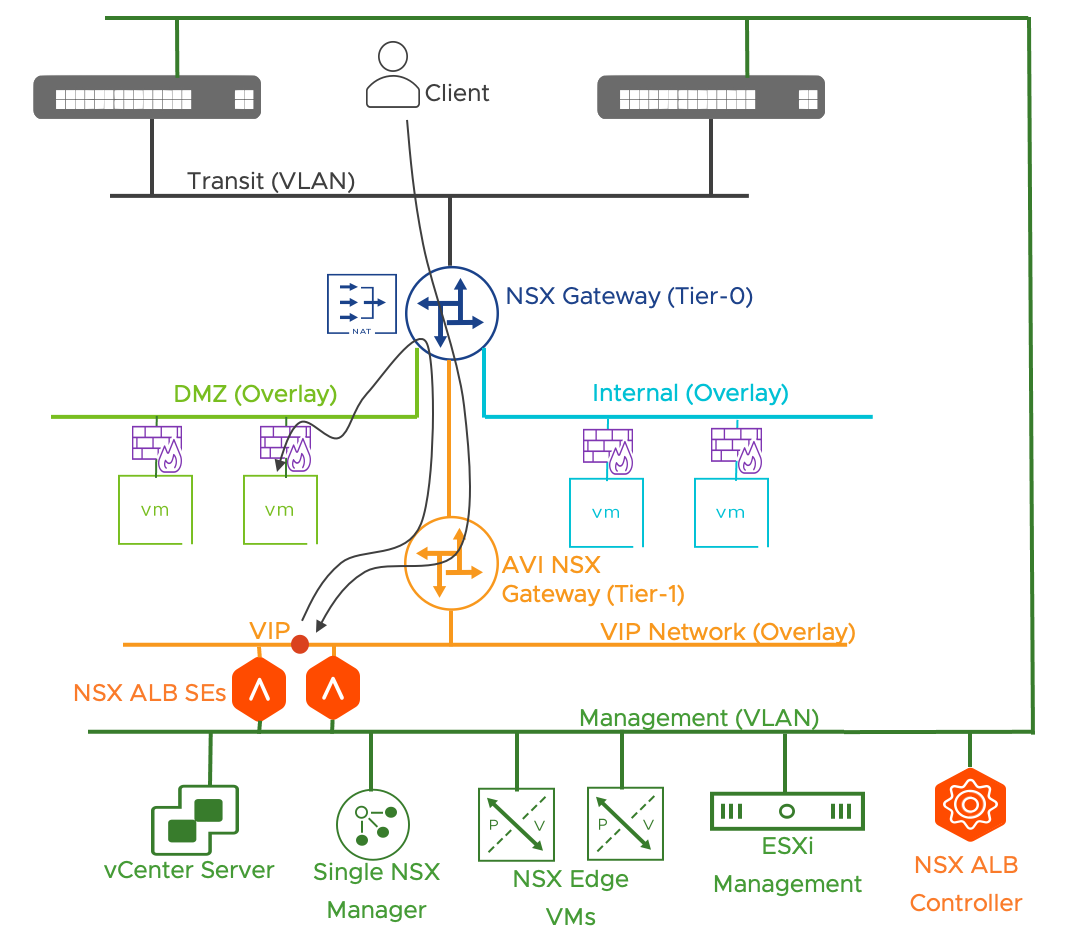

2.2.5 NSX Advanced Load Balancer (AVI) – Optional – Data Center in a Box Use Case only

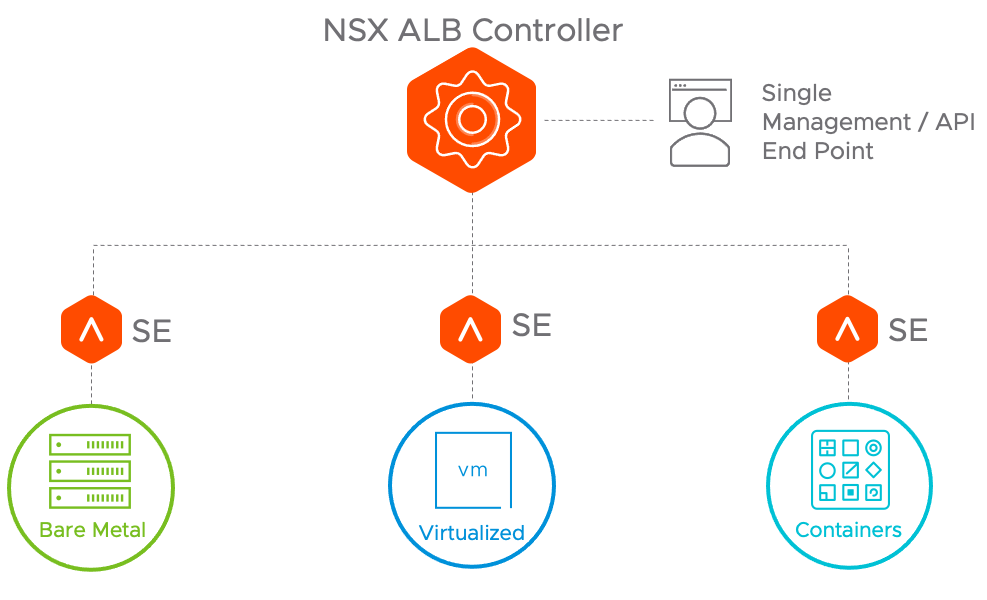

The NSX Advanced Load Balancer is built on software-defined principles. The architecture separates the data and control planes to deliver application services such as load balancing, application analytics, predictive autoscaling, and self-service for app owners. The platform provides a centrally managed, dynamic pool of load-balancing resources to deliver granular services close to the individual applications. This allows network services to scale out without the added complexity of managing hundreds of disparate appliances.

The NSX Advanced Load Balancer deployment for the DC in a Box use case incorporates two components: the Service Engines and the Controller.

Service Engine (SE)

Service Engines handle all data plane operations and execute instructions from the Controller. The SE performs load balancing and handles all client-facing and server-facing network interactions. It collects real-time application telemetry from application traffic flows and provides that information to the Controller. In the DC in a Box design, we provide high availability by deploying two SEs. Additional SEs can be added, manually or dynamically via autoscaling, if scale or traffic requirements demand it.
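NSX Advanced Load Balancer supports several load-balancing algorithms; round robin is used here purely for illustration. The following Python sketch is not how a Service Engine is implemented, and the class and member names are hypothetical. It shows how server health information, which the SEs collect, takes a failed server out of rotation.

```python
from __future__ import annotations

import itertools


class RoundRobinPool:
    """Round-robin over pool members, skipping members marked down by health checks."""

    def __init__(self, members: list[str]) -> None:
        self.members = list(members)
        self.healthy = set(self.members)
        self._cycle = itertools.cycle(self.members)

    def mark_down(self, member: str) -> None:
        self.healthy.discard(member)

    def pick(self) -> str:
        # One full pass over the cycle is enough to find a healthy member, if any.
        for _ in range(len(self.members)):
            member = next(self._cycle)
            if member in self.healthy:
                return member
        raise RuntimeError("no healthy pool members")


pool = RoundRobinPool(["srv-a", "srv-b", "srv-c"])
first_round = [pool.pick() for _ in range(3)]
pool.mark_down("srv-b")  # e.g. a failed health monitor
second_round = [pool.pick() for _ in range(3)]
print(first_round, second_round)
```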

Controller

The Controller is the single point of management and control that serves as the “brain” of the system. As its name implies, the Controller implements the control plane. Controllers continually exchange information securely with the SEs. The health of servers, client connection statistics, and client-request logs collected by the SEs are regularly offloaded to the Controllers, which process the logs and aggregate analytics. The Controllers also send commands down to the SEs, such as configuration changes. Controllers and SEs communicate over their management IP addresses.

The Controller hosts the NSX Advanced Load Balancer console, a modern web-based user interface to manage and monitor applications. All services provided by the platform are available as REST API calls to enable IT automation, developer self-service, and a variety of third-party integrations.

The general recommendation is to deploy a cluster of three NSX Advanced Load Balancer Controllers for high availability. However, to minimize resource consumption in a small deployment, the DC in a Box solution adopts a singleton deployment model instead. vSphere HA and backup/restore procedures are used to recover the single Controller in case of failure. The singleton deployment can be scaled out to a cluster of three Controllers if deemed necessary.

More information about the NSX Advanced Load Balancer Architecture can be found here.

Figure 7: NSX Advanced Load Balancer Architecture

2.3 Simple Security for Applications Overview

2.3.1 Definition of Simple Security for Applications

Applications today drive almost every company's revenue stream and its ability to evolve and react to market demands. When a company optimizes its operations, expands into a new market, or shifts the way it does business, these changes are accompanied by new application deployments or significant application updates. As reliance on these applications grows, so does the impact if they are compromised or fail.

Organizations need a security solution that keeps pace with the speed at which they introduce or modify applications while still protecting them. A software-defined security solution is the best way to achieve this. Through the simple security for applications use case, VMware NSX is the ideal solution to address both simple and complex security needs.

In the simple security for applications use case, the primary objective is to provide a robust security solution for one or more applications based on the tried-and-true zone-based security principles still prevalent in corporate IT environments. While this use case applies to environments of any size or type, this solution is focused on an environment that fits into the following classification:

- Brownfield: applications are already running on the existing infrastructure.

- Flexible footprint: deployments of two (2) to fifty (50) hosts

- < 1000 Virtual Machines and most likely < 500 Virtual Machines

- Existing single vCenter, single cluster environment running vSphere 6.7 or later and VDS 6.6 or later

- Primary Routing and Switching is owned by the Physical Network and not the Software Defined Networking solution

The simple security for application solution requires the following NSX components:

- A single NSX Manager appliance running NSX version 3.2.1 or later

- NSX installation on the ESXi hosts, orchestrated by NSX Manager

An optional additional component is the NSX Application Platform (NAPP), deployed via the NAPP Automation Appliance. The NAPP deployment includes:

- 3 Tanzu supervisor cluster control plane VMs

- 4 Guest cluster Kubernetes nodes

- 1 HA-Proxy Load Balancer virtual machine

The simple security for application solution does NOT require the following NSX components:

- Edge node VMs

- Network overlay

The simple security for applications solution is agnostic to the physical network topology: it can be deployed on any physical fabric type and does not require any change to, or configuration of, the physical equipment. Specifically, the simple security for applications solution does NOT require:

- Changing the MTU

- Creating new VLANs (unless the deployment includes the recommended NSX Application Platform, which requires two new routable VLANs)

- Reconfiguring IP routing

- Migrating default gateways

As the environment grows up to the intended maximum of fifty hosts, the solution absorbs that growth without detrimental effects on the protected workloads. Beyond this scale, the solution design may stay the same, but the resources it requires may change.

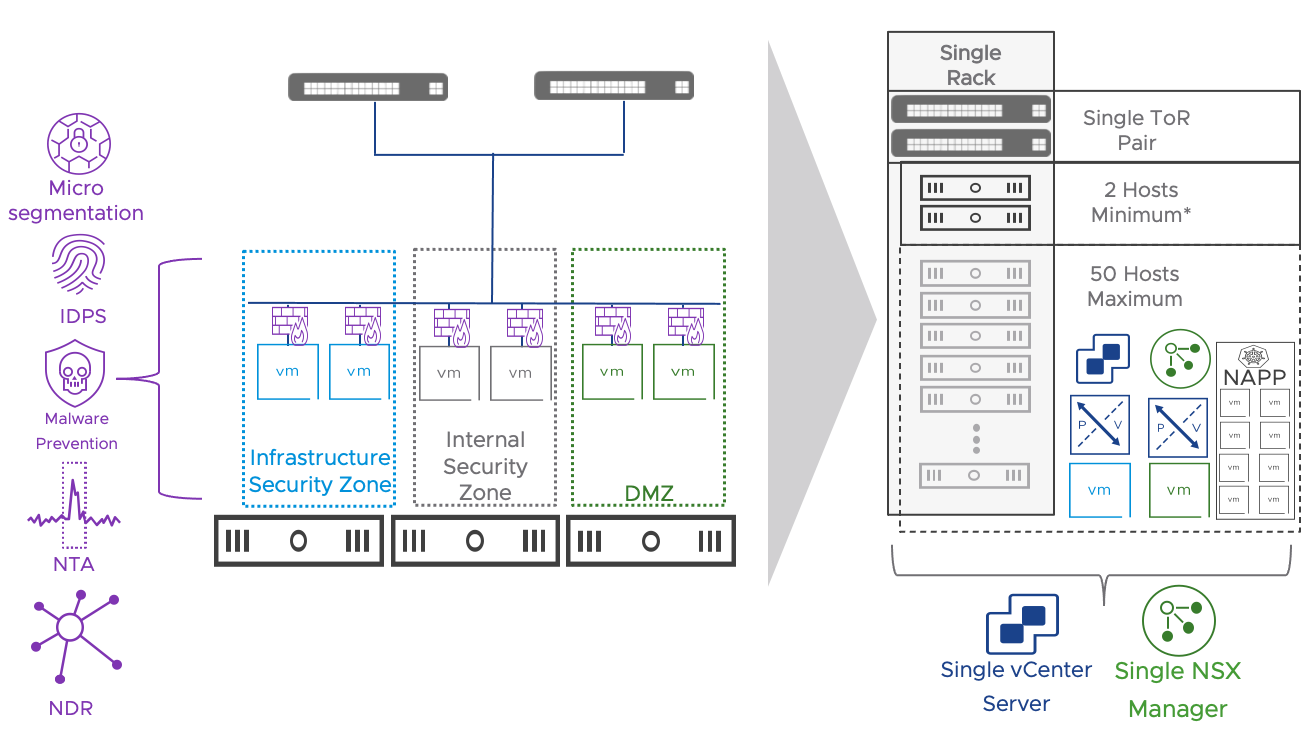

Figure 8: Simple Security Use Case Architecture and Features

Figure 9: Simple Security Use Case Architecture and Features when the NSX Application Platform (NAPP) is deployed

2.3.2 Value to the Security Administrator

For an engineer with responsibility for security, incorporating a simplified Software-Defined Security solution is a game-changer. The engineer can now define security standards and apply them to the existing infrastructure regardless of the network topology implemented in the brownfield environment. In situations where the organization did not implement zone-based segmentation when building the virtualized infrastructure or when the security requirements changed over time, the security engineer can now provide the required segmentation without any dependency or coordination with the physical network.

In environments in the upper half of the range from a scale perspective (i.e., 25+ hosts), procuring hardware appliances with an adequate set of performance and functionalities can be costly. Purchasing decisions based on cost may hinder the environment's future growth. The NSX security model is fully distributed. Security functionalities are implemented leveraging unused CPU and memory resources on the workload compute infrastructure. Scaling the compute infrastructure will automatically scale the performance of the security services.

Another significant benefit concerns audit and operations. With this solution, the engineer can test and validate rules through simplified, centralized logging. Rules can carry unique custom identifiers, which are visible in vRealize Log Insight or any other log analysis tool integrated through Syslog. The engineer can troubleshoot rules using the Traceflow tool integrated into NSX Manager and can quickly implement emergency rules while seeing their effect on the application rules below them.
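Because the Distributed Firewall evaluates rules top-down and the first match wins, an emergency rule placed above the application rules takes effect immediately. This Python sketch assumes the layer-3 category order Emergency, Infrastructure, Environment, Application and a configurable default action. The rule format and the `label` field, standing in for the custom rule identifier that appears in logs, are hypothetical.

```python
from __future__ import annotations

# Evaluation order of the DFW layer-3 policy categories (first match wins).
CATEGORY_ORDER = ["Emergency", "Infrastructure", "Environment", "Application"]


def evaluate(flow: dict, rules: list[dict], default_action: str = "ALLOW") -> tuple[str, str]:
    """Return (action, label) of the first matching rule, honoring category order."""
    ordered = sorted(rules, key=lambda r: (CATEGORY_ORDER.index(r["category"]), r["seq"]))
    for r in ordered:
        if all(r[k] in ("ANY", flow[k]) for k in ("src", "dst", "port")):
            return r["action"], r["label"]
    return default_action, "default-rule"


RULES = [
    {"category": "Application", "seq": 10, "src": "web", "dst": "app", "port": 8443,
     "action": "ALLOW", "label": "APP-WEB-TO-APP"},
    {"category": "Emergency", "seq": 1, "src": "ANY", "dst": "app", "port": "ANY",
     "action": "DROP", "label": "EMERG-QUARANTINE-APP"},
]

flow = {"src": "web", "dst": "app", "port": 8443}
with_emergency = evaluate(flow, RULES)
without_emergency = evaluate(flow, [r for r in RULES if r["category"] != "Emergency"])
print(with_emergency, without_emergency)
```

The label returned with each decision is what makes the matching rule easy to find in centralized logs.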

If NAPP is included as part of the solution, the security administrator gains access to the NSX Intelligence analytics and visualization capabilities. Those functionalities greatly facilitate the adoption of the NSX Distributed Firewall by providing application topology discovery and visualization, and security policy recommendations.

2.3.3 Value to the Virtual Infrastructure Administrator

The Simple Security for Applications use case makes the Virtual Infrastructure Administrator’s (VI Admin) job more manageable. Security policies are applied to the individual VM and are retained regardless of where the VM is deployed or moved. The modification or installation of agents on virtual machines is unnecessary because all the security functionalities are implemented on the underlying hypervisor. Enabling those capabilities does not require rebooting or placing the hosts in maintenance mode.

The ability to use policies based on tags and VM properties enables the VI Admin to leverage automation tools to deploy new workloads that inherit a level of security without direct interaction with the security team. The same approach works with a home-grown script, supported automation tools such as Ansible and Terraform, or end-to-end automation solutions such as vRealize Automation.
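As an illustration of tag-driven automation, the following Python sketch only builds request bodies and prints them; it does not contact NSX Manager. It assumes NSX Policy API paths of the form `/policy/api/v1/infra/domains/default/groups/<id>` and `/policy/api/v1/infra/domains/default/security-policies/<id>` used with `PATCH`, a tag condition expressed as `scope|tag`, the predefined service path `/infra/services/HTTPS`, and that a rule's `scope` field holds its Apply-To groups. Verify every field against the NSX API reference for your version before using anything similar.

```python
from __future__ import annotations

import json

DOMAIN = "default"  # assumption: the default NSX Policy domain
GROUPS = f"/infra/domains/{DOMAIN}/groups"


def tag_group(group_id: str, scope: str, tag: str) -> tuple[str, dict]:
    """Group whose members are all VMs carrying the tag scope|tag."""
    body = {
        "display_name": group_id,
        "expression": [{
            "resource_type": "Condition",
            "member_type": "VirtualMachine",
            "key": "Tag",
            "operator": "EQUALS",
            "value": f"{scope}|{tag}",
        }],
    }
    return f"/policy/api/v1{GROUPS}/{group_id}", body


def allow_policy(policy_id: str, src: str, dst: str, service: str) -> tuple[str, dict]:
    """Application-category policy with one rule allowing src -> dst on a service."""
    body = {
        "display_name": policy_id,
        "category": "Application",
        "rules": [{
            "resource_type": "Rule",
            "id": f"{src}-to-{dst}",
            "display_name": f"{src} to {dst}",
            "source_groups": [f"{GROUPS}/{src}"],
            "destination_groups": [f"{GROUPS}/{dst}"],
            "services": [f"/infra/services/{service}"],
            "scope": [f"{GROUPS}/{dst}"],  # Apply-To: program only on dst members
            "action": "ALLOW",
            "sequence_number": 10,
        }],
    }
    return f"/policy/api/v1/infra/domains/{DOMAIN}/security-policies/{policy_id}", body


for path, body in (tag_group("web", "tier", "web"),
                   tag_group("app", "tier", "app"),
                   allow_policy("web-app", "web", "app", "HTTPS")):
    print("PATCH", path)
    print(json.dumps(body, indent=2))
```

Once such a policy exists, a new VM only needs the `tier|web` or `tier|app` tag at deployment time to inherit the rule; no security change is required.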

2.3.4 Role of NSX in the Simple Security Use Case

NSX provides robust security capabilities that the security engineer can implement with no changes to the existing network environment. This is not possible with a traditional security solution or a physical appliance as they must sit in line with the traffic.

Here are a few of the capabilities NSX provides in the context of the simplified security use case enabling a straightforward way of securing an environment:

- Comprehensive L4-L7 distributed firewalling that protects workloads transparently to both the workload and the physical network

- A high-level, human-readable language for defining and implementing security rules and policies

- Integrated audit and troubleshooting tools for fine-tuning security policies and rule sets

- Access to more advanced security capabilities, such as IDS/IPS, without redeploying or rearchitecting the simplified security solution whenever the organization identifies a need for them

The distributed security functionalities that are part of the solution are summarized in the table below.

| Functionality | License | NAPP Required |
| --- | --- | --- |
| L2 – L4 firewalling | Distributed Firewall | NO |
| L7 Application Identity based firewalling | Distributed Firewall | NO |
| User Identity based firewalling | Distributed Firewall | NO |
| NSX Intelligence (flow visualization, policy recommendation) | Distributed Firewall | YES |
| IDS/IPS (Signature and behavioral based) | Distributed Firewall + Threat Prevention | NO |
| Network Traffic Analysis | Distributed Firewall + Advanced Threat Prevention | YES |
| Network Malware Prevention | Distributed Firewall + Advanced Threat Prevention | YES |
| Network Detection & Response | Distributed Firewall + Advanced Threat Prevention | YES |

Table 1: Security Features part of the Simple Security for Applications Use Case
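Table 1 can also be read as a lookup: given the licenses held and whether NAPP is deployed, which features are usable. This Python sketch encodes the table literally; the feature keys are shortened names, and whether one license tier includes another is deliberately not modeled.

```python
from __future__ import annotations

DFW = "Distributed Firewall"
TP = "Threat Prevention"
ATP = "Advanced Threat Prevention"

# Table 1 as data: feature -> (licenses listed in the table, NAPP required?)
FEATURES = {
    "L2-L4 firewalling": ({DFW}, False),
    "L7 Application Identity based firewalling": ({DFW}, False),
    "User Identity based firewalling": ({DFW}, False),
    "NSX Intelligence": ({DFW}, True),
    "IDS/IPS": ({DFW, TP}, False),
    "Network Traffic Analysis": ({DFW, ATP}, True),
    "Network Malware Prevention": ({DFW, ATP}, True),
    "Network Detection & Response": ({DFW, ATP}, True),
}


def available_features(licenses: set[str], napp_deployed: bool) -> list[str]:
    """Features usable with the given licenses and NAPP state, per Table 1."""
    return [
        name
        for name, (needed, needs_napp) in FEATURES.items()
        if needed <= licenses and (napp_deployed or not needs_napp)
    ]


print(available_features({DFW, TP}, napp_deployed=False))
```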

2.3.5 Extending the Simple Security Use Case

2.3.5.1 Multiple vSphere Clusters

Extending the simple security use case to a multi-cluster vSphere environment is completely transparent and does not require any additional design considerations. If the appropriate vSphere and VDS versions are available (6.7+ and 6.6+, respectively), the simple security for applications use case can be implemented following the same approach described for a single collapsed cluster environment. Single or multiple VDSs are supported within the same vCenter Server deployment.

2.3.5.2 Multiple vCenter servers

The simple security for applications use case can be extended to environments encompassing multiple vCenter Servers. NSX Manager provides a single point of configuration and operations across the different compute managers. In this case, the only limitation to consider is that the vSphere plug-in for NSX cannot be leveraged: security policies must be managed directly in the NSX GUI rather than embedded in the vSphere Client for a unified experience.

2.4 Datacenter in a Box Overview

2.4.1 Definition of the Datacenter in a Box

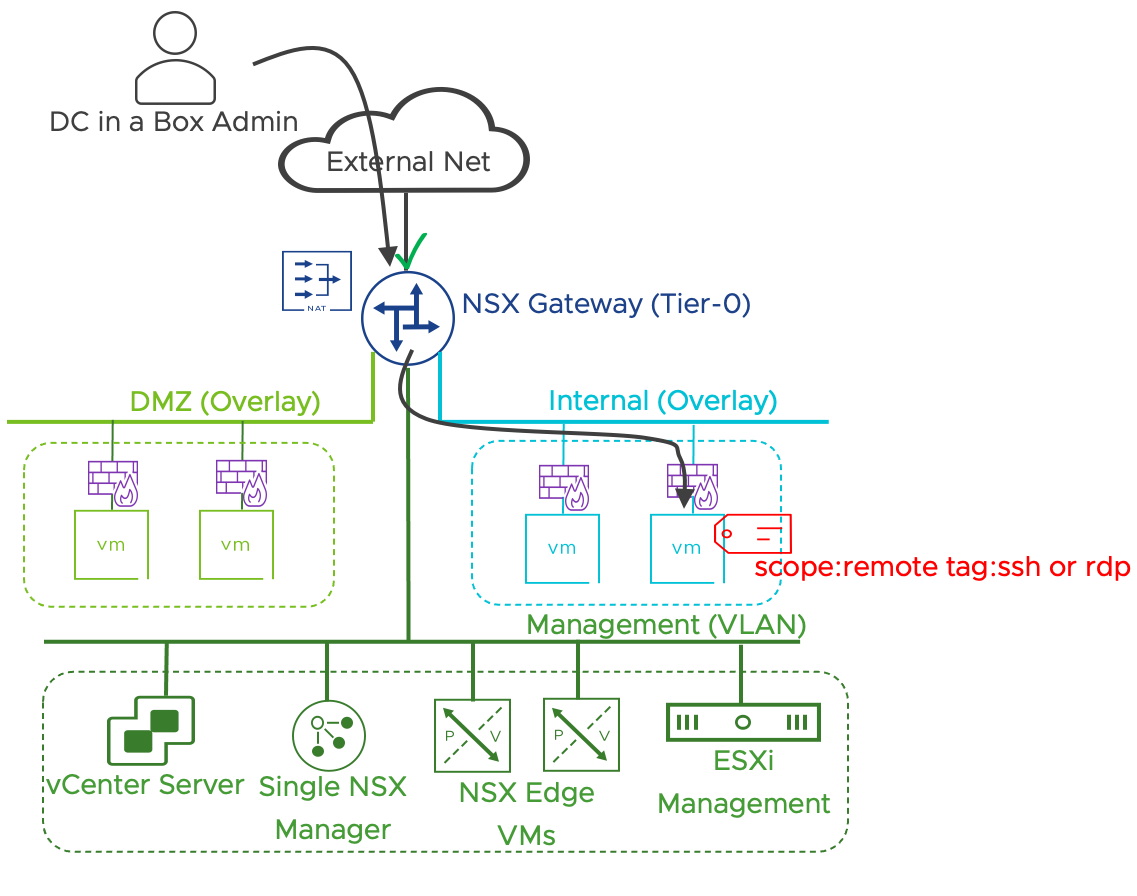

We designed the Data Center in a Box solution to create an easily deployable, self-contained environment with as few outside dependencies as possible while providing a full-featured environment. This solution draws a hard line between the physical infrastructure (potentially a hosting provider environment) and the customer-controlled software-defined environment created by vSphere and NSX. This solution focuses on an environment with the following characteristics:

- Greenfield: brand new installation.

- Relatively small, two (2) to fifty (50) host deployment

- < 1000 Virtual Machines and most likely < 500 Virtual Machines

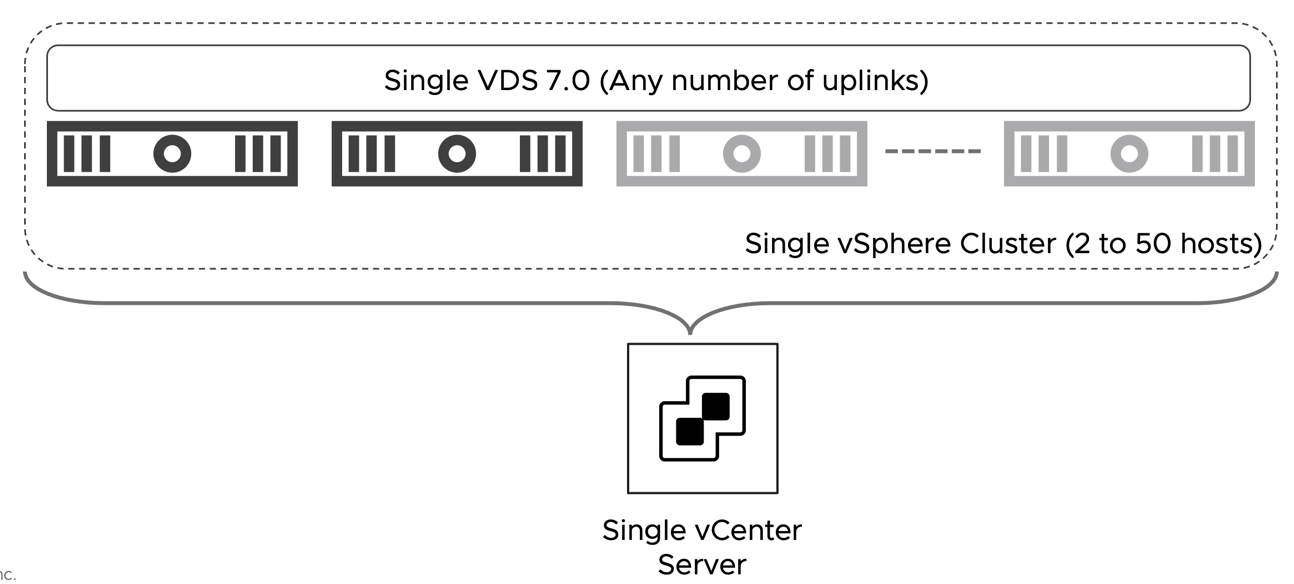

- Single vCenter, single cluster environment running vSphere 7.0 and VDS 7.0

- Primary Routing and Switching is owned by the Software Defined Networking solution

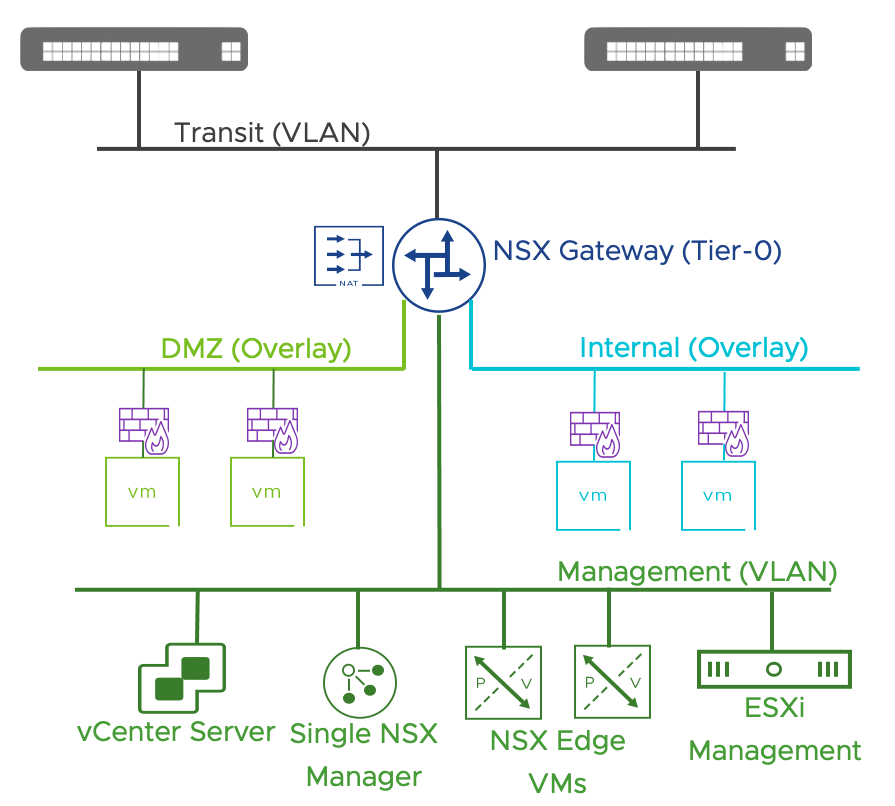

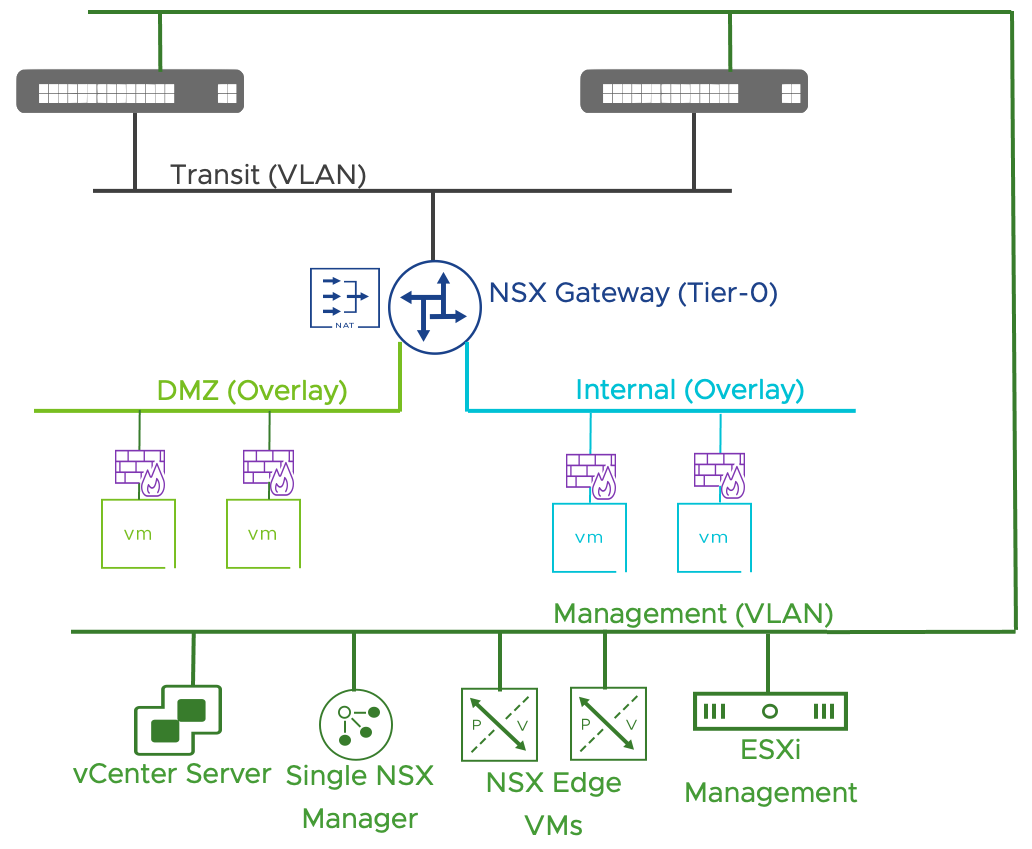

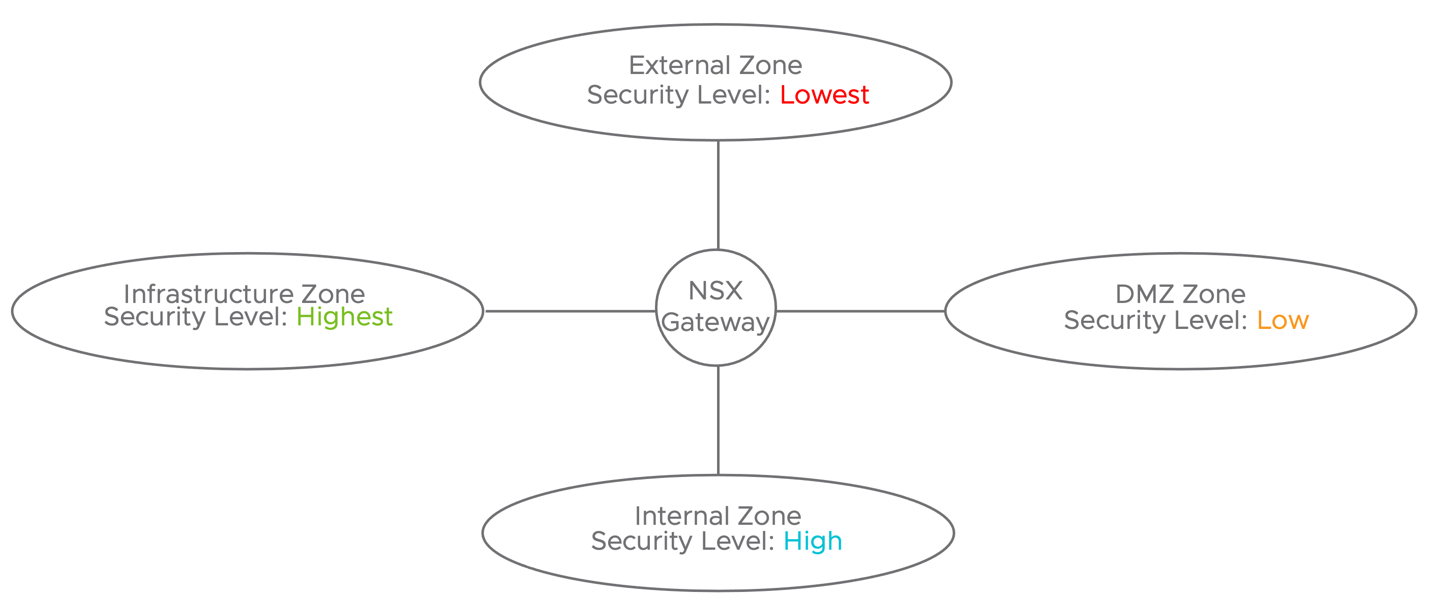

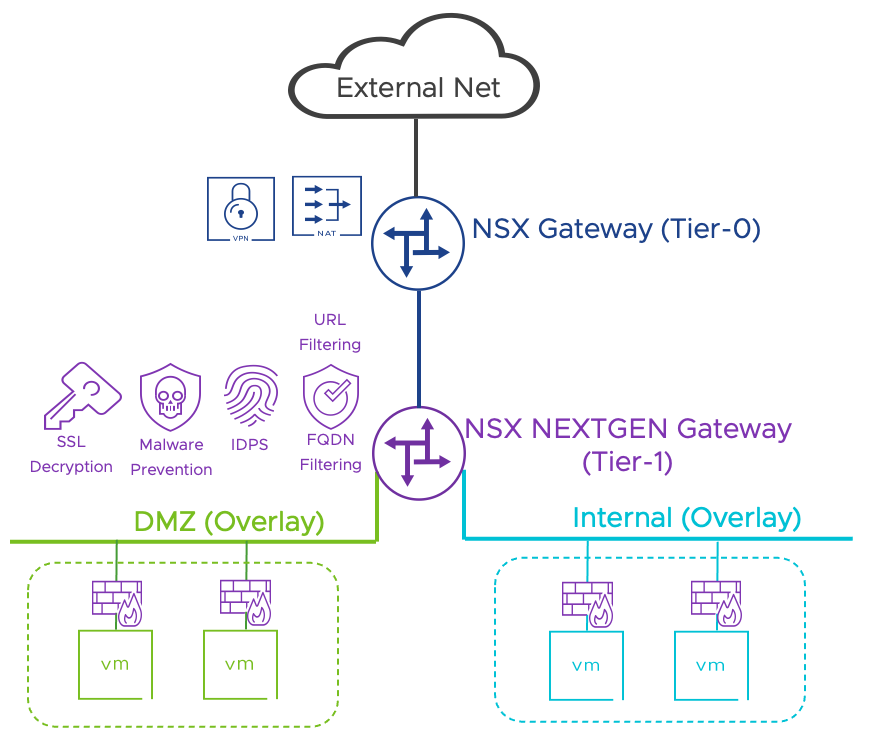

In its most basic implementation, the DC in a Box architecture includes a pair of NSX Edge VMs providing networking and security services. Workloads are placed on overlay networks that can be provisioned independently of the physical network configuration. The entire virtual topology is hidden behind a NAT boundary, minimizing external network dependencies. Distributed security services are available to the virtual machines on overlay segments and can be supplemented by those provided by the NSX Next Generation Gateway Firewall running on the NSX Edges.
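The NAT boundary can be illustrated with a many-to-one source NAT model. This Python sketch is a generic illustration, not the NSX Gateway implementation; the internal CIDR, the documentation-range external IP 203.0.113.10, and the sequential port allocation are assumptions. It shows that the external network only sees one address, so the internal overlay IP schema never needs to be known or routed outside.

```python
from __future__ import annotations

import ipaddress
import itertools


class SnatTable:
    """Many-to-one source NAT: internal overlay addresses share one external IP."""

    def __init__(self, internal_cidr: str, external_ip: str, first_port: int = 1024) -> None:
        self.internal = ipaddress.ip_network(internal_cidr)
        self.external_ip = external_ip
        self._ports = itertools.count(first_port)  # no port-exhaustion handling
        self.sessions: dict[tuple[str, int], int] = {}

    def translate(self, src_ip: str, src_port: int) -> tuple[str, int]:
        """Return the (ip, port) the external network sees for an outbound flow."""
        if ipaddress.ip_address(src_ip) not in self.internal:
            return src_ip, src_port  # outside the NAT rule: left untouched
        key = (src_ip, src_port)
        if key not in self.sessions:
            self.sessions[key] = next(self._ports)
        return self.external_ip, self.sessions[key]


nat = SnatTable("10.1.0.0/16", "203.0.113.10")
web_flow = nat.translate("10.1.1.11", 50000)
app_flow = nat.translate("10.1.2.21", 50000)
print(web_flow, app_flow)  # ('203.0.113.10', 1024) ('203.0.113.10', 1025)
```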

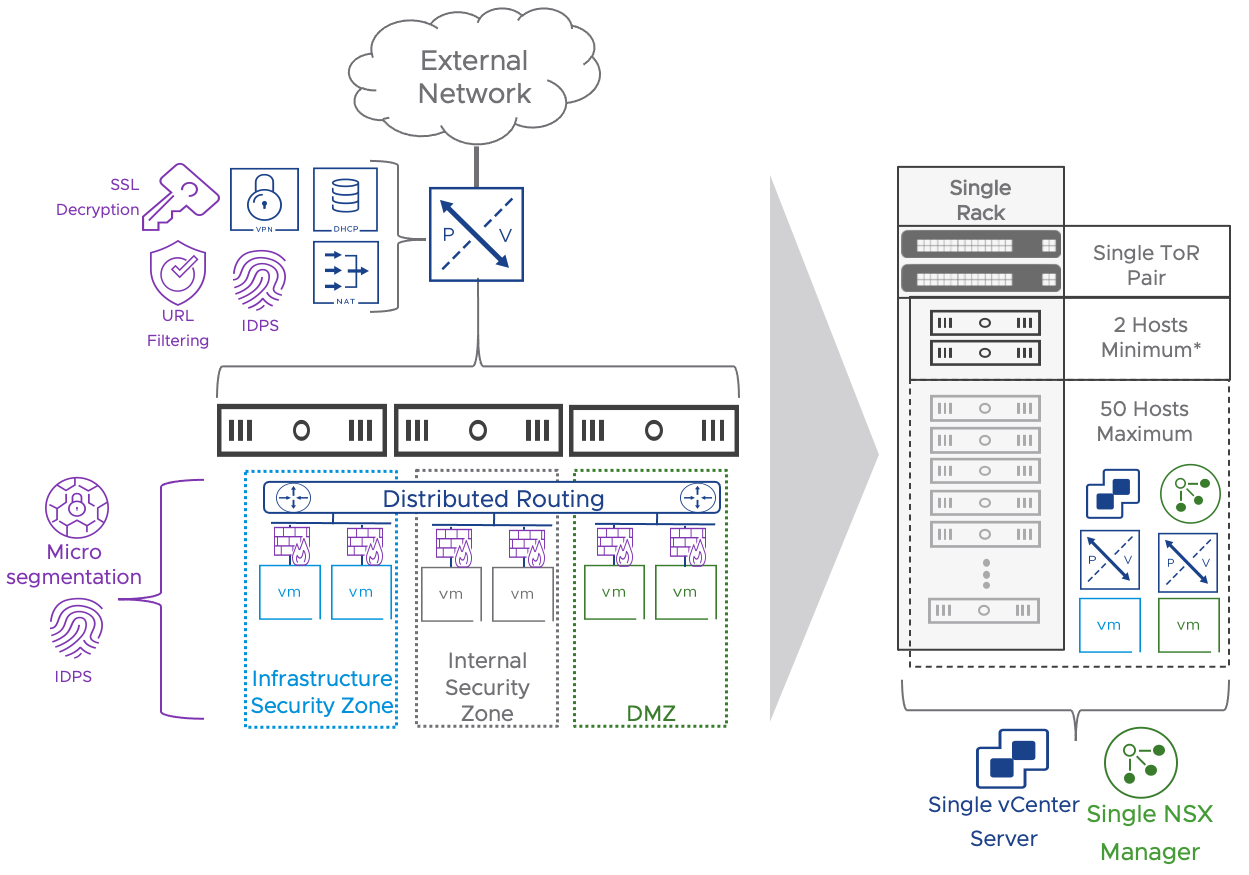

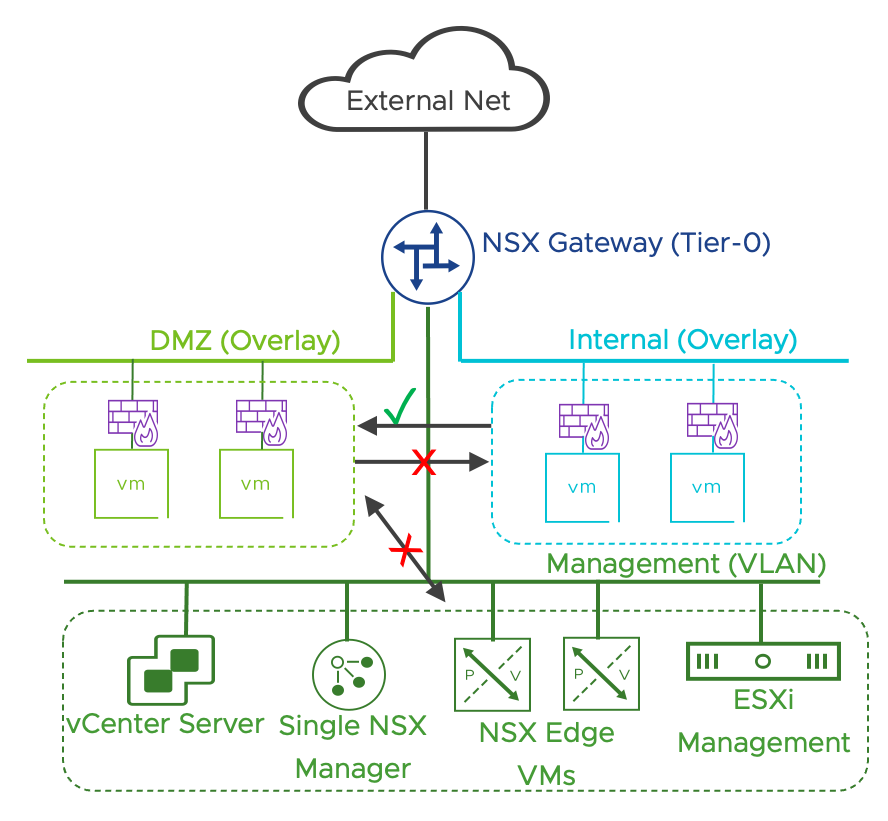

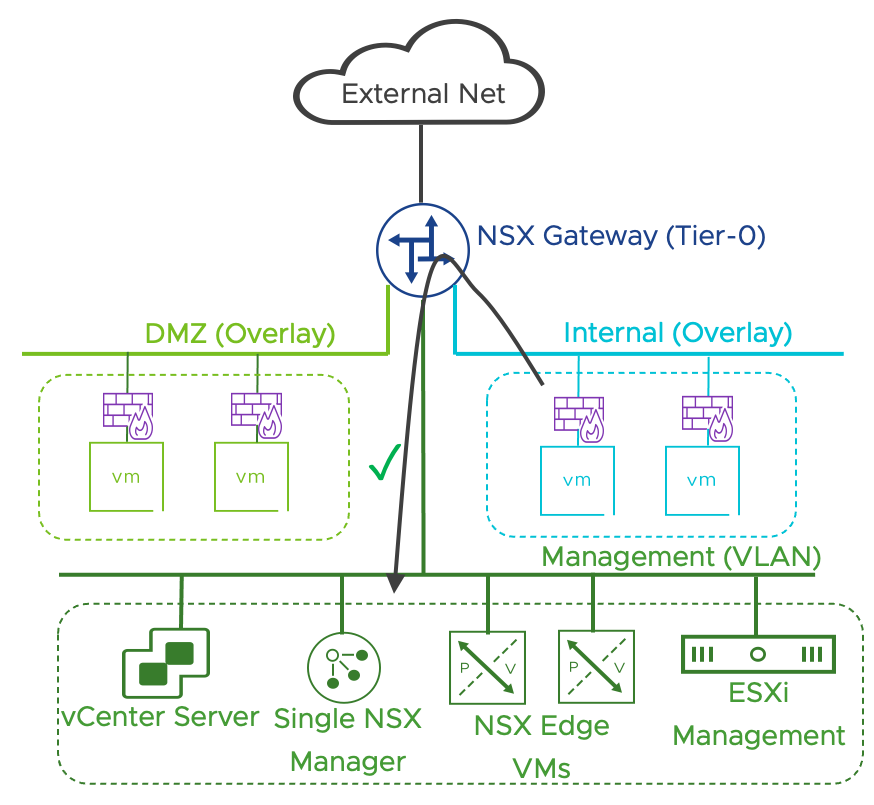

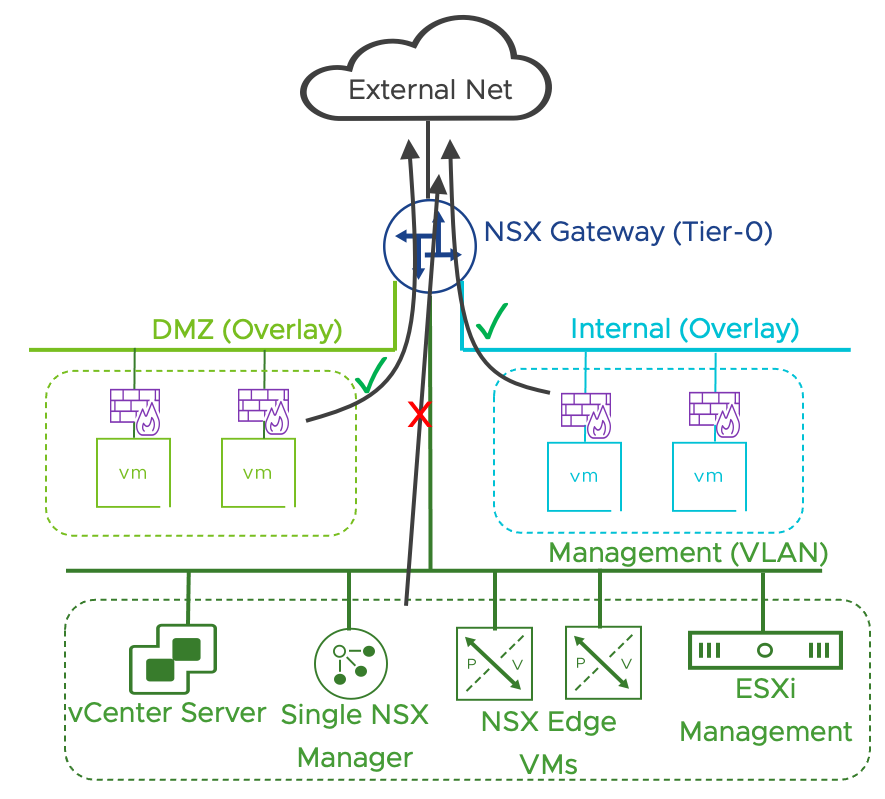

Figure 10: Datacenter in a Box Use Case Architecture and Features

When the NSX Application Platform is deployed, additional capabilities are unlocked. They include:

- NSX Intelligence (Application topology discovery and visualization, security policy recommendation)

- NSX Malware Prevention

- Network Traffic Analysis

- NSX Network Detection and Response

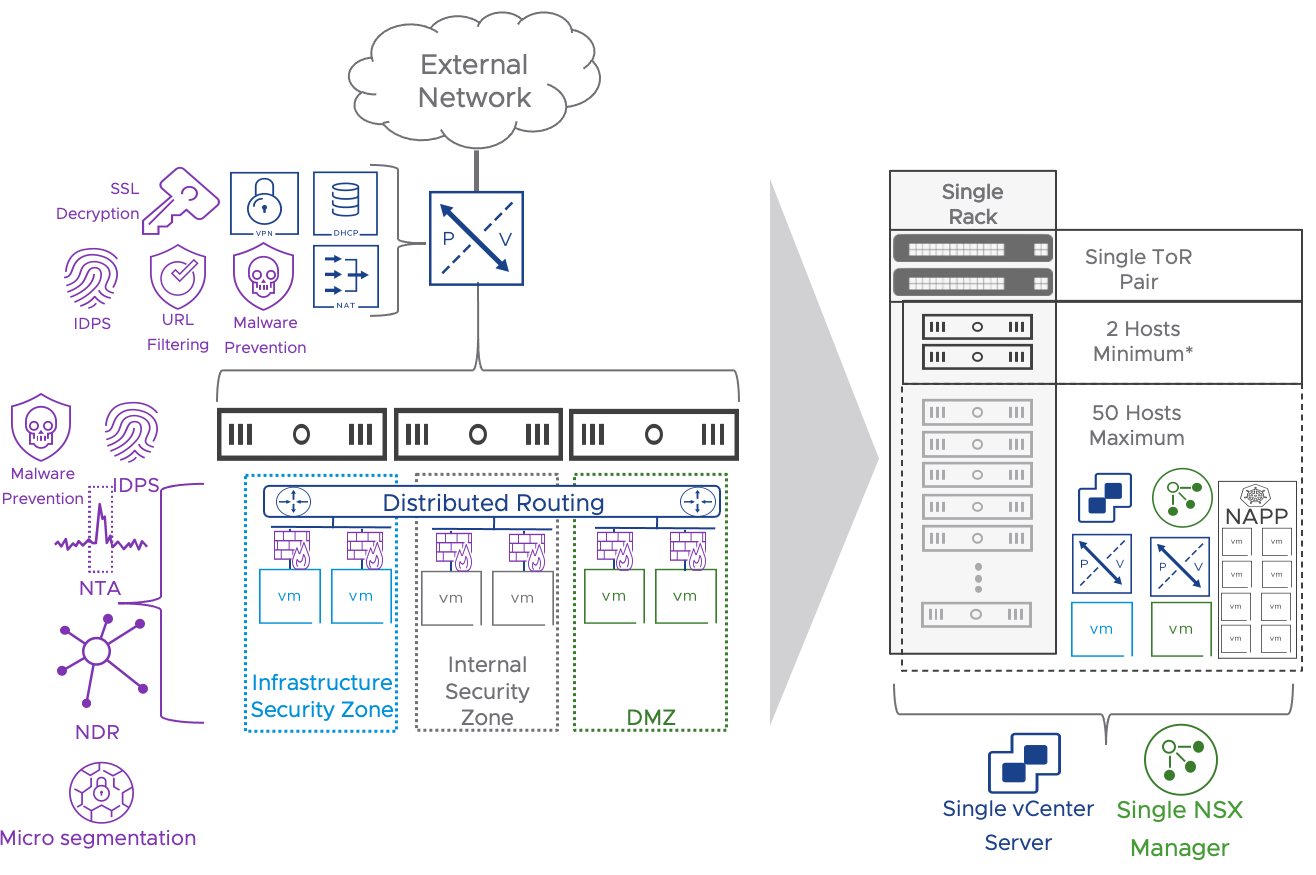

Figure 11: Datacenter in a Box Use Case Architecture and Features when adding NAPP

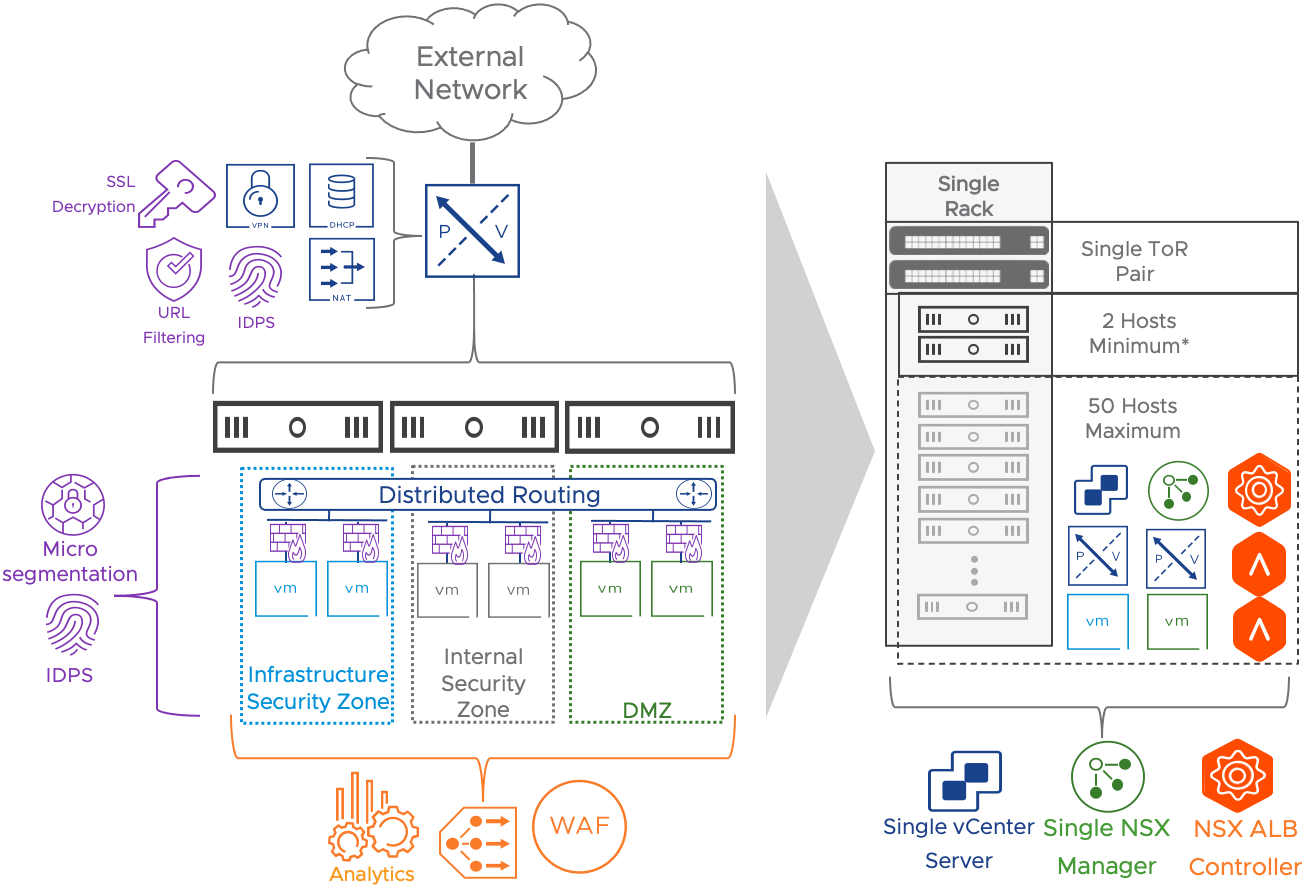

When the deployment requires load balancing services, the NSX Advanced Load Balancer is deployed and integrated with NSX based on the model described here. A single NSX ALB Controller and a pair of SEs are added to the design.

Figure 12: Datacenter in a Box Use Case Architecture and Features when adding NSX Advanced Load Balancer

The networking and security functionalities that are part of the solution are summarized in the table below. All the distributed security services available for the simple security for applications use case are optionally available for the DC in a Box use case; they are not repeated here.

| Functionality | Availability | Notes |

| Network Virtualization | Out-of-the-box | Layer 2 and layer 3 network virtualization is provided by the NSX Gateway and Geneve overlay networks. |

| L4 Gateway Firewall | Out-of-the-box | The NSX Gateway provides edge security functionalities and protects the DC in the Box from the external network. |

| Network Address Translation (NAT) | Out-of-the-box | The NSX Gateway hides the internal IP schema of the DC in a box. This approach minimizes physical network dependencies. |

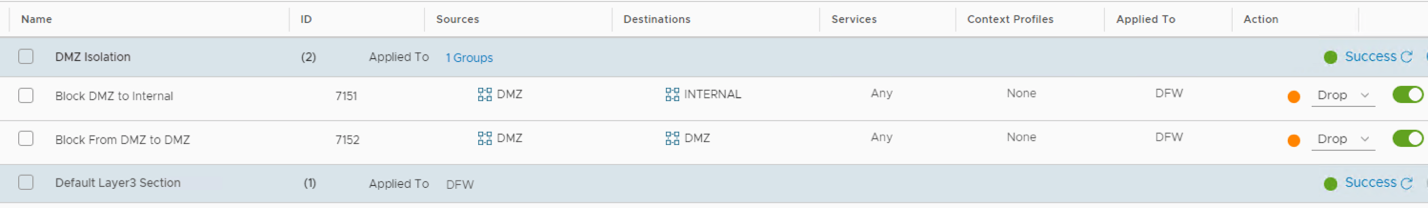

| L4 Zone Based Segmentation | Out-of-the-box | Two security zones are implemented via distributed firewall: DMZ and Internal. |

| Site to Site VPNs (Optional) | Post Deployment - Manual Implementation | L2 and L3 VPNs can be implemented on the NSX Gateway. |

| L4 Application Micro-segmentation (Optional) | Post Deployment - Manual Implementation | The administrator can implement a zero-trust security approach for critical applications leveraging NSX Distributed Firewall. |

| L7 Distributed Next Generation Firewall (Optional) | Post Deployment - Manual Implementation | The administrator can add AppID, FQDN and Identity Based firewall functionalities via the NSX Distributed Firewall. |

| Distributed IDS/IPS (Optional) | Post Deployment - Manual Implementation | Distributed IDS/IPS are available to any workload without any redesign. |

| L7 Next Generation Gateway Firewall (Optional) | Post Deployment - Manual Implementation | The administrator can add AppID, FQDN and Identity Based firewall functionalities via the NSX Gateway Firewall. |

| NSX Gateway URL Filtering (Optional) | Post Deployment - Manual Implementation | The administrator can create access rules based on predefined URL categories and reputation levels. Custom URLs are supported. TLS decryption is required to enforce URL filtering on TLS traffic. |

| NSX Gateway Network Sandboxing - Malware prevention (Optional) | Post Deployment - Manual Implementation | NAPP is required. TLS decryption is required to provide the service to encrypted traffic. |

| NSX Gateway IDS/IPS (Optional) | Post Deployment - Manual Implementation | IDS/IPS rules can be configured on the NSX Gateway. Combined with TLS decryption it provides protection for encrypted traffic (Not available for the distributed IDS/IPS) |

| NSX Gateway TLS Decryption | Post Deployment - Manual Implementation | Extend advanced security features to encrypted traffic. |

| Load Balancing + WAF + Analytics (Optional) | Post Deployment - Manual Implementation | The administrator can add Load Balancing capabilities by implementing the native NSX load balancer or by deploying the NSX Advanced Load Balancer (Preferred). Link to the NSX-T AVI Integration. |

Table 2: Features included in the DC in a Box Use Case

2.4.2 Datacenter in a Box Use Cases and Business Drivers

The following sub-sections cover the business drivers and the use cases of the DC in a Box solution.

2.4.2.1 Adopting a Cloud Consumption Model with Data Center in a Box

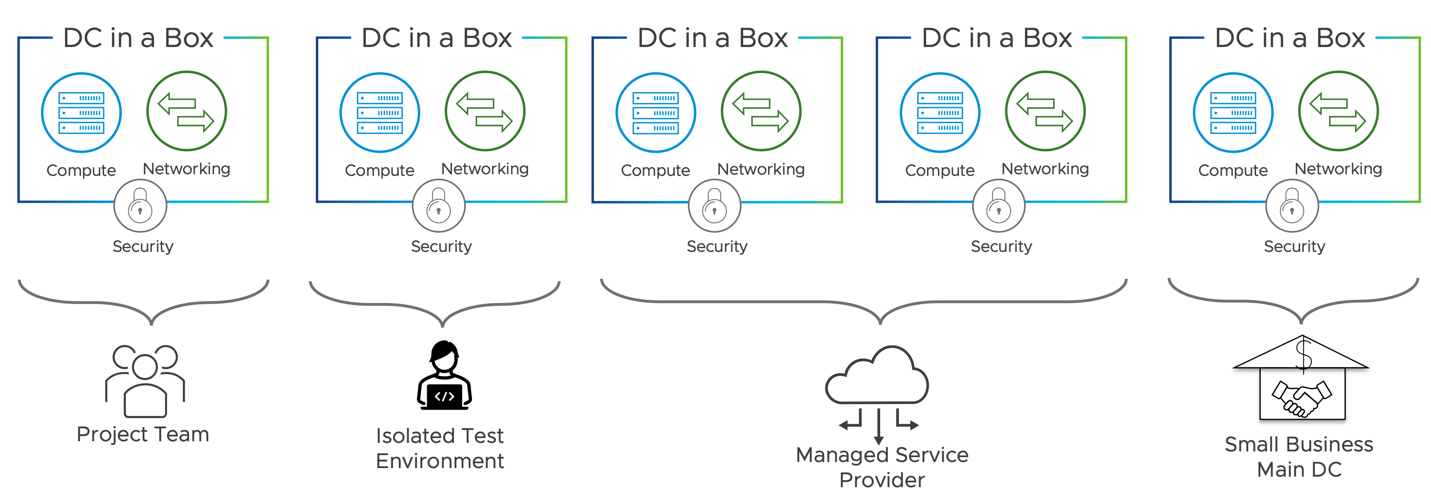

With the advent of public cloud infrastructure services, internal IT customers have significantly more choices about how and where they consume the entire stack. This use case allows the internal IT organization to leverage software-defined networking, security, and services (NAT, FW, VPN, and LB) to create comparable self-contained environments. This design pattern addresses a variety of scenarios. These include small businesses or small footprints, the consolidation of fragmented environments, the support and life cycle of various services (e.g., service appliances), and service providers offering hosted or managed services. Admins can use the small-footprint solution proposed in this design for separate or combined production/QA/test environments with a full stack of services. For example, QA or test environments may be self-contained from a compute perspective and deployed with overlapping IP addresses. NAT and the DFW can isolate the different tenants while they share common compute resources.
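The sketch below is a conceptual Python illustration of how NAT keeps overlapping tenant address spaces apart. It maps each (tenant, internal subnet) pair to its own external source address. It models only the address translation. In an actual deployment, overlapping subnets would also need separate routing contexts, for example one Tier-1 gateway per tenant. The tenant names, subnets, and external addresses are hypothetical values taken from documentation ranges.

```python
"""Conceptual sketch of SNAT for tenants with overlapping internal subnets.

Tenant names, subnets, and external addresses are hypothetical.
"""
from __future__ import annotations

from ipaddress import IPv4Address, IPv4Network

# Hypothetical SNAT rules: (tenant, internal subnet) -> external source IP.
SNAT_RULES = {
    ("qa", IPv4Network("10.0.0.0/24")): IPv4Address("203.0.113.10"),
    ("test", IPv4Network("10.0.0.0/24")): IPv4Address("203.0.113.20"),
}


def translate_source(tenant: str, src: IPv4Address) -> IPv4Address:
    """Return the external source address for a packet leaving `tenant`."""
    for (rule_tenant, subnet), external in SNAT_RULES.items():
        if rule_tenant == tenant and src in subnet:
            return external
    raise LookupError(f"no SNAT rule matches {tenant}/{src}")


# Both tenants use 10.0.0.5 internally but appear as different external hosts.
qa_ip = translate_source("qa", IPv4Address("10.0.0.5"))
test_ip = translate_source("test", IPv4Address("10.0.0.5"))
print(qa_ip, test_ip)  # 203.0.113.10 203.0.113.20
```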

A managed service provider may need to quickly deploy a new, independent environment for a new customer in its data center.

In some cases, the managed service provider is the IT organization itself. It may not have infrastructure ready for consumption to support ad hoc departmental initiatives, and it must rely on the quick deployment and consumption of ad hoc resources.

Figure 13: DC in a Box Independent Units of Compute

2.4.2.2 Appliance Sprawl

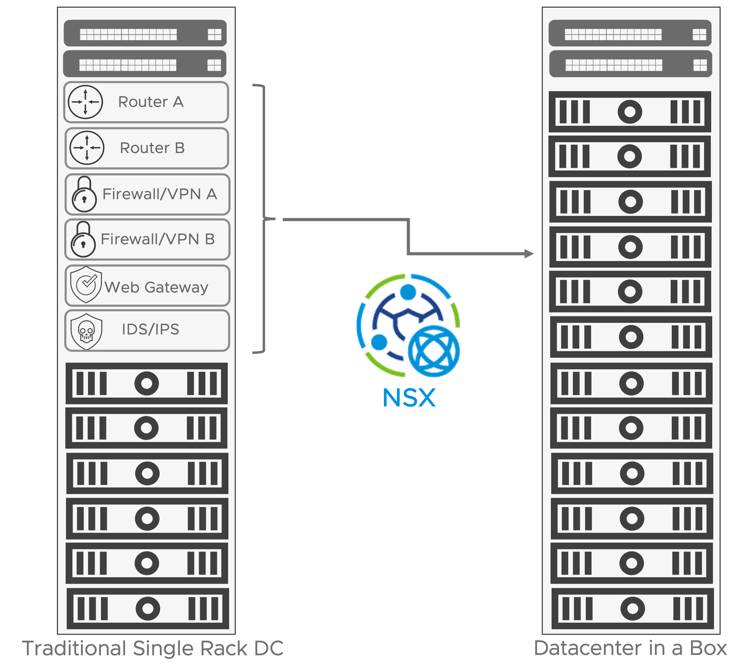

One of the challenges of operating an infrastructure based on independent pods of resources is the overhead that networking and security impose in terms of rack space, power, and cooling. A traditional independent datacenter rack often has between 10 and 30 rack units dedicated to networking and security appliances. Those appliances may include top-of-rack switches, routers for Internet or WAN connectivity, and VPN-capable firewall appliances. In some cases, the security stack expands to dedicated web gateway, intrusion prevention, load balancer, and web application firewall appliances. Dedicating so many resources to networking and security in a small-footprint solution is neither economically viable nor practical. VMware NSX virtualizes the entire networking and security stack, eliminating the need to deploy physical appliances. The entire rack can be dedicated to generic servers capable of running both application workloads and network and security services. As a result, independent units of compute can run efficiently and cost-effectively.

Figure 14: Appliance Sprawl vs DC in a Box

2.4.2.3 Vendor Sprawl

The networking and security stack in a data center is generally composed of appliances from different vendors. Those appliances support standard protocols such as Ethernet and IP, and the administrator can integrate them via a coexistence model. Even so, every deployment is a snowflake that the IT organization is responsible for designing and validating. These problems are of particular concern when the organization must carefully assess high availability and performance requirements to meet the targeted Service Level Objective (SLO) and Service Level Agreement (SLA). Designing and assessing a full-stack networking and security solution can take considerable time, resources, and expertise, delaying the organization's time to production.

Day-two operations represent a risk too. A heterogeneous solution may require the manual reconfiguration of multiple pieces of equipment or the creation and support of a custom-built automation tool. Troubleshooting and escalation may also become tedious and time-consuming because multiple parties are involved. The DC in a Box addresses this challenge by providing a single platform that covers the entire networking and security needs of a small-footprint data center.

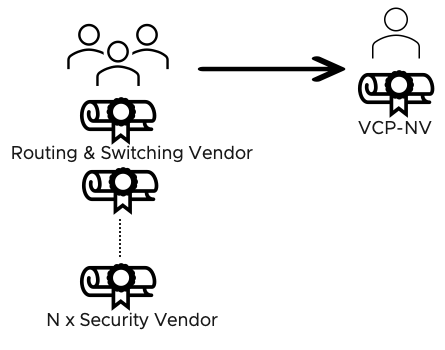

2.4.2.4 IT Staff Expertise

Small and medium organizations may not have the in-house resources to design and validate heterogeneous networking and security solutions. In such situations, consulting services from third-party system integrators are often required, increasing the overall adoption cost of the solution. Day-to-day activities such as configuration changes, triage, and troubleshooting require staff with various skill sets trained on different vendors and products. Developing and maintaining such a team is costly and time-consuming and may be out of reach for some organizations. The Total Cost of Ownership (TCO) is a key factor in assessing the success of an implemented solution. The Datacenter in a Box solution alleviates this pain point by requiring expertise in a single vendor's product: VMware NSX.

Figure 15: Vendor Sprawl and IT Staff Reduction for the DC in a Box

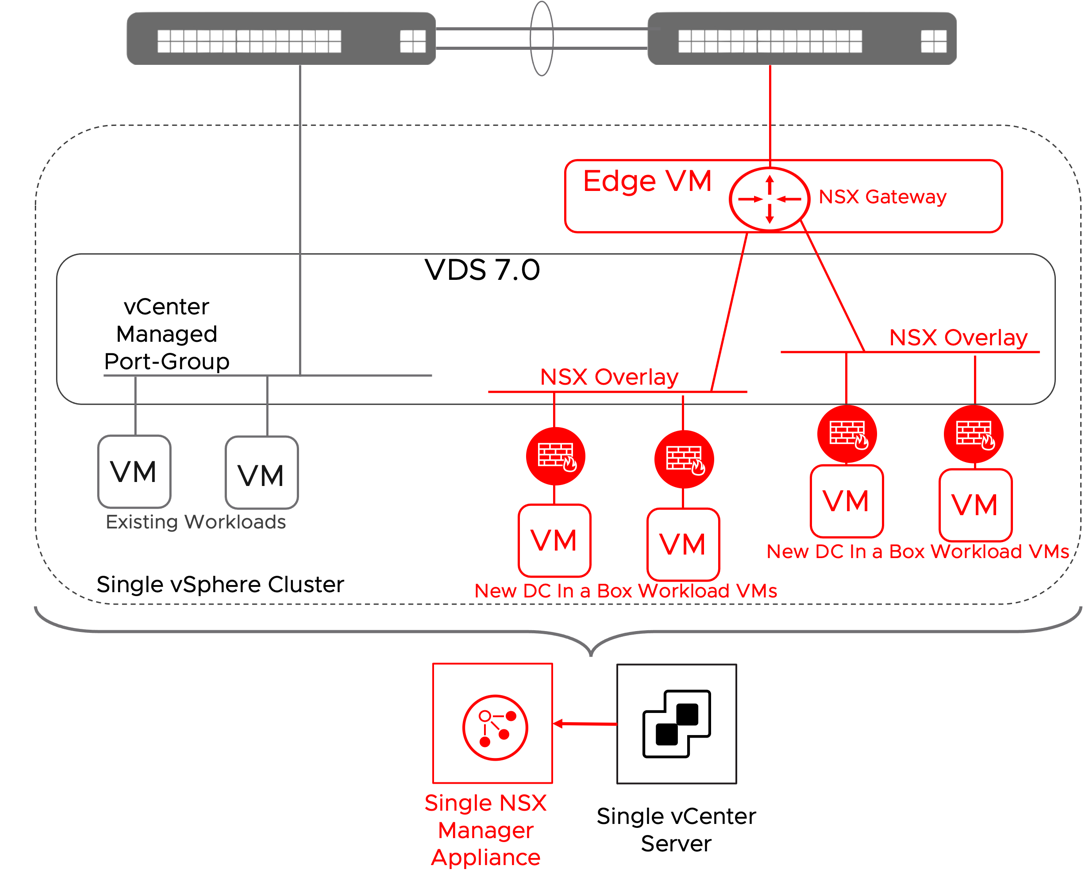

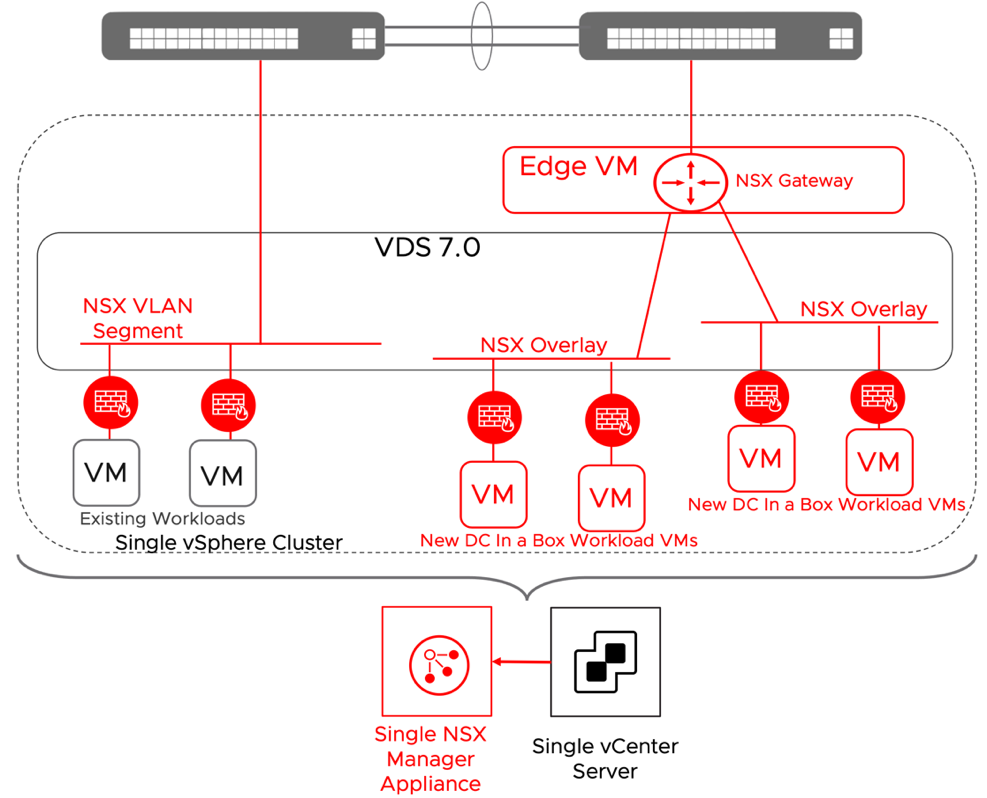

2.4.3 DC in a Box in a Brownfield Environment

The DC in a Box solution generally targets greenfield environments because enterprise architects can use it as an atomic building block to create a more complex infrastructure platform. In some situations, the organization may want to deploy the DC in a Box solution in a brownfield environment where workloads are already running. This approach is possible without modifying the DC in a Box design or its prerequisites. If the environment satisfies all the assumptions outlined in the solution design section, the DC in a Box can be implemented side by side with the existing workloads. Existing VMs keep using the networking and security services offered by the physical network. New workloads deployed on the new virtualized topology use those provided by VMware NSX instead. See the diagram below.

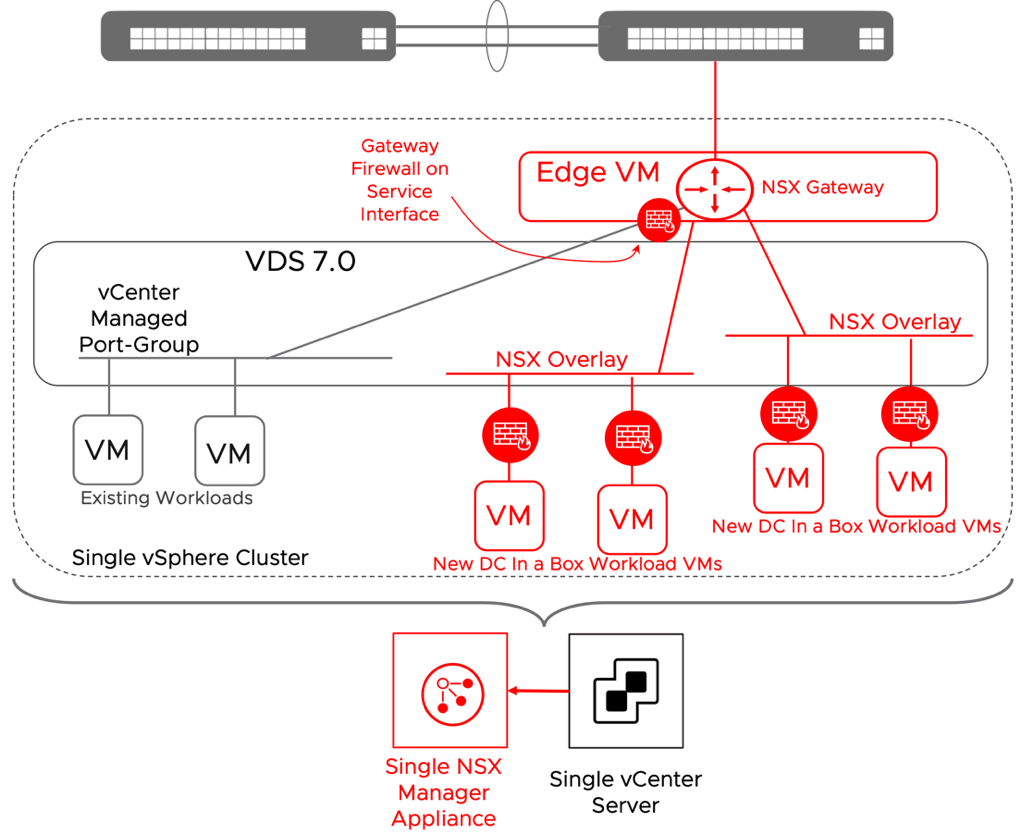

Figure 16: DC in a Box Deployed in a Brownfield

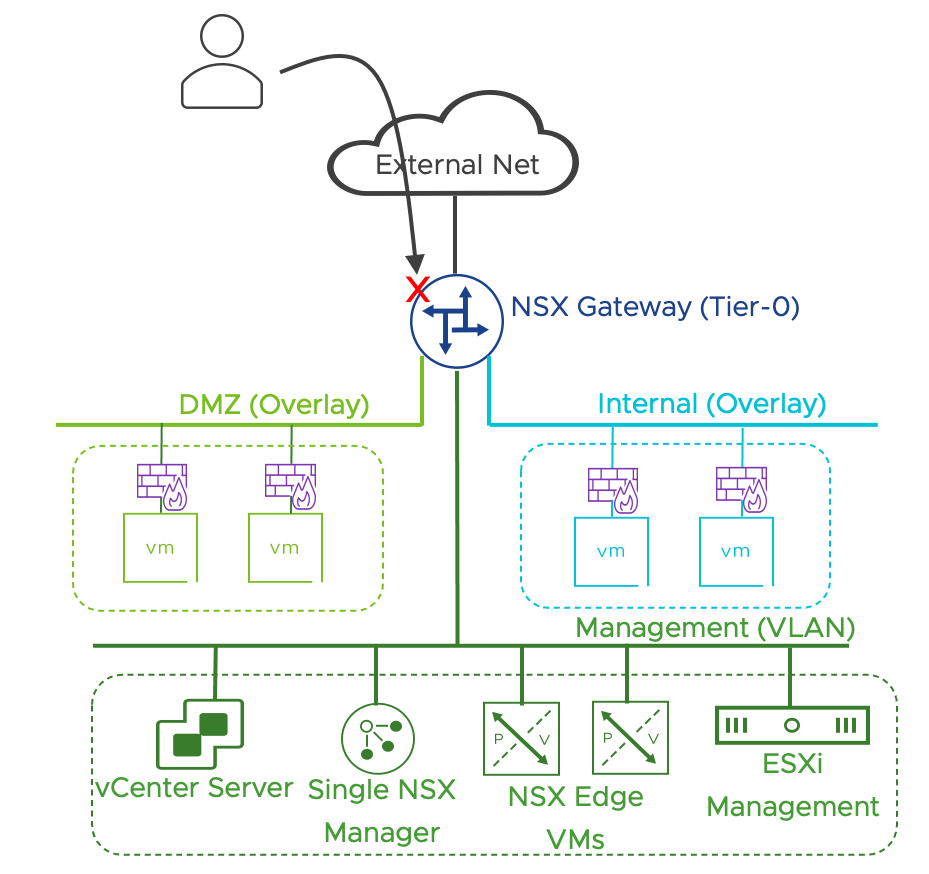

When the primary concern is integrating the existing workloads into the DC in a Box networking model, the most straightforward approach is to leverage a service interface on the NSX Gateway. The NSX Gateway then becomes the default gateway for the existing workloads. Adopting the simple security for applications use case for the existing workloads is entirely transparent to the physical network, but moving the networking services to the NSX Gateway is not. A security-only integration is recommended unless specific business drivers call for the networking integration. One example is providing security services for bare metal servers. The networking integration via the service interface is outlined in the diagram below.

Figure 17: DC in a Box in a Brownfield + Networking Integration

Figure 18: DC in a Box in a Brownfield + Security Integration

Additional integration strategies are possible but not covered in this document because of their operational complexity. Those presented represent what we deem the most effective at delivering business value while striving for simplicity.

3 Solutions Design

3.1 Overview of recent relevant NSX enhancements

The solutions presented in this document have been validated with NSX version 3.2.1, but they should work with any later release. They benefit from several critical functionalities recently introduced in the NSX platform. We review them here because they are central to the presented designs and because the reader may not yet be familiar with them. These functionalities are:

- NSX Distributed Security on distributed virtual port-groups

- NSX Deployment on VDS

- Single TEP network shared between Edge and Host Transport Nodes in a collapsed cluster

- Singleton NSX Manager support

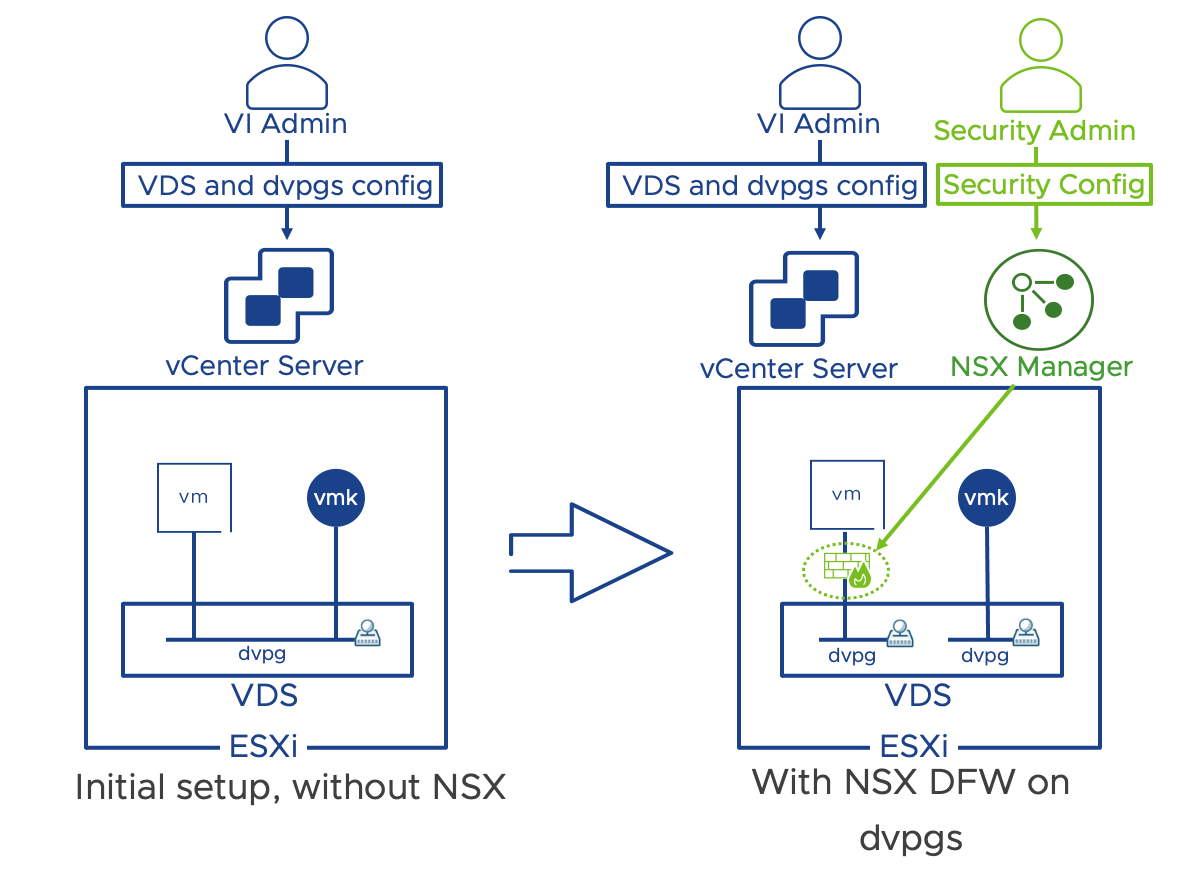

3.1.1 NSX Distributed Security on distributed virtual port-groups

Starting with NSX 3.2, workloads connected to vSphere distributed port-groups can be protected by all the native NSX distributed security services (DFW, IDPS, NTA, Malware Prevention, NDR). In deployments that do not include overlays, such as the simple security for applications use case, there is no need to migrate the VMs to NSX VLAN segments. This capability allows the NSX Distributed Firewall and the other NSX distributed security services to be inserted transparently into brownfield environments. The Virtual Infrastructure Administrator retains control of virtual infrastructure management through vCenter, including the management of the VDS and its port-groups. The security administrator manages and applies policies via NSX Manager, which provides a complete separation of roles and tools. Key requirements and implementation considerations for the solution are:

- No need to create NSX Segments in the NSX UI:

- dvpgs are discovered by NSX, and segment profiles can be applied to them in the NSX UI (IP Discovery, Spoof guard, switch security, etc.)

- DFW is enforced on all VMs connected to dvpgs

- NSX networking features based on overlay are NOT available

- Requires vSphere 6.7+, VDS 6.6+, NSX 3.2+

- No DFW for infrastructure traffic

Figure 19: Distributed Firewall on vSphere Distributed port-groups
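The minimum versions listed above lend themselves to a simple pre-flight check. The Python sketch below compares a hypothetical inventory, entered by hand, against those minimums using tuple comparison. It does not query vCenter or NSX. It also assumes plain dotted numeric versions, so build numbers and suffixes such as "U3" are not parsed.

```python
"""Pre-flight sketch: minimum versions for NSX security on dvpgs."""
from __future__ import annotations

# Minimums stated in this guide: vSphere 6.7+, VDS 6.6+, NSX 3.2+.
MINIMUMS = {"vsphere": (6, 7), "vds": (6, 6), "nsx": (3, 2)}


def parse_version(text: str) -> tuple[int, ...]:
    """Convert a dotted numeric version such as '7.0.3' into (7, 0, 3)."""
    return tuple(int(part) for part in text.split("."))


def missing_prereqs(inventory: dict[str, str]) -> list[str]:
    """Return the components whose version is below the documented minimum."""
    return [
        component
        for component, minimum in MINIMUMS.items()
        if parse_version(inventory[component]) < minimum
    ]


# Hypothetical inventories.
print(missing_prereqs({"vsphere": "7.0.3", "vds": "7.0.0", "nsx": "3.2.1"}))  # []
print(missing_prereqs({"vsphere": "6.5.0", "vds": "6.5.0", "nsx": "3.1.3"}))  # ['vsphere', 'vds', 'nsx']
```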

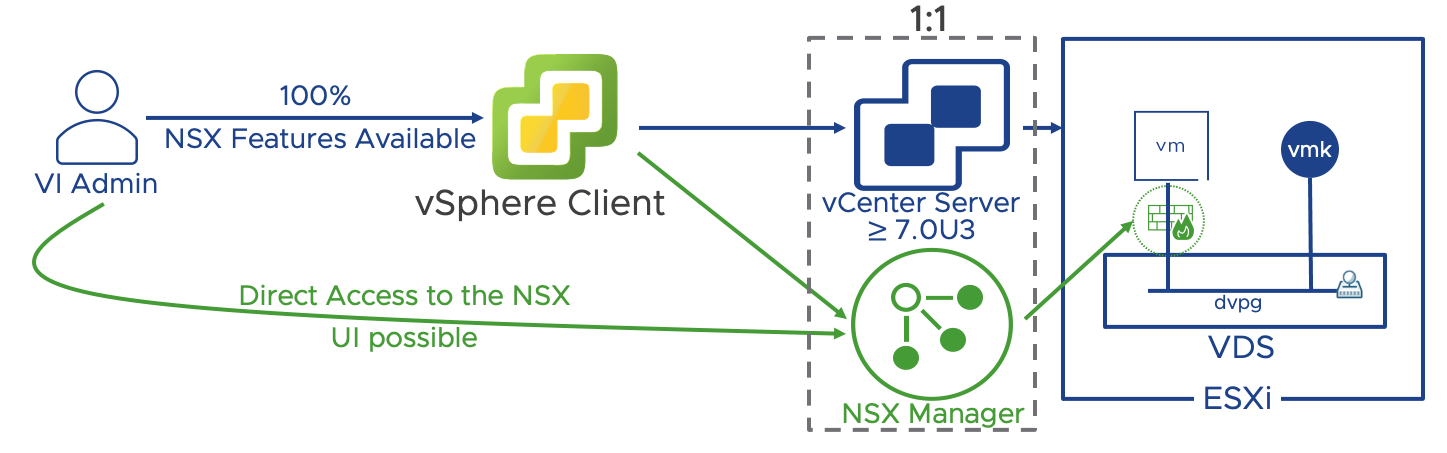

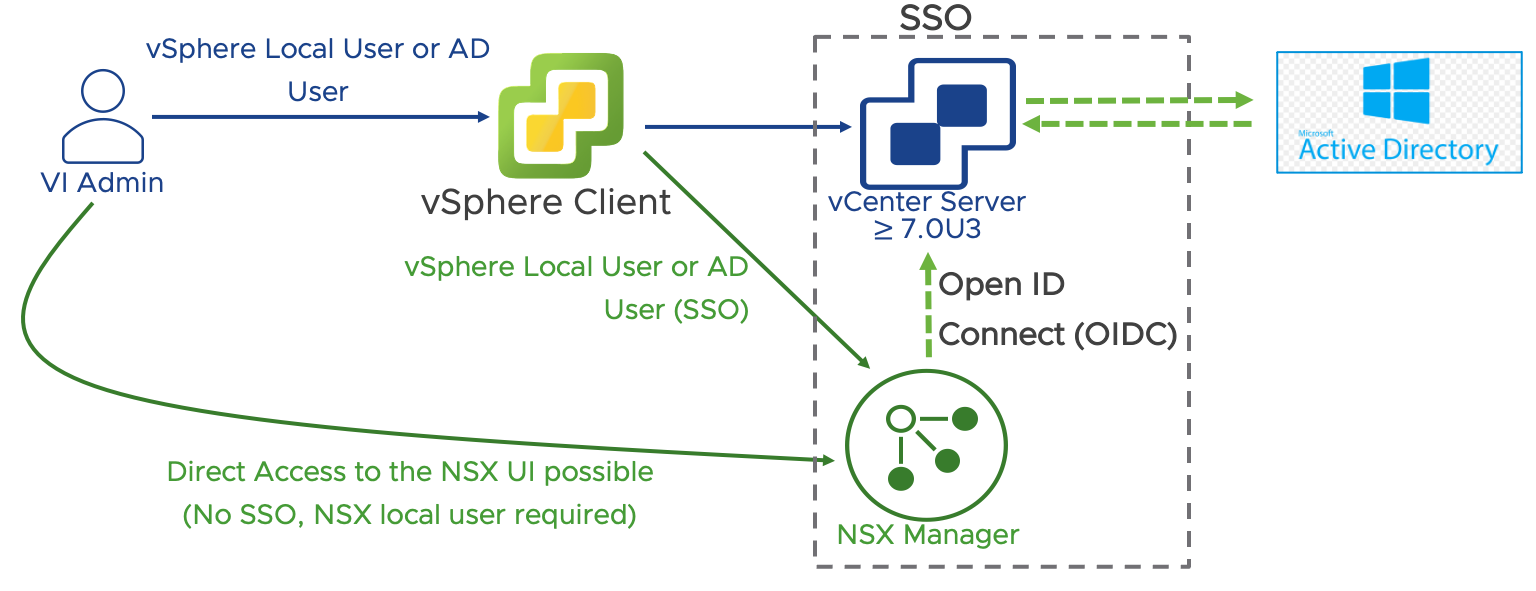

Some organizations do not want a separation of roles between the VI Admin and the Security Admin, and the VI Admin takes ownership of the implementation of NSX security policies. In that case, the NSX security configuration can be managed via the vSphere Client thanks to the vSphere plug-in for NSX. The vSphere plug-in for NSX provides single sign-on (SSO) between vCenter and NSX and requires vCenter 7.0U3 or later. Key requirements and implementation considerations for the vSphere plug-in for NSX are:

- vSphere plug-in for NSX installation can only happen at the NSX Manager deployment time. If NSX Manager is already deployed, the vSphere plug-in for NSX cannot be installed.

- Only a single NSX Manager is supported. A three-node NSX Manager cluster is not supported (NSX Manager clusters are supported starting with NSX 4.0.1)

- When leveraging the vSphere plug-in for NSX, NSX Manager can only manage a single vCenter. The ability to manage multiple compute managers is not available.

Figure 20: vSphere plug-in for NSX

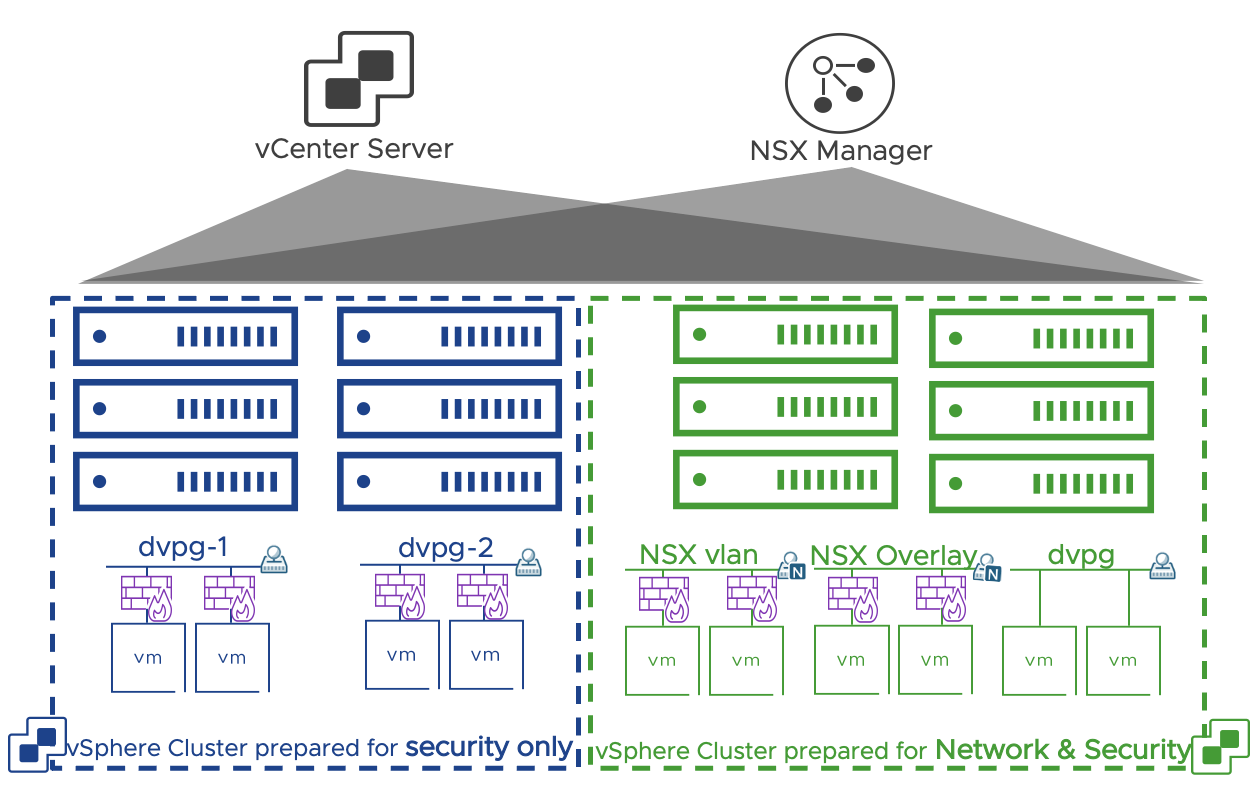

When NSX security for vSphere distributed port-groups is enabled on a vSphere cluster (via security-only preparation), overlay networks are not available. Overlay networks are available in clusters prepared for network and security. Security-only and Network & Security prepared clusters can coexist within the same NSX and vCenter installation.

Key implementation considerations for Network & Security clusters compared to security only clusters are:

- Distributed security is available on VLAN networks, but VMs must be connected to NSX VLAN segments

- VMs connected to dvpgs are excluded from the NSX Distributed Firewall and any other distributed security feature

- Converting a cluster between the two modes requires removing NSX from the cluster and installing it again

In the simple security for applications use case, the vSphere cluster is prepared for security only. In the DC in a Box use case, it is prepared for networking and security.

Figure 21: Security only vs Networking and Security clusters
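The considerations above can be summarized as a small decision function. The Python sketch below encodes them under one stated assumption: NSX segments are treated as absent from security-only clusters, which matches how this guide uses that mode. The enum names and values are hypothetical labels, not NSX API values.

```python
"""Sketch: where NSX distributed security applies, by cluster preparation mode."""
from enum import Enum


class ClusterMode(Enum):
    SECURITY_ONLY = "security only"
    NETWORK_AND_SECURITY = "network and security"


class Attachment(Enum):
    DVPG = "vCenter-managed dvpg"
    NSX_VLAN_SEGMENT = "NSX VLAN segment"
    NSX_OVERLAY_SEGMENT = "NSX overlay segment"


def distributed_security_applies(mode: ClusterMode, attachment: Attachment) -> bool:
    """Return True if the DFW and other distributed services protect the VM."""
    if mode is ClusterMode.SECURITY_ONLY:
        if attachment is not Attachment.DVPG:
            # Assumption: no NSX segments in security-only clusters;
            # overlays are unavailable in this mode in any case.
            raise ValueError(f"{attachment.value} is not used in a security-only cluster")
        return True
    # Network & Security: VMs on dvpgs are excluded; NSX segments are protected.
    return attachment is not Attachment.DVPG
```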

3.1.2 NSX on VDS (Networking and Security Clusters)

3.1.2.1 Overview

Starting with NSX 3.0 and vSphere 7, NSX can run on top of an existing VDS. This change applies only to vSphere. When running NSX on VDS, all the NSX functionalities are preserved, including distributed security and overlays, and the NSX segments are also presented as port groups on the VDS. This model allows traditional dvpgs and NSX segments to share the same virtual switch and uplinks, whereas in the past deploying NSX required separate virtual switches and uplinks. We can now place the workloads requiring NSX services on NSX-managed port groups. The components that we want to keep outside the NSX purview stay on traditional dvpgs.

For in-depth coverage of the NSX on VDS model, please review this TechZone article. The following sections will address how the two use cases covered in this document specifically benefit from it.

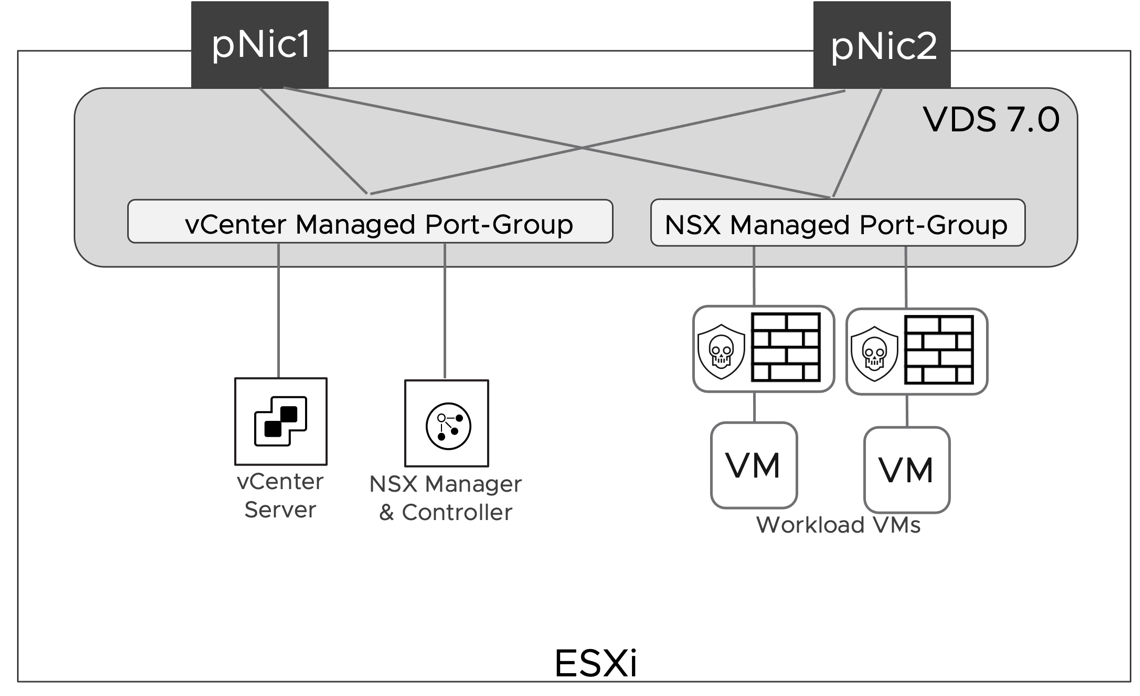

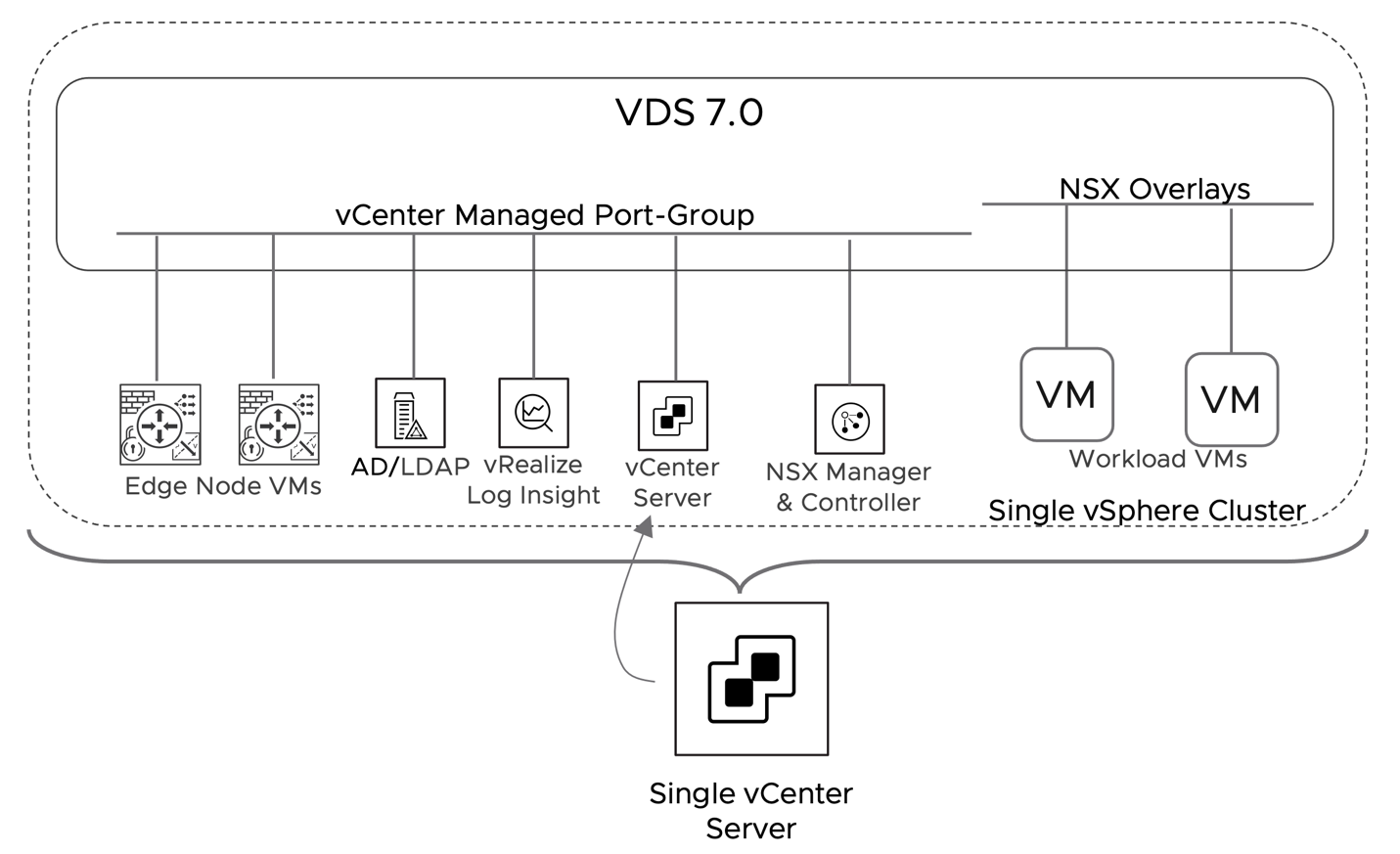

3.1.2.2 DC in a Box

Collapsed cluster design defines a deployment model where all the components of the stack (Management and Edge) along with workloads share the same cluster. VDS 7.0 can have some of its port-groups managed by vCenter and some by NSX Manager. Placing NSX Manager on a dvpg managed by vCenter eliminates any circular dependency and makes implementing a collapsed cluster design straightforward.

Figure 22: Management Components network placement on VDS
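The circular dependency that this placement avoids can be made concrete with a dependency graph, where an edge A -> B means "A needs B to be reachable or configurable". The Python sketch below runs a standard depth-first search for cycles on two hypothetical graphs. In the first, NSX Manager is attached to an NSX-managed segment. In the second, it is attached to a vCenter-managed dvpg. The node names and edges are illustrative modeling choices, not something NSX computes. vCenter's own placement is deliberately left out.

```python
"""Sketch: find a dependency cycle with depth-first search."""
from __future__ import annotations


def find_cycle(graph: dict[str, list[str]]) -> list[str] | None:
    """Return one cycle as a node path (first node repeated at the end), or None."""
    visiting: set[str] = set()  # nodes on the current DFS path
    done: set[str] = set()      # nodes fully explored without finding a cycle
    path: list[str] = []

    def visit(node: str) -> list[str] | None:
        visiting.add(node)
        path.append(node)
        for dep in graph.get(node, []):
            if dep in visiting:
                return path[path.index(dep):] + [dep]
            if dep not in done:
                cycle = visit(dep)
                if cycle:
                    return cycle
        visiting.remove(node)
        path.pop()
        done.add(node)
        return None

    for start in graph:
        if start not in done:
            cycle = visit(start)
            if cycle:
                return cycle
    return None


# Hypothetical graphs: an edge A -> B means "A depends on B".
on_nsx_segment = {
    "NSX Manager": ["mgmt segment (NSX-managed)"],
    "mgmt segment (NSX-managed)": ["NSX Manager"],
}
on_vcenter_dvpg = {
    "NSX Manager": ["mgmt dvpg (vCenter-managed)"],
    "mgmt dvpg (vCenter-managed)": ["vCenter Server"],
}
print(find_cycle(on_nsx_segment))   # ['NSX Manager', 'mgmt segment (NSX-managed)', 'NSX Manager']
print(find_cycle(on_vcenter_dvpg))  # None
```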

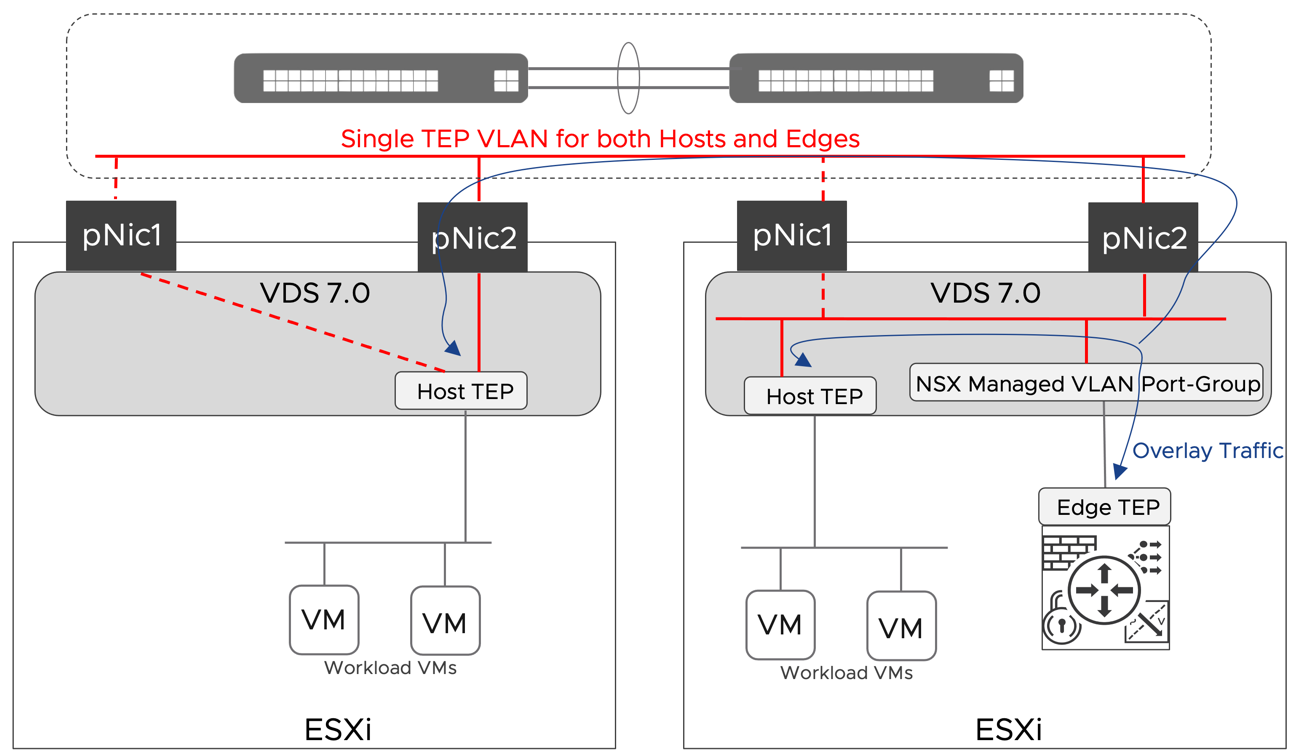

3.1.3 Single TEP Network (DC in a box)

In a collapsed cluster design, Edge node VMs are deployed on NSX-prepared hosts so that the same server can host both the Edge nodes and the workload VMs. In versions earlier than 3.1, an Edge node deployed on an NSX-prepared host with only two uplinks needed its TEP interfaces on a different subnet and VLAN from the host TEPs. The reason was that the ESXi host could process Geneve-encapsulated packets only when they were received on a physical uplink.

NSX 3.1 eliminated this limitation, and inter-TEP traffic within the same host is now supported. This improvement does not affect the simple security use case, because overlay traffic is not part of that design. It does benefit the DC in a Box solution by simplifying the requirements on the physical network.
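The version-dependent rule for Edge and host TEP addressing on NSX-prepared hosts with two uplinks can be expressed as a small validation function. The Python sketch below uses the standard `ipaddress` module to compare the subnets of a hypothetical host TEP and Edge TEP. It checks subnets only, on the assumption that VLAN IDs follow the subnets. The NSX version is passed in as a plain tuple.

```python
"""Sketch: is a shared host/Edge TEP subnet allowed for this NSX version?"""
from __future__ import annotations

from ipaddress import ip_interface

INTER_TEP_IN_HOST_SINCE = (3, 1)  # first NSX release supporting inter-TEP traffic within a host


def tep_plan_valid(nsx_version: tuple[int, ...], host_tep: str, edge_tep: str) -> bool:
    """Check a host/Edge TEP pair for an Edge VM on an NSX-prepared host with two uplinks."""
    same_subnet = ip_interface(host_tep).network == ip_interface(edge_tep).network
    # Before 3.1, Geneve packets were processed only when received on a physical
    # uplink, so host and Edge TEPs had to sit on different subnets/VLANs.
    return not (same_subnet and nsx_version < INTER_TEP_IN_HOST_SINCE)


# Hypothetical TEP addresses.
print(tep_plan_valid((3, 0), "172.16.10.11/24", "172.16.10.21/24"))     # False
print(tep_plan_valid((3, 1, 3), "172.16.10.11/24", "172.16.10.21/24"))  # True
```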

Confining all overlay traffic to a single VLAN reduces the physical network requirements. The network administrator now configures a single VLAN, rather than two, and enables jumbo frames on it. Also, a single-VLAN model does not require routing overlay traffic, so there is no need to enable switched virtual interfaces (SVIs) or a first-hop redundancy protocol either. In the past, network administrators commonly enabled Layer 2 jumbo frames on the physical switches but forgot to do the same for Layer 3 traffic in the SVI configuration. Reducing the configuration points on the physical network reduces the risk of error and of a frustrating adoption experience.
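Adding up the Geneve encapsulation overhead shows why the transport VLAN needs jumbo frames at all. The Python sketch below computes the minimum underlay IP MTU needed to carry a full-size inner frame. It uses header sizes from the IPv4, IPv6, UDP, and Geneve (RFC 8926) specifications. The default parameter values are assumptions for illustration. NSX may carry Geneve options, so check the NSX documentation for the minimum and recommended TEP MTU of your release rather than relying on this arithmetic alone.

```python
"""Sketch: underlay IP MTU needed to carry Geneve-encapsulated frames."""

OUTER_IPV4 = 20      # outer IPv4 header, no IP options
OUTER_IPV6 = 40      # outer IPv6 fixed header
UDP = 8              # UDP header
GENEVE_BASE = 8      # fixed Geneve header (RFC 8926)
INNER_ETHERNET = 14  # encapsulated Ethernet header, untagged
DOT1Q_TAG = 4        # optional 802.1Q tag inside the encapsulated frame


def required_underlay_mtu(inner_ip_mtu: int = 1500, geneve_options: int = 0,
                          ipv6_underlay: bool = False, inner_vlan_tag: bool = False) -> int:
    """Return the smallest underlay IP MTU that carries the frame unfragmented."""
    # Geneve option length is a 6-bit count of 4-byte words (0 to 252 bytes).
    if geneve_options % 4 or not 0 <= geneve_options <= 252:
        raise ValueError("Geneve options must be 0-252 bytes in 4-byte multiples")
    outer_ip = OUTER_IPV6 if ipv6_underlay else OUTER_IPV4
    inner_frame = inner_ip_mtu + INNER_ETHERNET + (DOT1Q_TAG if inner_vlan_tag else 0)
    return outer_ip + UDP + GENEVE_BASE + geneve_options + inner_frame


print(required_underlay_mtu())                   # 1550
print(required_underlay_mtu(geneve_options=32))  # 1582
```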

In the context of a simplified design, using a single overlay transport VLAN is also advantageous from a security perspective. When we must route overlay traffic, the SVIs on the transport network can serve as an entry point to the overlay network because overlay protocols such as VXLAN and Geneve do not provide embedded security. There is no better way to secure the transport network than completely isolating it on a single layer 2 domain that only the participating transport nodes can access. Securing a routed transport network requires the use of additional physical network functionalities such as an external firewall, ACLs, or VRFs, which are not in line with the goals of a simplified design.

Figure 23: Inter TEP Communication within the same host

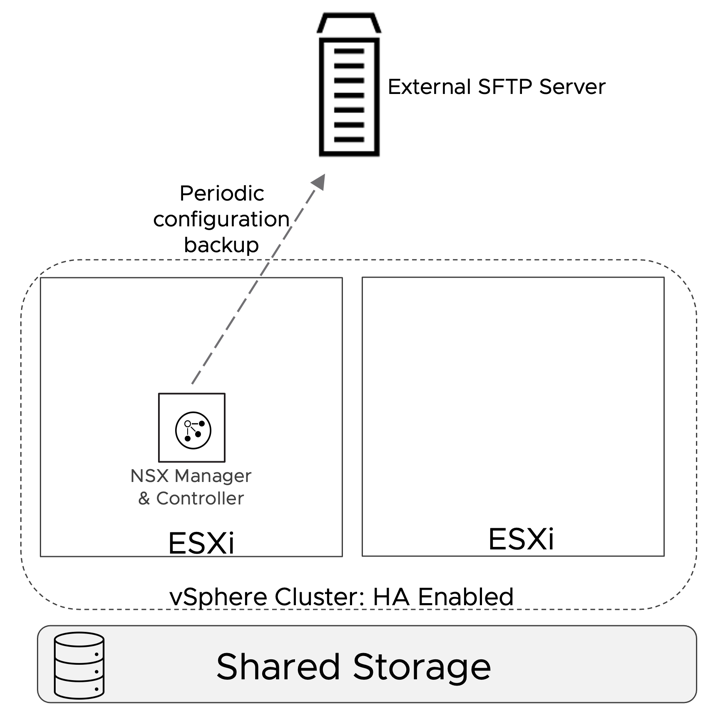

3.1.4 Singleton NSX Manager

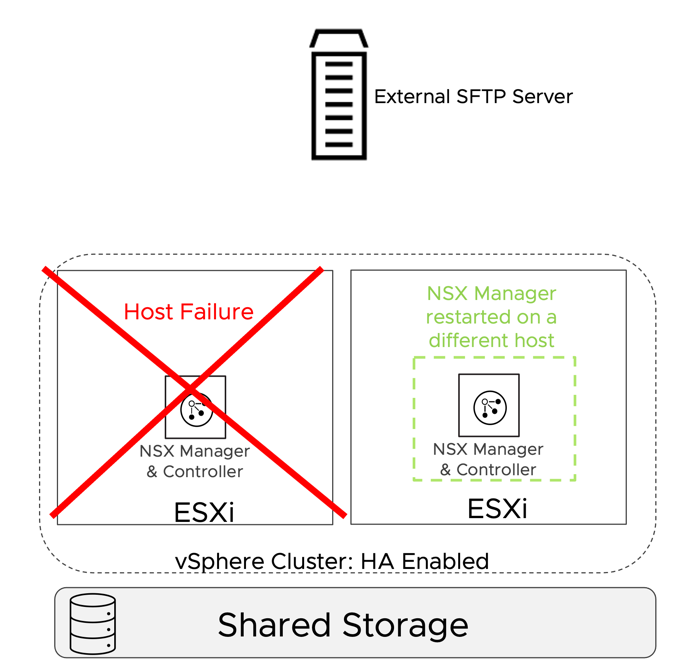

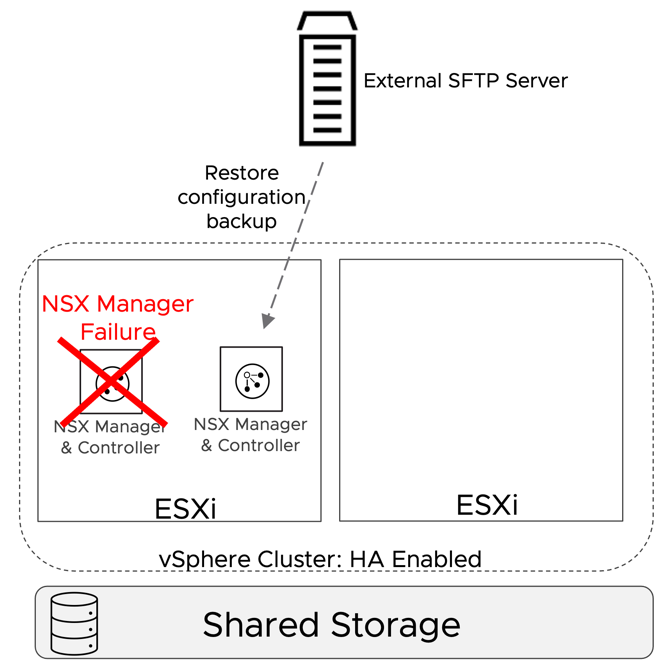

The resources required to run a cluster of three NSX Managers may be a challenge in small environments. Starting with NSX 3.1, VMware supports deploying a single NSX Manager in production environments. This minimal deployment model relies on vSphere HA and on the backup and restore procedure to maintain an adequate level of availability.

vSphere HA protects against the failure of the physical host where NSX Manager is running by restarting NSX Manager on a different available host. Enough resources must be available on the surviving hosts. vSphere HA admission control can help ensure that they are available in case of failure.
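The requirement that surviving hosts have enough resources can be sanity-checked with simple arithmetic. The Python sketch below is a deliberately simplified N+1 memory check that assumes the worst case is the loss of the largest host. It is not how vSphere HA admission control computes capacity, which depends on reservations and the configured admission control policy. The host sizes and demand figures are hypothetical.

```python
"""Simplified N+1 check: does the cluster still fit its memory demand after one host fails?"""
from __future__ import annotations


def survives_one_host_failure(host_memory_gb: list[float], demand_gb: float) -> bool:
    """Assume the largest host fails and compare the remaining memory with demand."""
    if len(host_memory_gb) < 2:
        return False  # a single host leaves nowhere to restart VMs
    remaining = sum(host_memory_gb) - max(host_memory_gb)
    return remaining >= demand_gb


# Hypothetical clusters; demand includes NSX Manager and all other VMs.
print(survives_one_host_failure([256, 256, 256], demand_gb=400))  # True
print(survives_one_host_failure([256, 256], demand_gb=300))       # False
```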

Backup and restore procedures protect against the failure of the NSX Manager appliance itself. The SFTP server where the backups are stored should not be placed on infrastructure shared with the DC in a Box.

Figure 24: Singleton NSX Manager HA Model

Figure 25: Singleton NSX Manager - Failure of the ESXi host

Figure 26: Singleton NSX Manager - Failure of the NSX Manager

3.2 Design terminology

The detailed designs for the Simple Security for Applications and Data Center in a Box use cases presented in the next sections use a set of assumptions and design decisions. In the context of this document, these terms are defined as follows.

Assumption: a required environment property before the use case is implemented. Assumptions define the scope of supportability for the solutions. All assumptions for the selected use case must be met before implementing it. Assumptions cover the physical network, compute hardware specifications, virtual infrastructure design, and authentication solutions.

Design Decision: an arbitrary choice about how the software-defined networking and security solution is implemented. The solution goals drive design decisions.

Each assumption or design decision is associated with an identifier for easy reference. The identifier has three parts (e.g., SS.AS.1 or DC.DD.1). The first two letters identify the use case: SS for Simple Security, DC for Datacenter in a Box. The third and fourth letters distinguish assumptions (AS) from design decisions (DD). The digits at the end are a numerical identifier.
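The identifier scheme is regular enough to parse mechanically, which can help when cross-referencing decisions in tooling or change records. The Python sketch below accepts the SS and DC prefixes defined here, plus the NAPP prefix used later for the NSX Application Platform design decisions. The descriptive labels in the dictionaries are this sketch's own wording.

```python
"""Sketch: parse design identifiers such as SS.AS.1 or DC.DD.1."""
from __future__ import annotations

import re

USE_CASES = {"SS": "Simple Security", "DC": "Datacenter in a Box", "NAPP": "NSX Application Platform"}
KINDS = {"AS": "assumption", "DD": "design decision"}
IDENTIFIER = re.compile(r"(?P<use_case>[A-Z]+)\.(?P<kind>AS|DD)\.(?P<number>\d+)")


def parse_identifier(identifier: str) -> tuple[str, str, int]:
    """Split an identifier into (use case, kind, number)."""
    match = IDENTIFIER.fullmatch(identifier)
    if match is None or match["use_case"] not in USE_CASES:
        raise ValueError(f"not a design identifier: {identifier!r}")
    return USE_CASES[match["use_case"]], KINDS[match["kind"]], int(match["number"])


print(parse_identifier("SS.AS.1"))    # ('Simple Security', 'assumption', 1)
print(parse_identifier("NAPP.DD.3"))  # ('NSX Application Platform', 'design decision', 3)
```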

3.3 Simple Security Solution Design

3.3.1 Assumptions

The solutions in this document make a set of assumptions about the virtual infrastructure and supporting services. We made these assumptions to simplify the deployment and enable a quick path to consuming the use cases addressed by the solutions. Before modifying any of the configurations or assumptions described here, we recommend evaluating whether the benefit of the modification outweighs the additional effort it may require.

We do not provide any recommendation or assumption regarding the physical network, because the simple security solution is completely agnostic to it.

3.3.1.1 Virtual Environment Assumptions

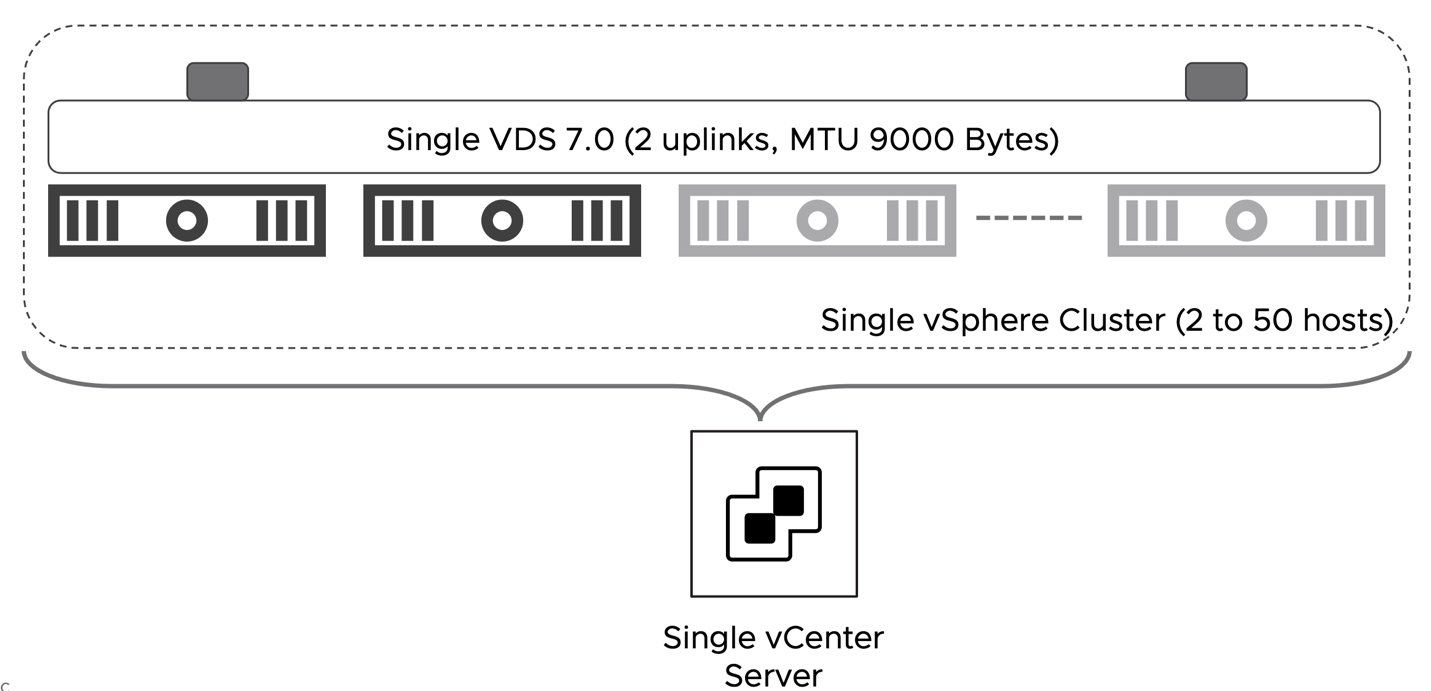

The solutions in this document all assume a vSphere 6.7 or later environment with a single vSphere cluster. That cluster is used homogeneously to provide hosting, network, and security services to the workloads running on top of it. From an NSX perspective, this type of environment is called a Fully Collapsed Cluster design, because the management workloads and production workloads running in the environment are hosted on a single cluster. We made the following assumptions about the virtual environment to enable this design.

Figure 27: Simple Security - Virtual Infrastructure Assumptions

| # | Assumption | Description | Justification |
|---|---|---|---|
| SS.AS.1 | The vSphere environment is running version 6.7 or later. | The vCenter Server and the ESXi hosts are running software version 6.7 or later. | vSphere 6.7 is required to apply NSX distributed security services to VMs connected to vSphere dvpgs, which dramatically simplifies the implementation. |
| SS.AS.2 | The vCenter Server appliance deployment size is at least Small. | A Small vCenter appliance supports up to 100 hosts and 1000 VMs. | The Small size covers the upper boundary of the solution in scope. |
| SS.AS.3 | vCenter Server is deployed on the Management Network VLAN and connected to a vCenter-managed dvpg. | vCenter Server connectivity is provided by the physical network and is completely independent from NSX. | Provides a direct, secure connection to the ESXi hosts and NSX Manager. |
| SS.AS.4 | Collapsed Cluster Design | A single vSphere collapsed cluster where management appliances and workloads all reside. | Maximizes available resource utilization. |
| SS.AS.5 | VDS 6.6 or later spans the entire cluster. | The VDS version must be 6.6 or later to support NSX distributed security services on dvpgs. | Either a single VDS or multiple VDSs are supported, as long as they are version 6.6 or later. |
| SS.AS.6 | vSphere HA is enabled. | vSphere HA protects all virtual machines against failures. | vSphere HA provides a robust level of availability protection for the NSX components. |
| SS.AS.7 | An NTP server is available. | vCenter and the ESXi hosts are synchronized to a reliable NTP server. | Firewall logs are generated by the ESXi hosts. NTP synchronization ensures that their timestamps are accurate. |

Table 3: Virtual Environment Assumptions for Simple Security Solution

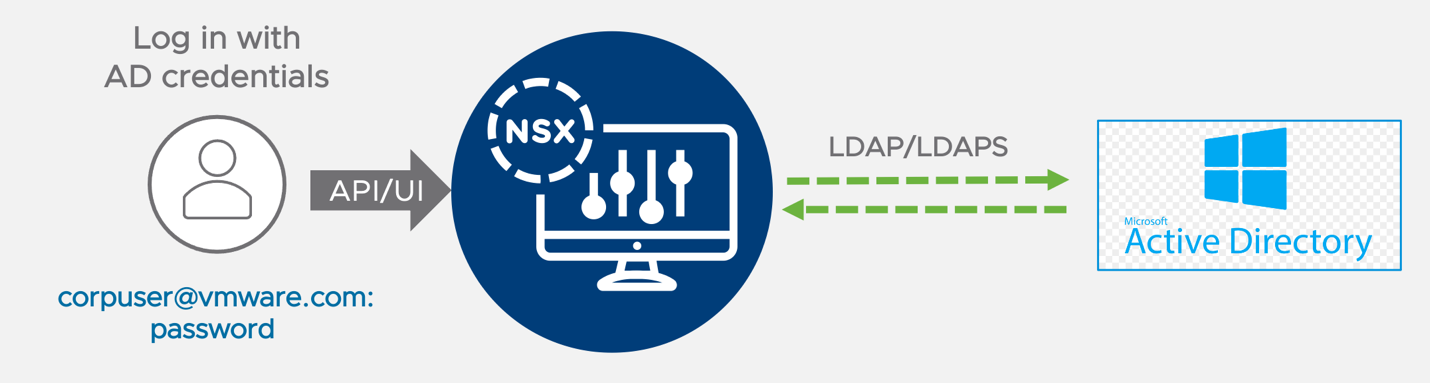

3.3.1.2 Access and Authentication Assumptions

A critical component for any environment meant to host production workloads is its ability to control, track, and provide access to those teams and systems that need to access the environment. NSX provides a granular role-based access control capability through integrations with enterprise grade authentication directories. One requirement is to configure the integration between NSX Manager and vCenter Server with a corporate directory that meets the organization’s requirements for security and compliance.

It is generally recommended to tie the built-in roles in NSX to groups in your corporate directory where they match responsibilities of existing teams for this environment.

For the simple security for applications use case, we assume that no complex RBAC is required and that it is sufficient to entitle a group of users to manage the entire NSX solution. For such simple requirements, the SSO capabilities between vCenter and NSX Manager provided by the vSphere plug-in for NSX are a good fit. It is sufficient to entitle vCenter users (local or external) with the NSX Administrator role, and they automatically gain access to manage NSX through the vSphere Client.

If the RBAC requirements are more complex, or if direct access to the NSX UI (bypassing the vSphere Client) is required, a direct integration between NSX and Active Directory can be configured.

In this design NSX will leverage the SSO capabilities of the vSphere plug-in for NSX.

Figure 28: NSX Active Directory Authentication via the vSphere plug-in for NSX

Figure 29: NSX Active Directory direct Integration

| # | Assumption | Description | Justification |
|---|---|---|---|
| SS.AS.8 | Microsoft Active Directory exists and is integrated with vCenter. | NSX Manager is managed via the vSphere Client and benefits from the SSO capabilities across vCenter and NSX. | MS Active Directory is the most common directory service in SMEs, and it is commonly integrated with vCenter. No action is required on NSX to leverage Active Directory authentication when NSX Manager is deployed via the vSphere plug-in for NSX. |
| SS.AS.9 | Granular RBAC is not required. It is sufficient to entitle a set of users with full control of NSX. | The vSphere plug-in for NSX does not allow mapping vSphere roles to all the NSX predefined roles or to custom roles. A user with the “NSX Administrator” role in vCenter is mapped to the Enterprise Admin role in NSX. | In SMEs, a single individual or a small team often controls the entire NSX platform. If more granular RBAC is required, the direct integration of NSX with the organization's Active Directory should be implemented. |

Table 4: Access and Authentication Assumptions for Simple Security Solution

3.3.2 NSX Design

3.3.2.1 Scale and Placement of Management and Data Plane Components

The management components in this solution are the vCenter Server Appliance, the NSX Manager appliance, and vRealize Log Insight. Although native HA/clustering solutions are available for them, this design relies on vSphere High Availability for their availability. This design assumes that these components live inside the single vSphere cluster hosting all the workloads, but running them elsewhere is fully supported.

Note: The deployment of a dedicated instance of vRealize Log Insight is not required if a centralized instance is already present or another logging solution is in place.

All the management components, including vCenter and NSX Manager, reside on a vCenter-managed distributed port group (dvpg) whose VLAN ID matches the physical management network. NSX security services are available on every dvpg, including the one serving the management network, so NSX Manager and vCenter Server must be included in the Distributed Firewall exclusion list.

Note: Providing Security services to infrastructure components such as NSX Manager and vCenter server is out of scope for the simple security for application solution.

Figure 30: Simple Security - NSX Components and Workload Placement

| # | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| SS.DD.1 | A single NSX Manager will be deployed. | A single NSX Manager minimizes the resources required to implement the solution. vSphere HA provides a basic level of redundancy to the SDN management and control plane. | During an NSX Manager outage, the NSX management and control plane are unavailable. Existing flows for already connected VMs are not impacted, but configuration changes are not possible and new VMs will not have Distributed Firewall rules applied. |
| SS.DD.2 | In vSphere HA, set the restart priority policy for the NSX Manager appliance to high. | NSX Manager implements the control plane for the distributed firewall solution. vSphere HA restarts the NSX Manager appliance first so that other failed virtual machines can receive the correct security policies once they are restored. | If the restart priority for other VMs is set to highest, NSX Manager can take longer to become available, which may affect the security policies applied to the restored VMs. |
| SS.DD.3 | Place the NSX Manager appliance on the management VLAN network, on a vCenter-managed distributed port group. | Provides a direct connection to the ESXi hosts and vCenter Server. | An IP address must be available on the Management Network for the NSX Manager appliance. |
| SS.DD.4 | Place the vRealize Log Insight appliance on the management VLAN network, on a vCenter-managed distributed port group. | Provides a direct connection to the ESXi hosts and vCenter Server. | An IP address must be available on the Management Network for the vRLI appliance. |
| SS.DD.5 | Include vCenter Server and NSX Manager in the NSX Distributed Firewall exclusion list (if not already included). | Distributed Firewall policies may disable management connectivity to infrastructure management components. A security policy that disables connectivity to vCenter Server can easily be rolled back by bypassing the vSphere Client and connecting directly to NSX Manager. A policy that affects connectivity to NSX Manager, however, requires a more complex recovery procedure that involves contacting VMware Global Support Services (GSS). | Security protection for vCenter Server and NSX Manager is not available. |

Table 5: Scale and Placement of Management Plane Components Design Decisions for the Simple Security Solution
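SS.DD.2 is easy to erode as other VMs are added to the cluster. The Python sketch below flags VMs whose vSphere HA restart priority is higher than NSX Manager's, using the five restart priority levels of vSphere HA (lowest through highest). The VM names, and the assumption that priorities have already been collected into a dictionary, are hypothetical.

```python
"""Sketch: list VMs whose HA restart priority outranks NSX Manager's."""
from __future__ import annotations

PRIORITIES = ["lowest", "low", "medium", "high", "highest"]  # ascending order


def outranking_vms(vm_priorities: dict[str, str], anchor: str = "nsx-manager") -> list[str]:
    """Return VMs scheduled for restart ahead of `anchor` because their priority is higher."""
    anchor_rank = PRIORITIES.index(vm_priorities[anchor])
    return sorted(
        name for name, priority in vm_priorities.items()
        if PRIORITIES.index(priority) > anchor_rank
    )


# Hypothetical inventory.
inventory = {"nsx-manager": "high", "vcenter": "highest", "app01": "medium", "db01": "highest"}
print(outranking_vms(inventory))  # ['db01', 'vcenter']
```

Each flagged VM is a candidate for review against the design implication of SS.DD.2.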

| Management Component | Appliance Size | vCPUs | vRAM | Total Storage |
|---|---|---|---|---|
| vCenter Server | Small | 4 | 19 GB | 694 GB |
| NSX Manager Virtual Appliance | Medium | 6 | 24 GB | 200 GB |
| vRealize Log Insight (not required if another instance is already present) | Small | 4 | 8 GB | 530 GB |

Table 6: Management Components Hardware Requirements for the Simple Security Solution

3.3.3 NSX Application Platform (NAPP) Design - Optional

With NSX Data Center 3.2, VMware introduced the NSX Application Platform (NAPP). It is a microservices-based solution that provides a highly available, resilient, scale-out architecture delivering a set of core platform services. These services run several new NSX features, such as:

- NSX Intelligence (Application topology discovery and visualization, security policy recommendation)

- NSX Malware Prevention

- Network Traffic Analysis

- NSX Network Detection and Response

Figure 31: Reference NAPP deployment design for the simple security for application use case

3.3.3.1 High level design Decisions

The table below summarizes the design decisions for the NAPP deployment in the simple security for applications use case.

| # | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| NAPP.DD.1 | Deploy NAPP on top of a Tanzu Kubernetes Cluster. | It provides an end-to-end solution supported by VMware. | A Tanzu Basic license must be available. Additional Supervisor Cluster VMs must be deployed compared to a solution based on upstream Kubernetes. |
| NAPP.DD.2 | Use vSphere with Tanzu with VDS networking. | It provides an integrated solution with vCenter Server and does not require NSX networking or overlays. This is the only mode supported by the NAPP Automation Appliance. | Three routable VLANs are required. An HA Proxy load balancer VM is deployed. |
| NAPP.DD.3 | Use the NAPP Automation Appliance to deploy Tanzu and NAPP. | The NAPP Automation Appliance provides end-to-end automation of the setup process with minimal user input. It is also useful for troubleshooting and day-2 operations once the solution is in place. | The NAPP Automation Appliance is packaged as a dedicated VM that must be deployed as part of the solution. |

Table 7: NAPP High-Level Design Decisions

3.3.3.2 Compute and Network requirements

The table below summarizes the compute requirements of the NAPP deployment for the simple security for applications use case. A total of 9 VMs must be deployed. At least one storage policy must be available: if vSAN is part of the solution, the default vSAN storage policy can be used; if external storage is in place, a tag-based policy can be used instead.

| Management Component | # of VMs | vCPUs per VM | vRAM per VM | Storage per VM (Thin Provisioned) |

| Supervisor Control Plane VMs | 3 | 4 | 16G | 32 GB |

| TKC Control Plane VM | 1 | 2 | 8G | 328 GB |

| TKC Node VMs | 3 | 16 | 64G | 1128 GB |

| HA Proxy | 1 | 2 | 4G | 20 GB |

| NAPP Automation Appliance | 1 | 1 | 4G | 10 GB |

| Total | 9 | 65 | 256G | 3838 GB |

Table 8: Compute requirements for NAPP
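The totals row can be cross-checked by multiplying each per-VM value by the VM count and summing the results. The sketch below assumes that the vCPU, vRAM, and storage columns are per-VM figures. The vRAM (256G) and storage (3838 GB) totals only add up under that reading. The `VmSpec` class and `totals` helper are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    """One row of the NAPP compute table; resource values are per VM (assumption)."""
    name: str
    count: int
    vcpus: int
    vram_gb: int
    storage_gb: int  # thin provisioned


# Values copied from the compute requirements table for NAPP.
NAPP_VMS = [
    VmSpec("Supervisor Control Plane VMs", 3, 4, 16, 32),
    VmSpec("TKC Control Plane VM", 1, 2, 8, 328),
    VmSpec("TKC Node VMs", 3, 16, 64, 1128),
    VmSpec("HA Proxy", 1, 2, 4, 20),
    VmSpec("NAPP Automation Appliance", 1, 1, 4, 10),
]


def totals(vms):
    """Return (vm_count, vcpus, vram_gb, storage_gb) summed as count x per-VM value."""
    return (
        sum(v.count for v in vms),
        sum(v.count * v.vcpus for v in vms),
        sum(v.count * v.vram_gb for v in vms),
        sum(v.count * v.storage_gb for v in vms),
    )


if __name__ == "__main__":
    vm_count, vcpus, vram_gb, storage_gb = totals(NAPP_VMS)
    print(f"{vm_count} VMs, {vcpus} vCPUs, {vram_gb} GB vRAM, {storage_gb} GB storage")
```

With the per-VM values in the table, this yields 9 VMs, 65 vCPUs, 256 GB of vRAM, and 3838 GB of thin-provisioned storage. That is the reservation to plan for in the collapsed cluster.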

The table below summarizes the network requirements of the NAPP deployment for the simple security for applications use case. Three new dedicated VLANs and subnets must be available and should not be shared with any other component (this is not a hard requirement, but it simplifies the IP allocation scheme). The physical network must route the three new subnets. They should have connectivity to the virtual infrastructure components (vCenter, NSX Manager, and ESXi hosts) and to the Internet (to reach the public registry hosting the NAPP images). The NAPP Automation Appliance can be placed on any network as long as it has IP connectivity to NSX Manager, vCenter, and the Tanzu Kubernetes Cluster. The preferred location is the management network where NSX Manager and vCenter reside. vCenter also requires Internet connectivity to download the Tanzu image library.

| Network | Range Size | Routable | Internet Connectivity | dvpg with the same VLAN ID |

| TKG Management | /27 or larger | Yes | No | Yes |

| TKG Workload | /27 or larger | Yes | Yes | Yes |

| TKG Front-End | /27 or larger | Yes | No | Yes |

Table 9: New networks required for NAPP deployment

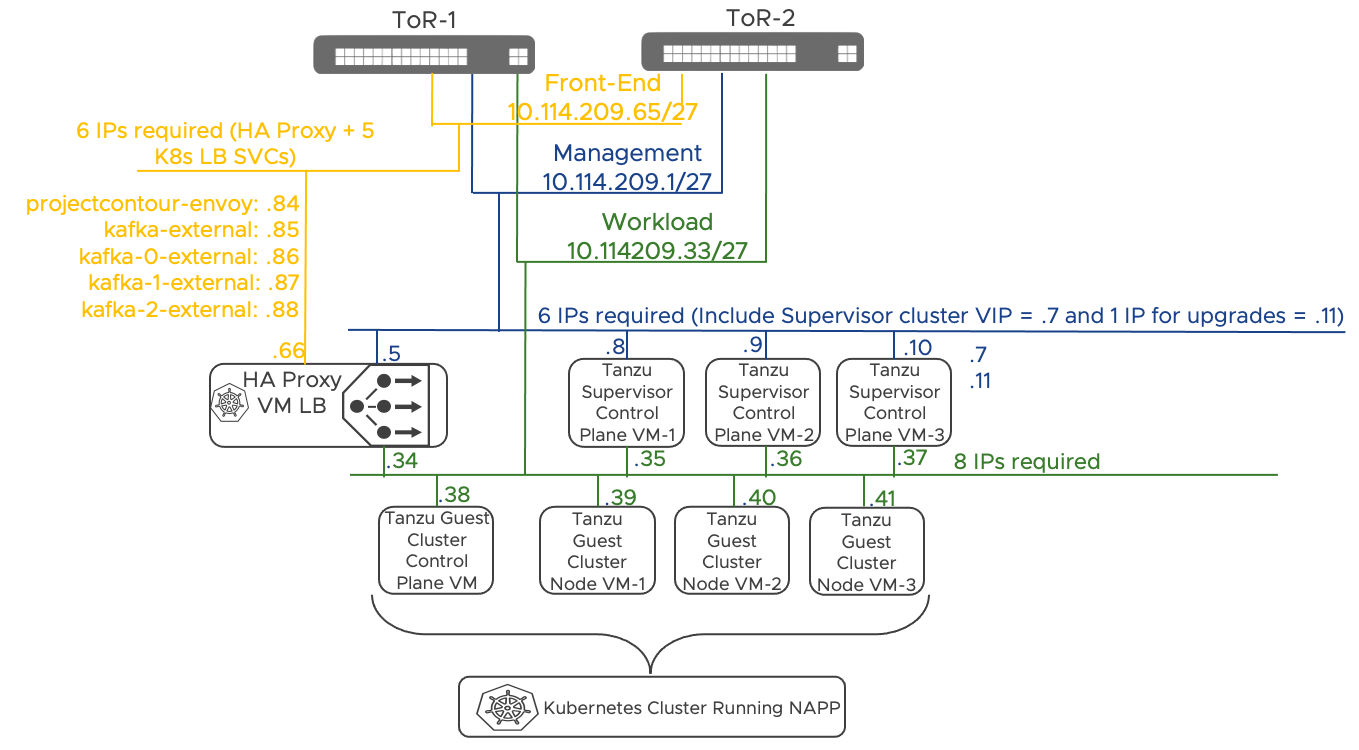

3.3.3.3 Sample NAPP Deployment IP Allocation

In this section we provide an example based on the following three subnets:

| Network | Range | Gateway |

| TKG Management | 10.114.209.0/27 | .1 |

| TKG Workload | 10.114.209.32/27 | .33 |

| TKG Front-End | 10.114.209.64/27 | .65 |

Figure 32: Sample NAPP IP Allocation
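The sample allocation follows a simple pattern: consecutive /27 blocks, with the first usable address of each block used as the gateway. The sketch below reproduces that pattern with Python's standard `ipaddress` module. The parent block `10.114.209.0/24` and the function name `carve_napp_subnets` are assumptions for illustration only. The `/27 or larger` guard mirrors the table of new networks required for NAPP.

```python
import ipaddress

NAPP_NETWORKS = ("TKG Management", "TKG Workload", "TKG Front-End")


def carve_napp_subnets(parent_cidr, names=NAPP_NETWORKS, prefixlen=27):
    """Allocate consecutive subnets from parent_cidr; gateway = first usable host."""
    if prefixlen > 27:
        raise ValueError("each NAPP network should be /27 or larger")
    subnets = ipaddress.ip_network(parent_cidr).subnets(new_prefix=prefixlen)
    plan = {}
    for name, subnet in zip(names, subnets):
        plan[name] = (subnet, next(subnet.hosts()))
    if len(plan) < len(names):
        raise ValueError(f"{parent_cidr} is too small for {len(names)} /{prefixlen} subnets")
    return plan


if __name__ == "__main__":
    # Hypothetical parent block that contains the three sample subnets.
    for name, (subnet, gateway) in carve_napp_subnets("10.114.209.0/24").items():
        print(f"{name:15} {subnet}  gateway {gateway}")
```

Applied to the assumed parent block, this returns the same three ranges and gateways as the sample table (.1, .33, and .65).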

3.3.3.4 Reference Resources

NSX Application Platform Automation Guide

A document that covers how to use the NSX Application Platform Automation Appliance to fully automate a NAPP deployment, including the required microservices environment, through a UI-based workflow that integrates directly into NSX Manager. Only the underlying infrastructure needs to be provided (vSphere 7.0, compute, network, and storage resources):

NSX Application Platform Automation Guide

NSX Application Platform Automation Appliance

The virtual appliance (OVA) referenced in the Automation Guide.

NSX Application Platform Automation Appliance

NSX Application Platform Deployment Guide

A detailed step-by-step guide on how to deploy NSX Application Platform aligned to VMware recommendations and best practices.

NSX Application Platform Deployment Guide

3.4 Data Center in a Box Solution Design

This design targets a small to medium-sized hardware footprint of between two and fifty hosts and is limited to a single pair of Top of Rack (ToR) switches. The workload must therefore fit within these boundaries and comprise fewer than 1000 virtual machines.

3.4.1 Assumptions

The solutions in this document make a set of assumptions about the physical networking, compute hardware, and supporting services. We made these assumptions to simplify the deployment and provide a quick path to consuming the use cases addressed by the solutions. Before modifying any of the configurations or assumptions described here, we recommend evaluating whether the benefit of the change outweighs the additional effort it may require.

3.4.1.1 Physical Network Assumptions

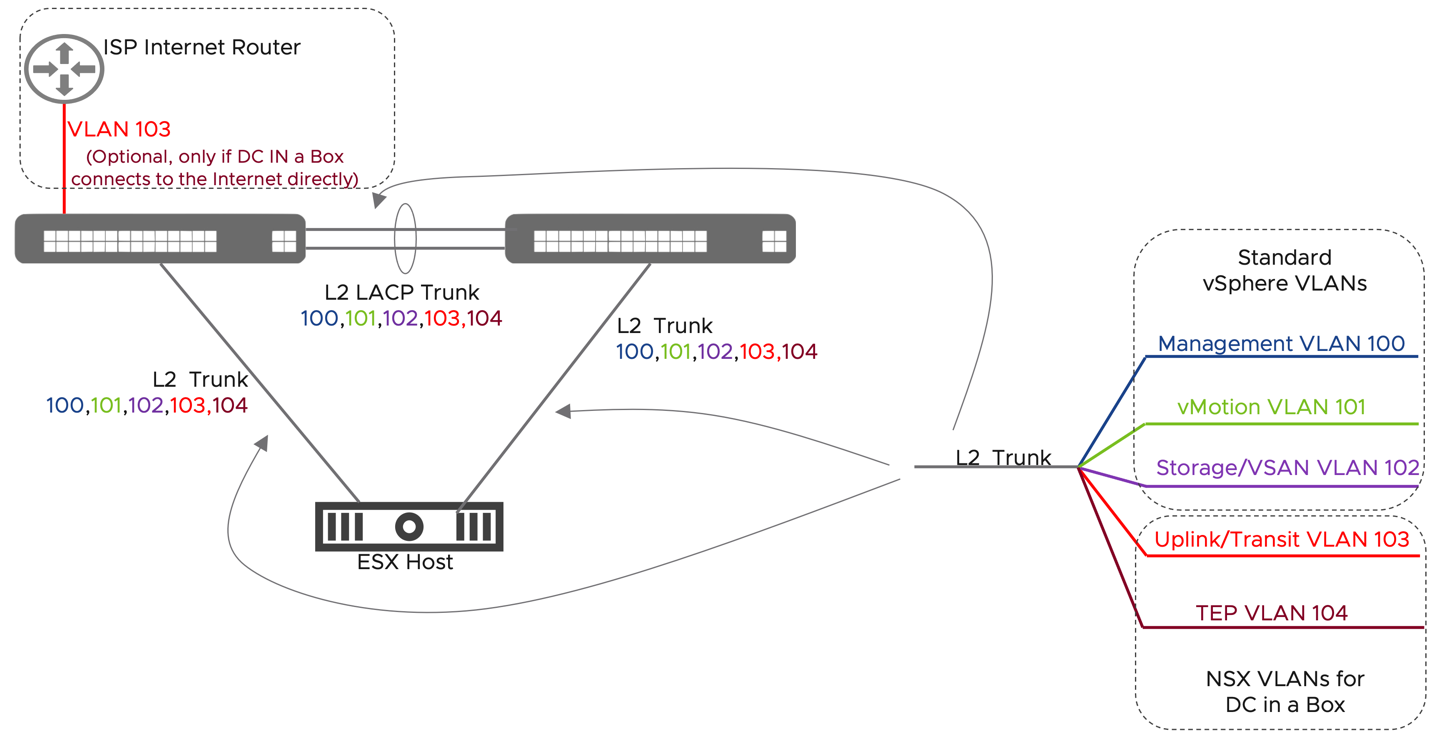

A base set of assumptions is made about the physical network to simplify the solutions in this guide. These assumptions are detailed in the table below and briefly summarized here.

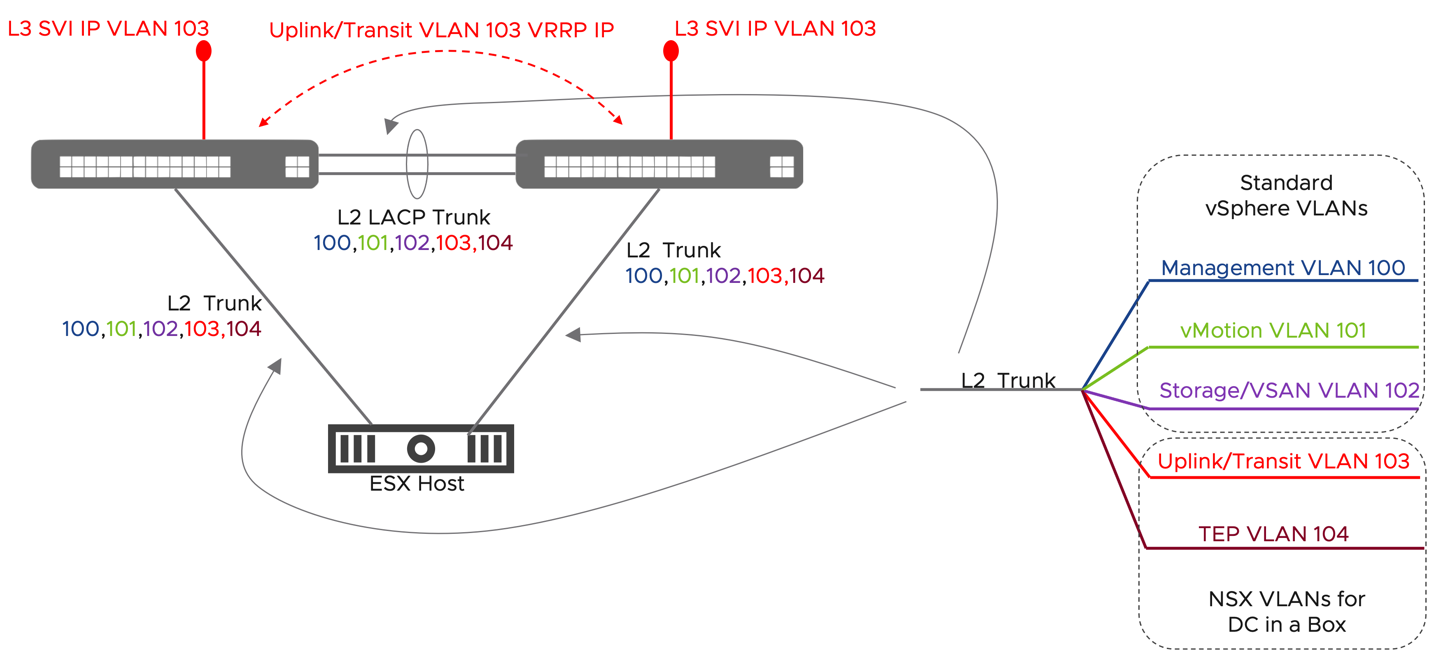

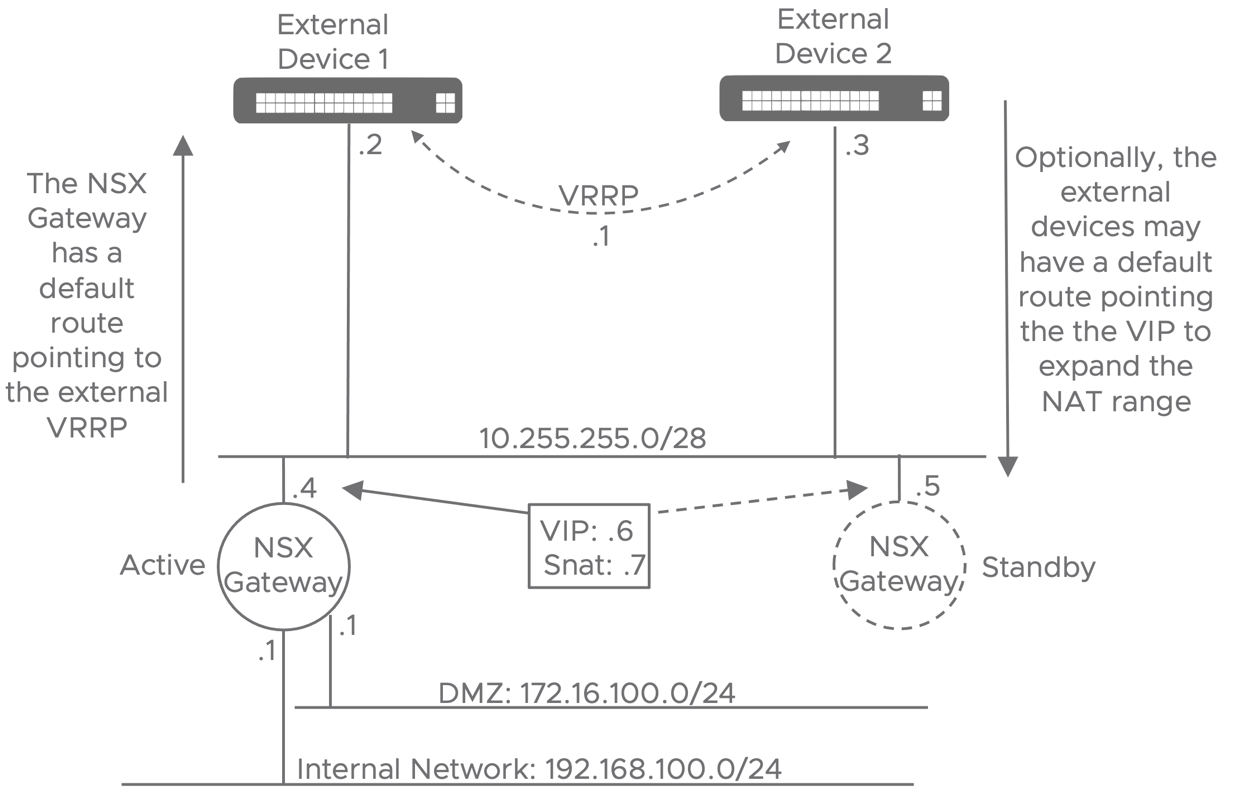

The physical network is limited to two top-of-rack switches, which may or may not provide Layer 3 functionality. Layer 2-only configurations are supported as long as VLAN trunking and jumbo frames are available. As with any vSphere deployment, three VLANs are recommended for ESXi VMkernel traffic, to segment management, vMotion, and storage traffic. In addition to those three VLANs, the DC in a Box solution requires two more: one for overlay traffic and one for the interconnection between the physical and virtual networks. The overlay VLAN does not need routing capabilities. The physical-to-virtual transit VLAN can be implemented at Layer 2 when the DC in a Box connects directly to the Internet. It may require a Layer 3 gateway when the ToR switches act as the external network. The diagrams below outline the two scenarios.

Figure 33: DC in a Box - Physical Network Assumptions, untrusted external network

Figure 34: DC in a Box - Physical Network Assumptions, trusted external network

| # | Assumption | Description | Justification |

| DB.AS.1 | Single ToR Pair Design with the ability to extend VLANs between them | This design assumes that the physical servers making up this environment all sit under a single redundant pair of switches. The design supports two 10 GbE links (25 GbE or greater recommended) to each server, which provides redundancy and reduces overall design complexity. | Decreased complexity around L2 vs L3 communication between components and a simpler overall design. |

| DB.AS.2 | Critical Network Services are Available | Critical network services are already available and running outside of this environment. These services include NTP, DNS, an LDAP authentication source, and a Syslog or Log Insight logging destination. | These services are not required to run outside of the environment, but that is the expected setup because it simplifies the deployment. |

| DB.AS.3 | MTU for TEP Network | The MTU on the TEP network is set to 9000 in NSX, and the ToR switches should be configured to match. If a lower value is chosen, it must be consistent end to end and at least 1700. | The overlay network is created using a set of Geneve tunnels between Transport Nodes. Geneve encapsulation adds headers to every frame, so the TEP network needs an MTU larger than the standard 1500 bytes. |

| DB.AS.4 | The management network is provided by the physical infrastructure and must be extended between the two ToR switches | A management VLAN is configured on the two ToR switches. The management interfaces of the deployed NSX components must be placed on this underlay network. | The NSX Manager and the Edge node VMs must be connected to VLANs rather than to overlay segments, to avoid circular dependencies. |

| DB.AS.5 | 3 IP Addresses available on the Management Network | Three IP addresses are required for the management interfaces of the single NSX Manager and the two NSX Edge node VMs. | Management network connectivity is required for all NSX components. |

| DB.AS.6 | New Transit VLAN and Subnet (/28 or larger) | This VLAN is dedicated to traffic between the physical network and the NSX Edge nodes. It includes the range of NAT IPs used to access instances in the DC in a Box, so a larger subnet allows more VMs to be accessed directly. The minimum /28 range allows 7 VMs to be reachable on unique IPs. The VLAN must be routable only if the ToR switches connect to the external network, for example when they are connected to a larger data center network or a private WAN circuit. If the DC in a Box is isolated and linked to an untrusted network (e.g., the Internet), the ToR switches only need to provide Layer 2 transport. See the Layer 3 logical design for more details about the two options. | Isolating the physical-to-virtual traffic in a dedicated broadcast domain reduces the risk of inadvertently affecting it during normal operations. |

| DB.AS.7 | New non-routable TEP VLAN and Subnet (/24) | A new /24 IP range and VLAN must be assigned for the tunnel endpoint interfaces on the ESXi hosts and Edge VMs. A default gateway does not need to be configured on the ToR switches, and DHCP is not required for this VLAN because NSX Manager assigns the TEP IPs from a configured IP pool. | Tunnel endpoint interfaces (TEPs) are required for network virtualization. Hosts and Edge VMs only create Geneve tunnels with one another, so there is no need to route the network externally. Besides simplifying the physical network configuration, isolating the TEP network provides a simple but effective way of securing the overlay network from external tampering. |

Table 10: Physical Network Assumptions
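The MTU rules are easy to get wrong during cabling and switch configuration. DB.AS.3 above and DB.AS.18 in the virtual environment assumptions define them: the VDS carries the value, which must be between 1700 and 9000 bytes, and the ToR switches must match it. The sketch below encodes those rules as a pre-deployment check. The function name is hypothetical. The check also assumes that "match" means an identical numeric value on both switches, which may need adjusting on platforms that count frame headers differently.

```python
MIN_TEP_MTU = 1700  # lower bound from DB.AS.3
MAX_VDS_MTU = 9000  # maximum supported by vSphere (DB.AS.18)


def check_tep_mtu(vds_mtu: int, tor_mtus: list[int]) -> list[str]:
    """Return a list of problems; an empty list means the MTU plan is consistent."""
    problems = []
    if not MIN_TEP_MTU <= vds_mtu <= MAX_VDS_MTU:
        problems.append(f"VDS MTU {vds_mtu} is outside {MIN_TEP_MTU}-{MAX_VDS_MTU}")
    # Assumed rule: every ToR switch carries exactly the VDS value.
    for index, tor_mtu in enumerate(tor_mtus, start=1):
        if tor_mtu != vds_mtu:
            problems.append(f"ToR switch {index} MTU {tor_mtu} does not match VDS MTU {vds_mtu}")
    return problems


if __name__ == "__main__":
    print(check_tep_mtu(9000, [9000, 9000]))  # recommended design
    print(check_tep_mtu(1500, [1500, 1500]))  # too small for the Geneve overlay
```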

3.4.1.2 Compute Assumptions

For the solutions described in this document, a set of assumptions has been made about the compute environment. These assumptions cover the physical ESXi servers themselves.

| # | Assumption | Description | Justification |

| DB.AS.8 | Network adapters in the compute hardware are fully supported by NSX | VMware and the hardware vendors work together to maintain an IO Devices Compatibility List that specifies which components are supported, with which features, and at which driver and firmware levels. NSX specifically calls out a set of features that network adapters require to achieve the expected performance levels. Follow the guidance in Section 8.4 of the NSX Reference Design Guide for which features are required and how to validate that they are available. | Network adapter support for overlay offloads and enhancements is mandatory for NSX virtualization performance. |

| DB.AS.9 | Each vSphere host has two (2) pNICs available to ESXi and a single VDS. | All designs that leverage this foundational hardware platform assume that each host has a single VDS configured with two pNICs assigned to it. This VDS is used for in-band management and data plane traffic. Out-of-band management traffic, such as an IPMI, iLO, CIMC, or iDRAC interface, is specific to the hardware vendor and out of scope for this design. Remote management capabilities on the physical servers are, however, highly recommended for operational reasons. | The justification for two (2) pNICs in this design is based on DB.AS.11 below, from a redundancy and availability perspective. This solution can be extended to 4 pNICs to address isolation and performance requirements. However, that scenario is not covered here, to keep the use case simple and general. |

| DB.AS.10 | Shared Storage is available to all Compute Hardware in a Cluster | NSX Manager and NSX Edge node VMs should reside on persistent shared storage. In the unlikely event of a physical server failure, they then remain available and are automatically recovered through a service such as vSphere High Availability. NSX has capabilities to address these types of failures without shared storage, but this document assumes shared storage for simplicity of deployment and operations. | This document does not address methods of recovery from data loss in the event of single host failures. It therefore assumes that all hosts in a single cluster are connected to shared storage, allowing VM-level recovery to other nodes in the cluster using vSphere High Availability (HA). |

| DB.AS.11 | Component level redundancy is built into each Compute Node | A base level of availability should be built into every hardware component of this system. This includes:

Redundancy at the RAM, disk controller, and other layers may be implemented if deemed necessary. | Because an individual component failure can bring down a portion of the environment, all reasonable steps should be taken to prevent such failures and ensure the availability of the system as a whole. |

| DB.AS.12 | Scale Requirements, Two Host Minimum and 50 Host Maximum | Solutions in this document require a minimum of two hosts and are intended for a maximum of 50 hosts. | The lower bound is imposed by availability requirements and the need to reduce single points of failure in the environment. The upper bound is set by the limitations of a single Top of Rack (ToR) switch pair. |

Table 11: Compute Assumptions
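DB.AS.7 reserves a /24 for TEPs, and DB.AS.12 caps the cluster at 50 hosts. A rough capacity check shows why a /24 leaves ample headroom. The sketch below assumes two TEPs per ESXi host and per Edge VM (one per uplink, a common choice with two pNICs). That TEP count and the helper names are assumptions to adjust to the actual uplink profile. The two Edge VMs come from DB.AS.5.

```python
import ipaddress

MIN_HOSTS, MAX_HOSTS = 2, 50  # DB.AS.12
EDGE_NODES = 2                # two NSX Edge node VMs (DB.AS.5)


def tep_addresses_needed(hosts: int, teps_per_node: int = 2) -> int:
    """TEP IPs consumed by the ESXi hosts plus the Edge VMs.

    teps_per_node=2 is an ASSUMPTION (one TEP per uplink), not a value from this guide.
    """
    if not MIN_HOSTS <= hosts <= MAX_HOSTS:
        raise ValueError(f"{hosts} hosts is outside the {MIN_HOSTS}-{MAX_HOSTS} design range")
    return (hosts + EDGE_NODES) * teps_per_node


def pool_fits(pool_cidr: str, hosts: int, teps_per_node: int = 2) -> bool:
    """True if the subnet's usable addresses (minus network/broadcast) cover all TEPs."""
    usable = ipaddress.ip_network(pool_cidr).num_addresses - 2
    return tep_addresses_needed(hosts, teps_per_node) <= usable


if __name__ == "__main__":
    # Hypothetical TEP subnet; substitute the /24 assigned per DB.AS.7.
    print(tep_addresses_needed(50), pool_fits("172.16.10.0/24", 50))
```

Even at the 50-host ceiling, this assumption consumes (50 + 2) x 2 = 104 addresses, well within the 254 usable addresses of a /24.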

3.4.1.3 Virtual Environment Assumptions

The solutions in this document all assume a vSphere 7.x-based environment inside a single vSphere Cluster. This cluster is used in a homogeneous manner to present hosting, network, and security services to the workloads that run on top of it. From an NSX perspective, this type of environment is called a Fully Collapsed Cluster design. The management workloads for the environment, the production workloads running on it, and any network and security services provided to those workloads are all hosted on a single cluster. To enable this design, the following assumptions are made about the virtual environment.

Figure 35: DC in a Box - Virtual Environment Assumptions

| # | Assumption | Description | Justification |

| DB.AS.13 | The vSphere environment is running version 7.0u3 or later. | The vCenter Server and ESXi hosts run software version 7.0u3 or later. | vSphere 7 is required because VDS 7 dramatically simplifies the implementation of NSX on a collapsed cluster design. Patch levels later than 7.0u3 will most likely work but have not been validated. |

| DB.AS.14 | vCenter Server appliance deployment size is at least Small. | The Small vCenter appliance supports up to 100 hosts and 1000 VMs. | The Small size supports the upper boundary of the solution in scope. |

| DB.AS.15 | vCenter Server is deployed on the Management Network VLAN and connected to a vCenter-managed dvpg. | vCenter Server connectivity is provided by the physical network and is completely independent from NSX. | Provides a direct, secure connection to the ESXi hosts and NSX Manager and removes any dependency on NSX networking. |

| DB.AS.16 | Collapsed Cluster Design | Single vSphere collapsed cluster where Management appliances, NSX Edge Node VMs, and Workloads all reside on the same cluster. | Maximizes available resource utilization. |

| DB.AS.17 | Single VDS 7.0 with two uplinks | A single VDS must be present in the cluster, and its version must be 7.0. | VDS allows NSX services to be implemented without deploying an additional virtual switch (N-VDS). It also simplifies the collapsed cluster design by allowing the management appliances to be placed on vCenter-managed port groups. |

| DB.AS.18 | VDS MTU set to 9000 Bytes | When NSX integrates with VDS, the MTU value is inherited from the VDS, so the NSX uplink profile must not have any value for the MTU setting. | 9000 is the maximum supported in vSphere. It should match the value set on the physical network. |

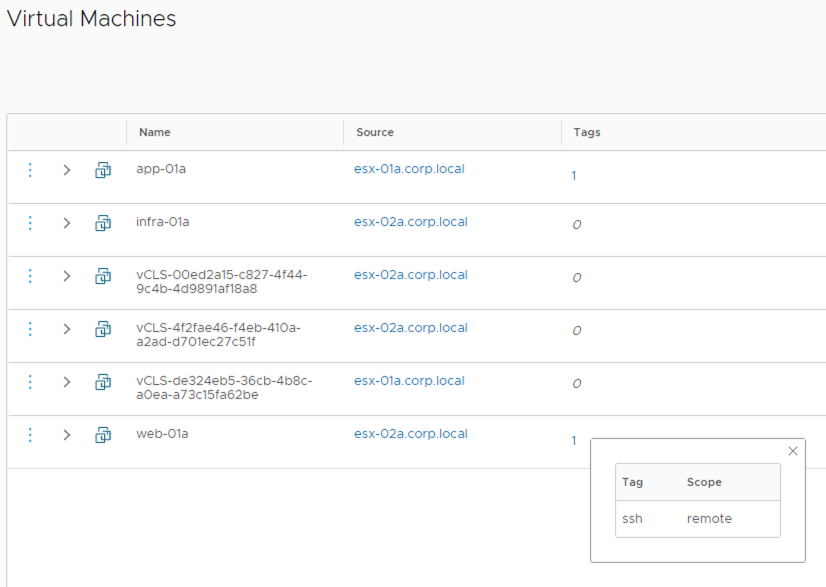

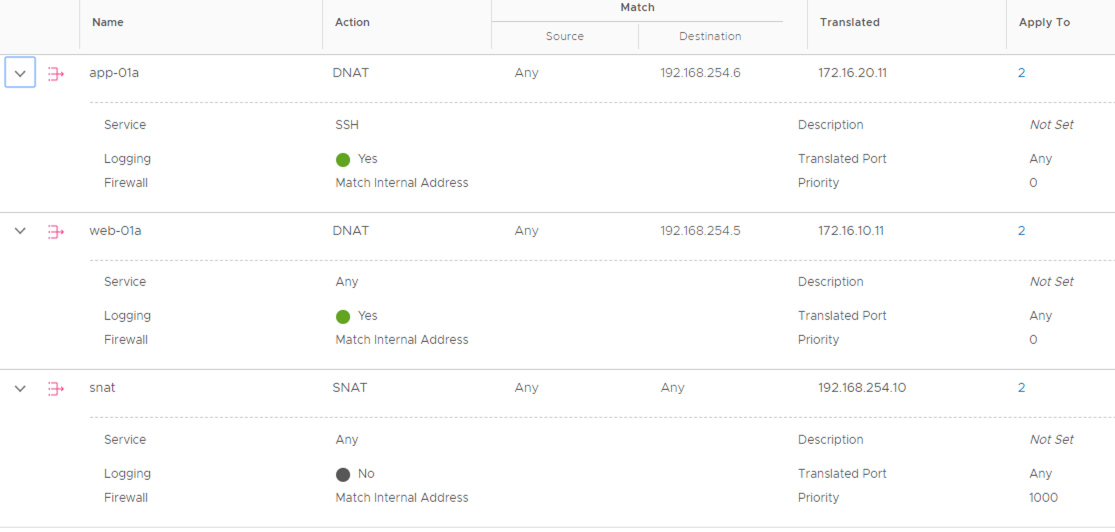

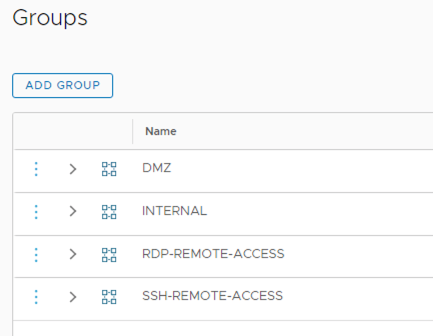

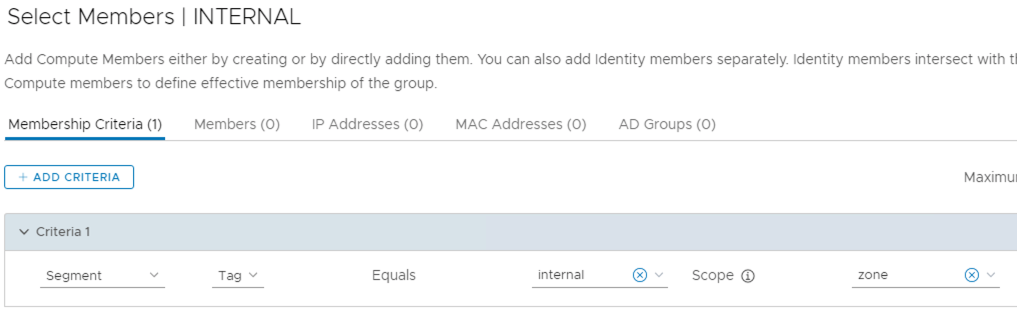

| DB.AS.19 | vSphere HA is enabled | Use vSphere HA to protect all virtual machines against failures. | vSphere HA provides robust availability protection for the NSX components. |